AFTERIMAGE: Final Project

By Deren Guler & Luke Loeffler

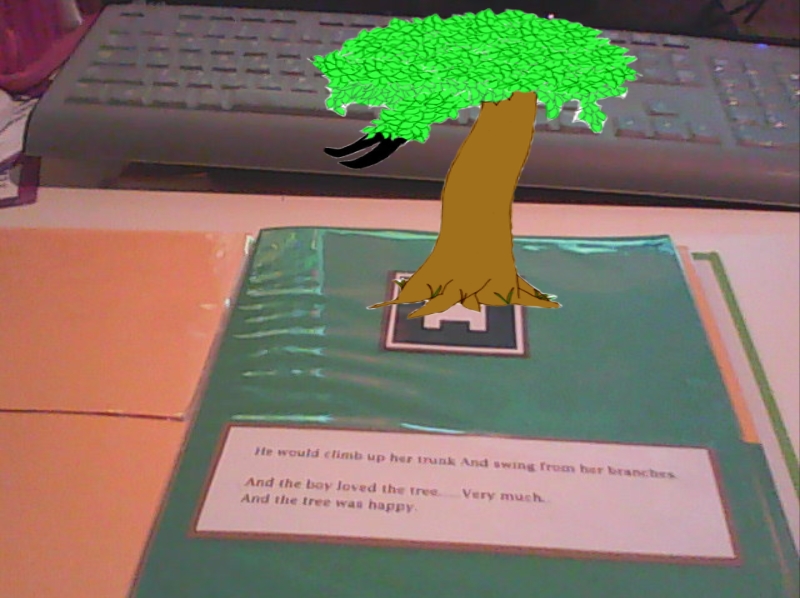

AFTERIMAGE transforms your iPad into an autostereoscopic time-shifting display. The app records 26 frames, which are interlaced and viewed through a lenticular sheet, so you can tilt the screen back and forth (or close one eye and then the other) to see a 3D animated image.

We wanted to create a tangible interaction with 3D images and use it as a tool for a virtual/real effect that alters our perception. While looking into techniques, Deren came across lenticular imaging and remembered all of the awesome lenticular cards and posters from her childhood. A lenticular image is a composite of several images spliced together, with a lenticular sheet placed on top so that different images, and therefore different effects, are visible from different viewing angles.

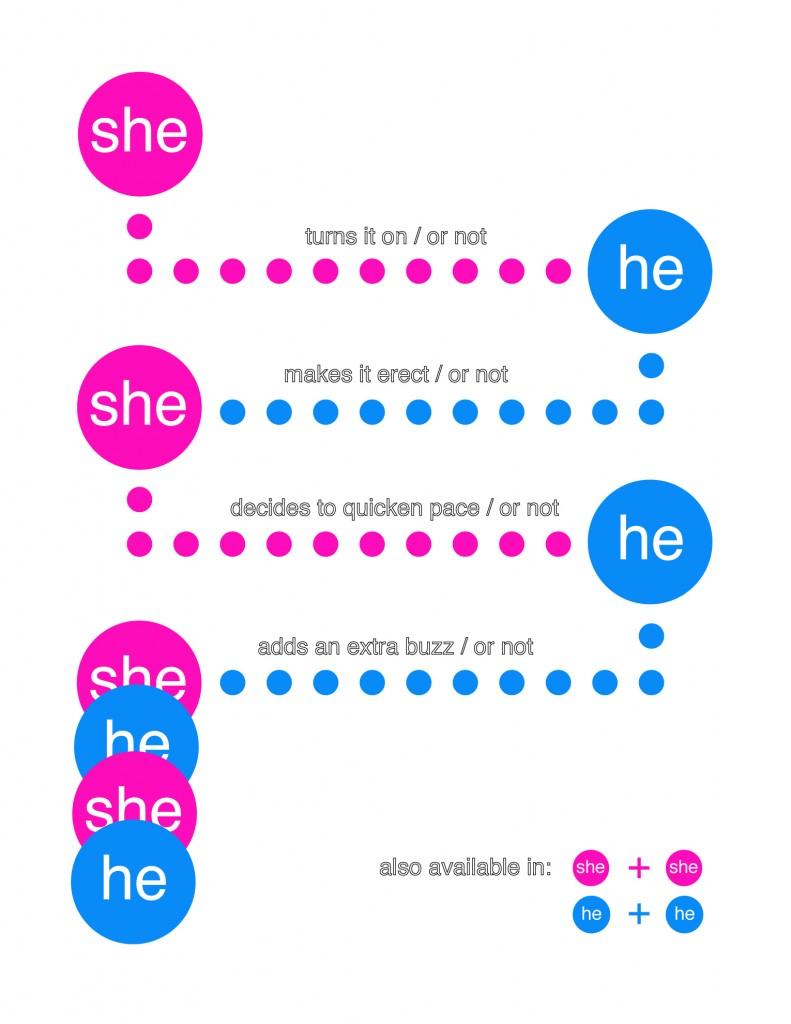

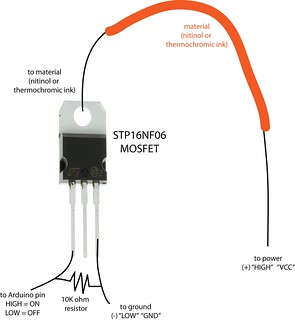

The images are interleaved in openFrameworks following this basic model (from Paul Bourke):
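As a rough illustration of column interleaving (a hypothetical sketch, not the project's actual openFrameworks code), the standard-library C++ below assumes grayscale frames of equal size and a lens pitch expressed in screen pixels. `pixelsPerLens` is roughly the screen's pixels per inch divided by the sheet's lines per inch and may be fractional; the names `Frame`, `frameForColumn`, and `interlace` are made up for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// A grayscale frame stored row-major: pixels[y * width + x].
struct Frame {
    int width = 0;
    int height = 0;
    std::vector<std::uint8_t> pixels;
};

// Pick the source frame for output column x. pixelsPerLens is how many
// screen pixels one lenticule spans (it can be fractional); the position
// of x under its lens, from 0 to just under 1, selects one of frameCount frames.
int frameForColumn(int x, double pixelsPerLens, int frameCount) {
    double phase = std::fmod(static_cast<double>(x), pixelsPerLens) / pixelsPerLens;
    int k = static_cast<int>(phase * frameCount);
    return k < frameCount ? k : frameCount - 1; // guard against rounding at the lens edge
}

// Interleave equally sized frames column by column.
Frame interlace(const std::vector<Frame>& frames, double pixelsPerLens) {
    assert(!frames.empty() && pixelsPerLens > 0.0);
    const int n = static_cast<int>(frames.size());
    Frame out;
    out.width = frames[0].width;
    out.height = frames[0].height;
    out.pixels.assign(frames[0].pixels.size(), 0);
    for (int x = 0; x < out.width; ++x) {
        const Frame& src = frames[frameForColumn(x, pixelsPerLens, n)];
        for (int y = 0; y < out.height; ++y) {
            out.pixels[y * out.width + x] = src.pixels[y * src.width + x];
        }
    }
    return out;
}
```

For example, with two frames and `pixelsPerLens = 2.0`, the output columns alternate between frame 0 and frame 1. Depending on how the sheet sits on the screen, the frame order under each lens may need to be reversed.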

Deren was thinking about making a real-time projected display using a camera and a short-throw projector. Then she talked to Luke, who suggested making an iPad app instead. His project was to make an interactive iPad app that makes you see the iPad differently, so there was a good overlap and we decided to team up. We started thinking about the advantages of using an iPad and how the lenticular sheet could be attached to the device.

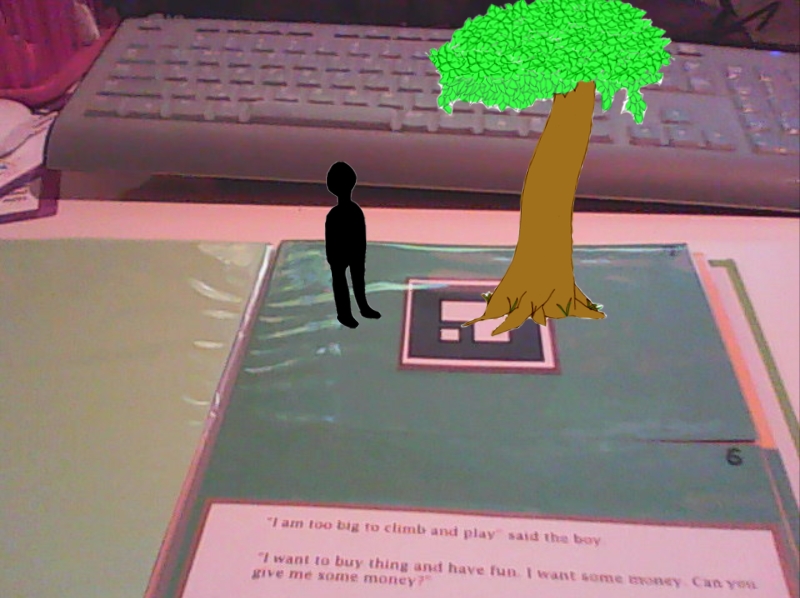

The first version took two views, the current camera feed and the camera feed from 5 seconds earlier, and showed them as an animated GIF-style video. We wanted to see whether seeing the past and present at the same time would create an interesting effect, perhaps something like Camille Utterback’s Shifting Time: http://camilleutterback.com/projects/shifting-time-san-jose/
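Pairing the live feed with a delayed one can be sketched with a ring buffer. This hypothetical C++ version (not the original code) assumes a fixed camera frame rate. The oldest slot lags the newest frame by `capacity - 1` pushes, so at an assumed 30 fps a 5-second delay needs a capacity of 30 × 5 + 1 = 151.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Fixed-length ring buffer: push the newest camera frame, read back the
// frame captured capacity - 1 pushes earlier.
template <typename T>
class DelayBuffer {
public:
    explicit DelayBuffer(std::size_t capacity)
        : slots_(capacity), next_(0), count_(0) {
        assert(capacity > 0);
    }

    // Store the newest frame, overwriting the oldest once the buffer is full.
    void push(const T& frame) {
        slots_[next_] = frame;
        next_ = (next_ + 1) % slots_.size();
        if (count_ < slots_.size()) ++count_;
    }

    // True once enough frames exist to provide the full delay.
    bool ready() const { return count_ == slots_.size(); }

    // Oldest frame still held. With capacity = fps * delaySeconds + 1 and a
    // full buffer, this is the frame from delaySeconds ago.
    const T& oldest() const {
        assert(count_ > 0);
        return slots_[ready() ? next_ : 0];
    }

private:
    std::vector<T> slots_;
    std::size_t next_;   // slot the next push will write (the oldest when full)
    std::size_t count_;  // number of slots written so far
};
```

The newest frame and `oldest()` then form the two views. Holding around 150 full-resolution frames takes a lot of memory, so a practical version would probably store downscaled frames.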

The result was neat, but it didn’t work very well and wasn’t a very engaging app. Here is a video of feedback from the first take: afterimage take1

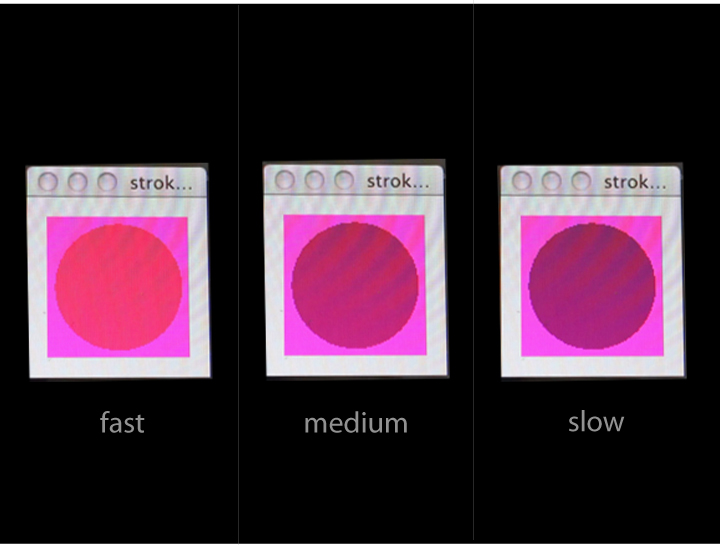

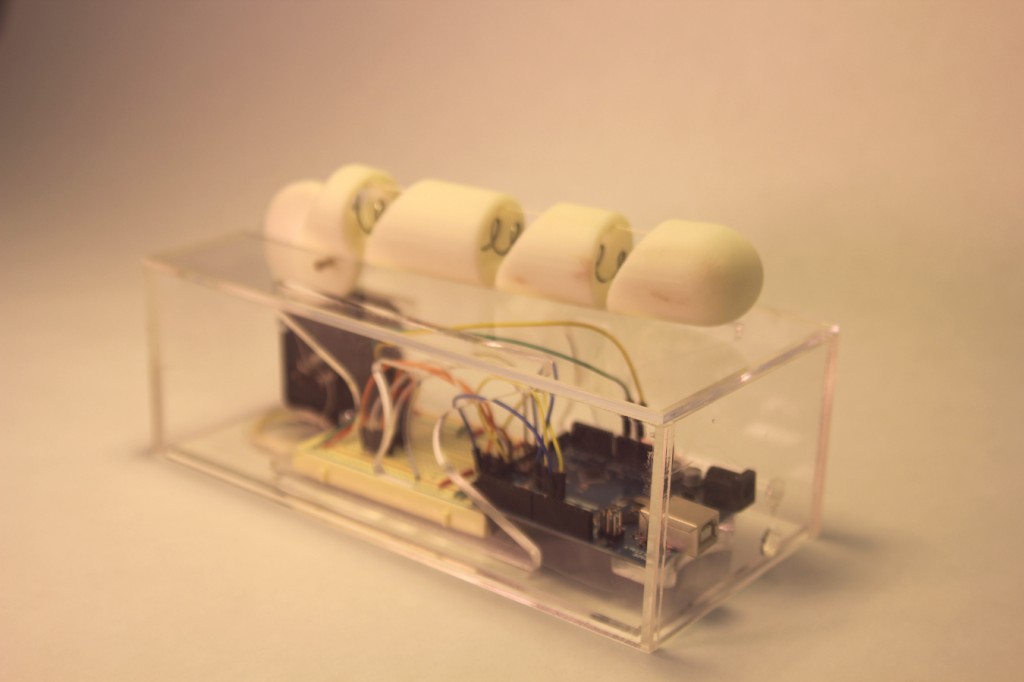

Then we decided to create a more self-contained experience that you can record, and developed the idea behind Afterimage. The new version transforms your iPad into an autostereoscopic time-shifting display. The app uses either the rear- or front-facing camera on the iPad to record 26 frames at a time. The frames are then interlaced and viewed through a lenticular sheet with a pitch of 10 lines per inch. A touch interface lets you simply slide your finger along the bottom of the screen to record video. You can rerecord any segment by placing your finger on that frame and capturing a new image. When you are satisfied with your image series, you can tilt the screen back and forth (or close one eye and then the other) to see a 3D animated GIF.
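The record strip can be modeled as the bottom edge of the screen divided into 26 equal segments, with each touch mapped to the segment under the finger. The C++ sketch below is a hypothetical illustration, not the app's actual touch handling: `FrameStrip`, its methods, and the strip width are assumptions, and `Image` stands in for whatever (default-constructible) camera frame type the app uses.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical record strip: the bottom edge of the screen is split into
// equal segments, one per frame slot (26 in Afterimage).
template <typename Image>
class FrameStrip {
public:
    FrameStrip(std::size_t slotCount, double stripWidth)
        : slots_(slotCount), filled_(slotCount, false), stripWidth_(stripWidth) {
        assert(slotCount > 0 && stripWidth > 0.0);
    }

    // Map a touch x-coordinate along the strip to a slot index, clamping
    // touches that land outside the strip to the first or last slot.
    std::size_t slotAt(double touchX) const {
        if (touchX <= 0.0) return 0;
        std::size_t i = static_cast<std::size_t>(touchX / stripWidth_ * slots_.size());
        return i < slots_.size() ? i : slots_.size() - 1;
    }

    // On each touch event, store the current camera image in the slot under
    // the finger; touching a filled slot again simply rerecords it.
    void captureAt(double touchX, const Image& cameraImage) {
        std::size_t i = slotAt(touchX);
        slots_[i] = cameraImage;
        filled_[i] = true;
    }

    bool isFilled(std::size_t i) const { return filled_.at(i); }

    // True once every slot holds an image, i.e. the series can be interlaced.
    bool complete() const {
        for (bool f : filled_) {
            if (!f) return false;
        }
        return true;
    }

    const Image& slot(std::size_t i) const { return slots_.at(i); }

private:
    std::vector<Image> slots_;
    std::vector<bool> filled_;
    double stripWidth_;
};
```

Because `captureAt` overwrites whatever is in the slot under the finger, rerecording a segment uses the same code path as the first pass along the strip.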

Afterimage takes the iPad, a new and exciting computational tool, and combines it with one of the earliest autostereoscopic viewing techniques to create an interactive, unencumbered autostereoscopic display.

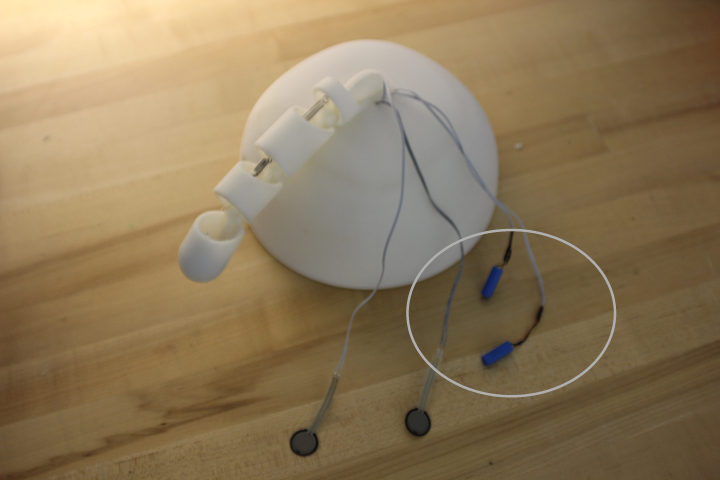

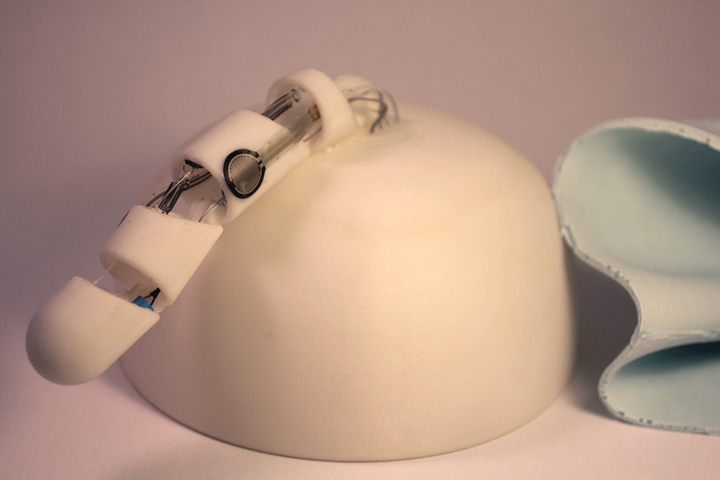

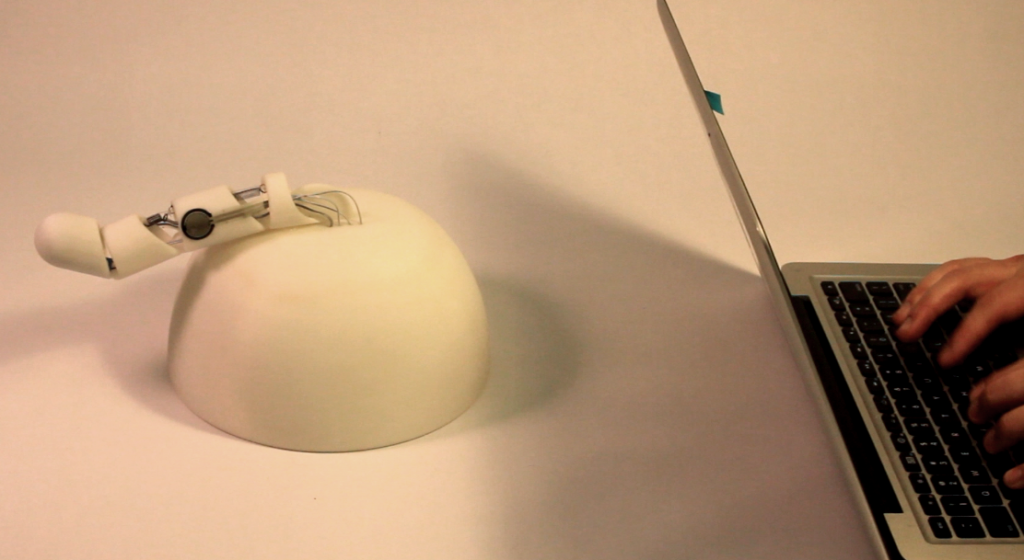

We experimented with different ways to attach the lenticular sheet to the iPad and decided that it would be best to make something that snaps on magnetically, like the existing screen covers. Since the iPad would be moving around a lot, we didn’t want to add much bulk. We cut an acrylic frame that fits around the screen and placed the lenticular sheet on this frame. This way the sheet is held fairly securely, and the user can comfortably grab the edges and swing the iPad around.

The result is fun to play with, though we are not sure what the future directions may be in terms of adding this to an existing process or tool for iPad users.

We also added a record feature that allows you to swipe across the bottom of the iPad screen to capture different frames and then “play them back” by tilting the screen back and forth. This seemed to work better than the past/real-time movie, especially when the images were fairly similar.