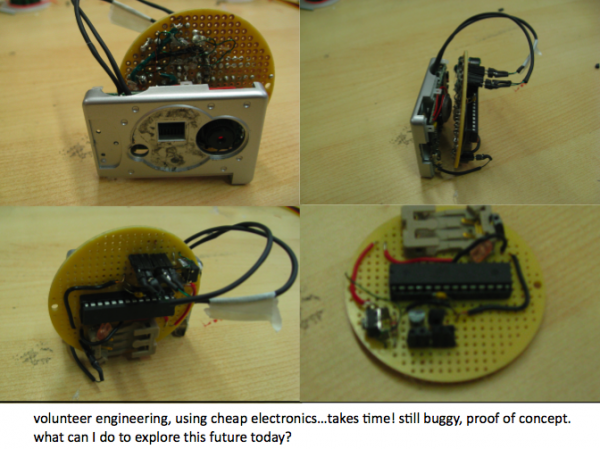

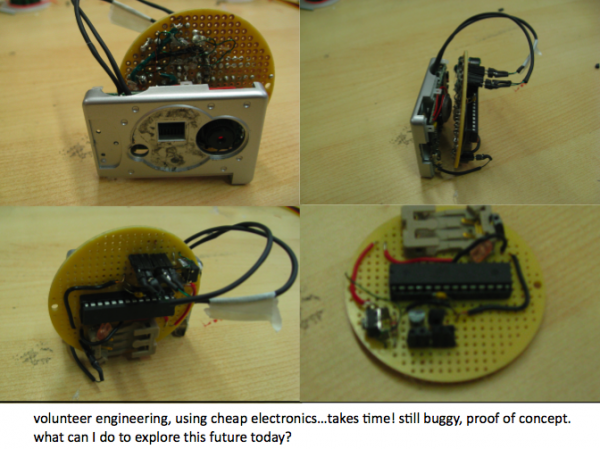

For my final project, I hope to continue my work in urban computing and time-lapse photography. In particular, I’ve been working on a cheap time-lapse camera that people could use to “sense” the world around them. Such a device would cost around $10, when all is said and done, and having many of them would open up options for public computing that people have not yet considered: a world where people are willing to deploy “personal, public” electronics throughout their environment with the same ubiquity, recyclability and reusability as paper.

That said, getting a camera to a usable state where one could seriously explore this future would take time. I’ve created a proof-of-concept camera to test how seriously my idea can be taken today with open-hardware techniques, but the question remains: how do I explore what might be possible in the future using today’s technology? In the seven weeks of this project, it’s infeasible to first refine my open-source hardware to a usable state and only THEN explore the possibilities of personal, public computing on that same hardware.

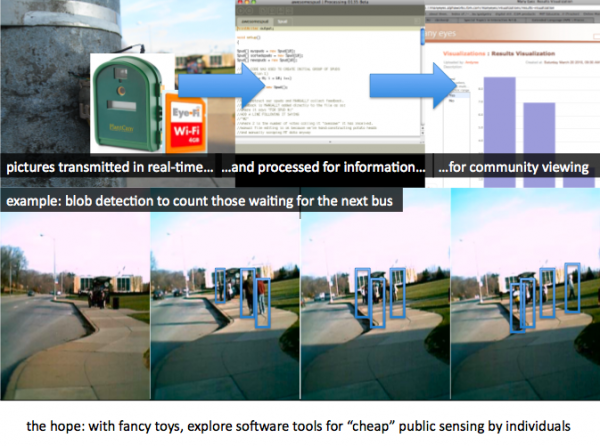

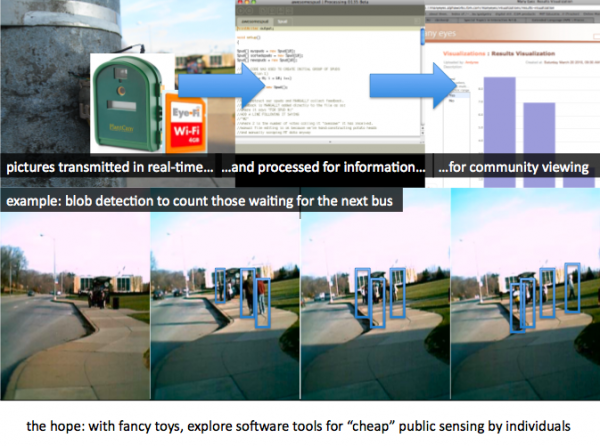

My solution? Cobble together existing off-the-shelf hardware to do the task for me. I hope to combine the Wingscapes PlantCam ($80) with the Eye-Fi wireless SD card ($40) to give myself a slightly expensive “prototype” hardware platform for taking time-lapse photography in public spaces. I will then create a server application and website for time-lapse photo processing that, combined with the hardware, makes a reasonable case for why a cheap, open-hardware time-lapse photography kit makes sense for individuals in the community.

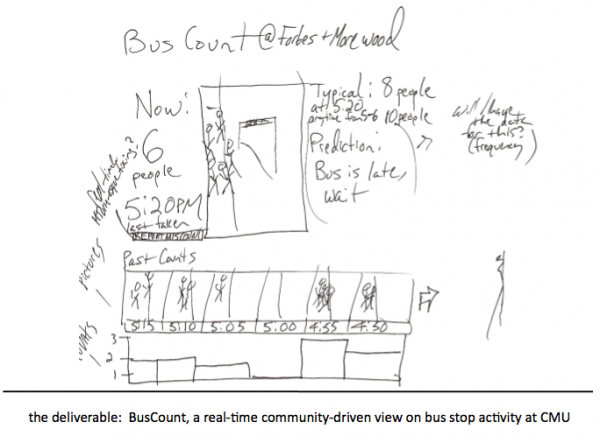

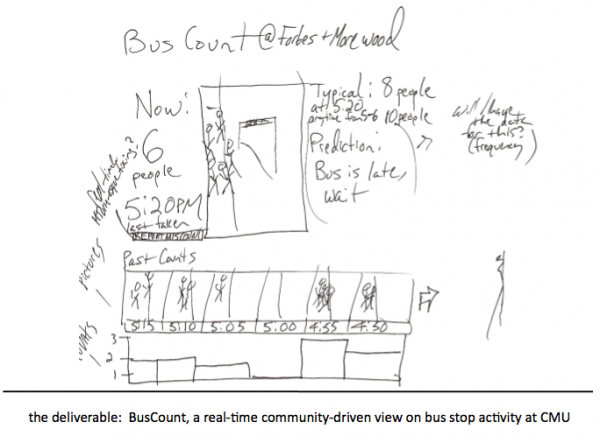

By the end of this class, I hope to have worked out the kinks in this prototype hardware and to have deployed a working hardware and software setup that regularly counts people at the CMU bus stop and shares that count with commuters around CMU through a publicly available website. The thought is that people can safely assume the bus has already left if no one is waiting at the bus stop, and that the bus is running late and will arrive soon if more people than normal are waiting. A rough sketch of what such a website might feel like is below.

(Note: I reserve the right to rework this project as PamelasCount, or LaundryCount, or whatever allows me to complete a successful intervention in the time allotted that still taps into the “zeitgeist” of personal public computing.)
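The bus stop reasoning above (nobody waiting means the bus has probably left; an unusually large crowd means it is probably late and due soon) could be expressed as a small rule on the server. This is only a sketch under stated assumptions: the function name `bus_status`, the message strings, and the 1.5x "more than normal" cutoff are all hypothetical placeholders, not something the project has settled on.

```python
from statistics import mean


def bus_status(current_count, typical_counts, late_factor=1.5):
    """Guess the bus situation from how many people are waiting.

    current_count  -- people counted in the latest photo
    typical_counts -- past counts for this time of day (assumed to exist)
    late_factor    -- assumed cutoff for "more people than normal"
    """
    if current_count == 0:
        # Nobody waiting: the bus has most likely just come and gone.
        return "bus has probably already left"
    if not typical_counts:
        # No history yet, so there is no "normal" to compare against.
        return "no strong signal"
    typical = mean(typical_counts)
    if current_count > late_factor * typical:
        # A bigger crowd than usual suggests the bus is overdue.
        return "bus is probably late and arriving soon"
    return "no strong signal"


if __name__ == "__main__":
    history = [3, 4, 5]  # made-up example counts
    for count in (0, 4, 10):
        print(count, "->", bus_status(count, history))
```

In practice the cutoff would have to be tuned against real counts from the bus stop, and the history would probably need to be bucketed by time of day and day of week.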

Development Plan:

Mon, March 22nd (Today, and My Birthday): Deliver this pitch. Done!

Thur, March 25th: Have hardware acquired and functional (PlantCam/Eye-Fi). Spend lots of money to get things quickly. Try to get other projects interested in the equipment so they can reimburse me.

Wed, March 31st: Prove that pictures can be transmitted with PlantCam/Eye-Fi to a local machine or to Flickr.

Fri, April 2nd: Prove pictures can be transmitted over public wifi (CMU, Sq Hill)

Mon April 6th: Have blob detection working with “test photos” (taken without PlantCam/Eye-Fi)

Fri April 9th: Have blob detection work in real time with PlantCam/Eye-Fi photos.

Sat April 10th: Begin work on public-facing website.

Sun April 11th-Fri April 16th: CHI in Atlanta. Work on website during boring talks.

Freak out, recognize irony of being crunched to finish further work in Personal Public Computing because of presenting previous work in Personal Public Computing.

Fri April 16th: Plug real photos, blob detection data into public-facing website.

Mon April 19th: Present finished work, or beg for forgiveness/extension.
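For the blob detection milestones in the plan, one simple starting point with the “test photos” is background subtraction followed by connected-component counting. The sketch below is an assumption about how that might look, not the project's chosen method: it works on plain grayscale grids (lists of lists of 0-255 values) rather than real camera files, and the names `count_blobs`, `threshold`, and `min_area` are illustrative.

```python
from collections import deque


def count_blobs(frame, background, threshold=40, min_area=3):
    """Count connected regions where `frame` differs from `background`.

    frame, background -- equally sized 2D lists of grayscale values (0-255)
    threshold         -- assumed minimum brightness change to count as "changed"
    min_area          -- assumed minimum pixel count, to ignore speckle noise
    """
    rows, cols = len(frame), len(frame[0])
    # Foreground mask: pixels that changed noticeably from the empty scene.
    mask = [
        [abs(frame[r][c] - background[r][c]) > threshold for c in range(cols)]
        for r in range(rows)
    ]
    seen = [[False] * cols for _ in range(rows)]
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill over 4-connected foreground pixels.
            area = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                area += 1
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if area >= min_area:
                blobs += 1
    return blobs


if __name__ == "__main__":
    empty = [[0] * 8 for _ in range(6)]      # synthetic "empty bus stop"
    photo = [row[:] for row in empty]
    for r, c in [(1, 1), (1, 2), (2, 1), (2, 2)]:
        photo[r][c] = 200                    # first synthetic "person"
    for r, c in [(3, 5), (3, 6), (4, 5), (4, 6)]:
        photo[r][c] = 180                    # second synthetic "person"
    photo[0][7] = 255                        # single-pixel noise
    print(count_blobs(photo, empty))
```

A blob count is only a rough proxy for a head count: people standing close together merge into one blob, and lighting changes can create false ones, so real photos would likely need a library such as OpenCV and some tuning.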