Project 1: The Smell of…

The “The Smell of…” project tries to visualize one of the five senses: smell. The approach is very simple: collect sentences from Twitter by searching for “[thing] smells like”, and then use the words to find pictures on Flickr.

How would people describe a smell? On the negative side we have “evil-smelling, fetid, foul, foul-smelling, funky, high, malodorous, mephitic, noisome, olid, putrid, rancid, rank, reeking, stinking, strong, strong-smelling, whiffy…”; on the positive side we have “ambrosial, balmy, fragrant, odoriferous, perfumed, pungent, redolent, savory, scented, spicy, sweet, sweet-smelling…” (from searching Thesaurus.com for “smelly” and “fragrant”). However, there are far more adjectives for sight than for smell. So how do people describe smell? Most of the time we name objects we are familiar with or describe an experience, for example “WET DOG” or “it smells like a spring rain”.

In Matthieu Louis’s study “Bilateral olfactory sensory input enhances chemotaxis behavior”, the authors analysed the chemical components of odor and then visualized the odor by showing the concentration of different odor sources. In the novel “Perfume”, Patrick Süskind’s words and sentences successfully evoke readers’ imagination of all kinds of smells; moreover, Tom Tykwer visualized them so well in the film version that we almost feel we are really smelling something.

The project “The Smell of…” was developed in Processing with the controlP5 library, the Twitter API, and the Flickr API. First, users find a text field where they type in the thing whose smell they want to know.

In this case we type in “my head”.

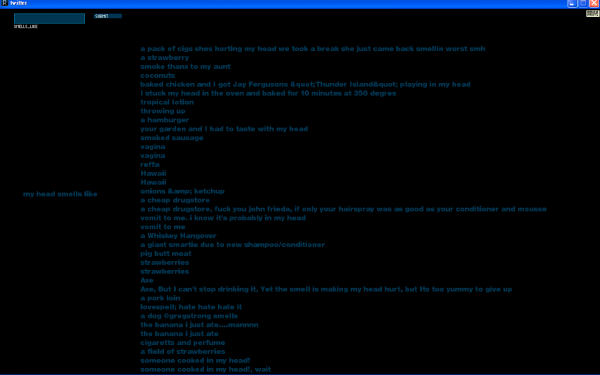

Second, after the query is submitted, the program searches Twitter for “my head smells like”. Once the data is received, we split each sentence after the word “like” and then cut the string at the period (‘. ’). This produces the following results:

Figure 2: The result of searching “my head smells like” on Twitter.com

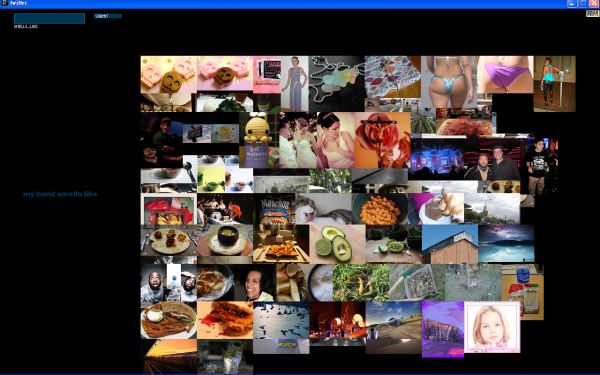
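The parsing step described above can be sketched in Java, the language Processing is built on. This is a minimal sketch, not the project's actual code: the names `SmellParser`, `buildQuery`, `extractSmell`, and `extractAll` are hypothetical, the sample tweets are made up, and it assumes tweets arrive as plain strings after the Twitter request has been handled elsewhere. Following the steps in the text, it keeps whatever follows the first whole word “like” and cuts it at the first “. ”.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class SmellParser {

    // The first whole word "like" (case-insensitive) plus the whitespace after it.
    private static final Pattern LIKE =
        Pattern.compile("\\blike\\s+", Pattern.CASE_INSENSITIVE);

    // Hypothetical helper: turns the text-field input ("my head")
    // into the phrase sent to the Twitter search ("my head smells like").
    static String buildQuery(String subject) {
        return subject.trim() + " smells like";
    }

    // Returns the text after the first "like", cut at the first ". ",
    // or null when there is no "like" or nothing follows it.
    static String extractSmell(String tweet) {
        Matcher m = LIKE.matcher(tweet);
        if (!m.find()) {
            return null;
        }
        String rest = tweet.substring(m.end());
        int periodAt = rest.indexOf(". ");
        if (periodAt >= 0) {
            rest = rest.substring(0, periodAt);           // cut at the first ". "
        } else if (rest.endsWith(".")) {
            rest = rest.substring(0, rest.length() - 1);  // drop a trailing period
        }
        rest = rest.trim();
        return rest.isEmpty() ? null : rest;
    }

    // Applies extractSmell to every tweet and keeps the non-null results.
    static List<String> extractAll(List<String> tweets) {
        List<String> smells = new ArrayList<>();
        for (String tweet : tweets) {
            String smell = extractSmell(tweet);
            if (smell != null) {
                smells.add(smell);
            }
        }
        return smells;
    }

    public static void main(String[] args) {
        System.out.println(buildQuery("my head"));  // my head smells like
        List<String> tweets = Arrays.asList(        // made-up sample tweets
            "Ugh, my head smells like silly putty. Not sure why.",
            "my head smells like a campfire",
            "no match here");
        System.out.println(extractAll(tweets));     // [silly putty, a campfire]
    }
}
```

Because it takes the first “like”, a tweet such as “I like it, my head smells like rain” would yield the wrong phrase; splitting on the full query phrase instead is one possible refinement.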

Third, the program uses these words or sentences as tags to find images on Flickr.com:

Figure 3: The resulting image set from Flickr.com

So here is the image of “my head”.
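The post does not say which Flickr endpoint was used. As an assumption, this sketch builds a request for the Flickr REST method `flickr.photos.search`, passing one extracted phrase as the `tags` parameter and asking for JSON. `YOUR_API_KEY` is a placeholder, the class and method names are hypothetical, and the network request and JSON parsing are left out. It also shows how a photo URL can be assembled from the `server`, `id`, and `secret` fields of a search result, using the 150 px square (`_q`) size.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

public class FlickrQuery {

    static final String REST_ENDPOINT = "https://api.flickr.com/services/rest/";

    // URL-encodes a query value (spaces become '+').
    static String encode(String value) {
        try {
            return URLEncoder.encode(value, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }

    // Builds a flickr.photos.search request that uses one phrase as the tag.
    static String searchUrl(String apiKey, String tag, int perPage) {
        return REST_ENDPOINT
            + "?method=flickr.photos.search"
            + "&api_key=" + encode(apiKey)
            + "&tags=" + encode(tag)
            + "&per_page=" + perPage
            + "&format=json&nojsoncallback=1";
    }

    // Assembles a 150 px square image URL from fields of a search result.
    static String photoUrl(String serverId, String photoId, String secret) {
        return "https://live.staticflickr.com/" + serverId + "/"
            + photoId + "_" + secret + "_q.jpg";
    }

    public static void main(String[] args) {
        System.out.println(searchUrl("YOUR_API_KEY", "silly putty", 20));
    }
}
```

Flickr tags rarely contain spaces, so multi-word phrases may match few photos; the `text` parameter of the same method, which searches titles, descriptions, and tags, is an alternative worth trying.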

For now I haven’t done any image processing, so all the pictures are raw. In a later version I would like to try averaging the color of every photo and presenting the result in a more organic form, like smoke or bubbles. Also, the tweets we found are interesting, so maybe we can keep the text in some way.
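Averaging the color of each photo, proposed here as future work, could start from something like the following minimal sketch. It assumes the image is already decoded into packed ARGB `int` pixels (the layout used by Processing's `PImage.pixels` and Java's `BufferedImage.getRGB`); the class and method names are hypothetical, alpha is ignored, and integer division truncates each channel.

```java
public class AverageColor {

    // Averages packed ARGB pixels (0xAARRGGBB) channel by channel.
    // Alpha is ignored and the returned color is fully opaque.
    static int averageColor(int[] pixels) {
        if (pixels.length == 0) {
            throw new IllegalArgumentException("no pixels to average");
        }
        long r = 0, g = 0, b = 0;  // long sums avoid overflow on large images
        for (int p : pixels) {
            r += (p >> 16) & 0xFF;
            g += (p >> 8) & 0xFF;
            b += p & 0xFF;
        }
        int n = pixels.length;
        return 0xFF000000
            | ((int) (r / n) << 16)
            | ((int) (g / n) << 8)
            | (int) (b / n);
    }

    public static void main(String[] args) {
        int[] pixels = {0xFFFF0000, 0xFF0000FF};  // one red and one blue pixel
        System.out.printf("%08X%n", averageColor(pixels));  // FF7F007F
    }
}
```

Passing the per-photo averages back into `averageColor` gives a single color for the whole result set, which could then drive a smoke- or bubble-like rendering.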

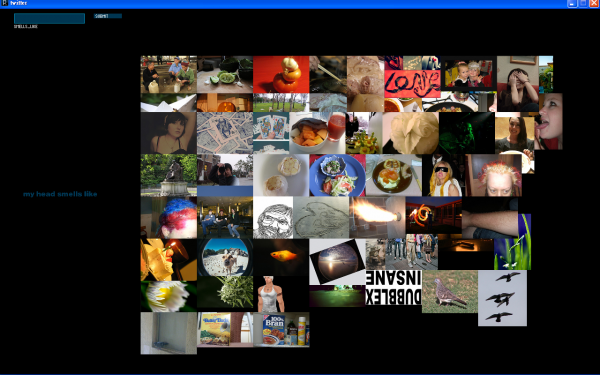

Figure 4: The smell of “my hand”

Hi Kuanju, here are the group’s comments from the PiratePad from today’s crit:

—————————————

“Some kind of science.” I like that quote!

You might want to look into visualizing smells through neurological reactions (MRI and other forms of digital radiology). I know it’s not what you did but you might find it interesting. 🙂

I like the relationships this exposes. Roses smell like…a picture of cinnamon buns? A lot of strong technical work and synthesis clearly went into this as well; it pleases me as an engineer. A little more info about the source of each result might allow more fun exploration and conjecture. Simple geographical info on the user, perhaps? Or an avatar photo? (“Whose keyboard smells like chicken? And why?”) -SB

How did you make sure the smell was actually linked to the word you typed? I’d love to see a more abstract visualization using the images.

I like the idea of bridging senses. I did a quick Google search and found this page on synesthesia. Interesting: http://web.mit.edu/synesthesia/www/synesthesia.html

The interface is a little hard to see on the projector.

I agree, the text is a bit difficult to differentiate from the background.

It would be cool to be able to see what the tweet was.

Smell is a nice choice, interesting. Why not use Google search instead of Twitter?

This is a good project. I like that it isn’t about what an object actually smells like, but often what people are remarking that it smells like, and are possibly surprised by.

So what happens when you type in things like “envy smells like” and “beauty smells like”: intangible things that really don’t have a smell but that do have associations? Interesting project.

Yay, Processing+++! Yay, smells! “This is your nose, this is the input”–hahahahaha.

I think some of the data you got out of this is really, really funny. I like the use of Twitter and Flickr for your project (parsing: ambitious!). Maybe you could have used Google as well and taken the first 50-ish results? However, I agree with some of the other comments: when you type in “head” and get out “Silly Putty” as one of the answers, you wonder what the relevance of this data is. Still, it is interesting. It is a little buggy with plural words, but that’s an easy code fix. Another suggestion: maybe include filters, at least age and gender. I think including these filters would make your data more useful (perhaps especially to marketers, since smell is our second keenest sense: we remember through our sense of smell as well as or better than we do visually). -Amanda

Along the lines of what Golan said: some sort of intelligence in the sorting that allows some visual hierarchy, so you can determine which images should be given more importance, would make it easier on the viewer than seeing a large number of equal-hierarchy images. Although personally I find the large array of images very engaging, and I want to continue investigating the grid for new words.