There are many great hacks for the Kinect. Once we can get the depth image from the camera, we can do a lot of interesting things with that data. At first, I simply thought that traditional input methods are too boring to use, so why not turn everyday things into input devices? How could we make this work? Then I found a video:

But I wanted to make a more customized user interface, one in which users can pick different objects to act as the interface. However, I soon found that this was a very big project, so I only implemented the touch part and built a very simple color picker application on top of it.

Then I began to build the environment.

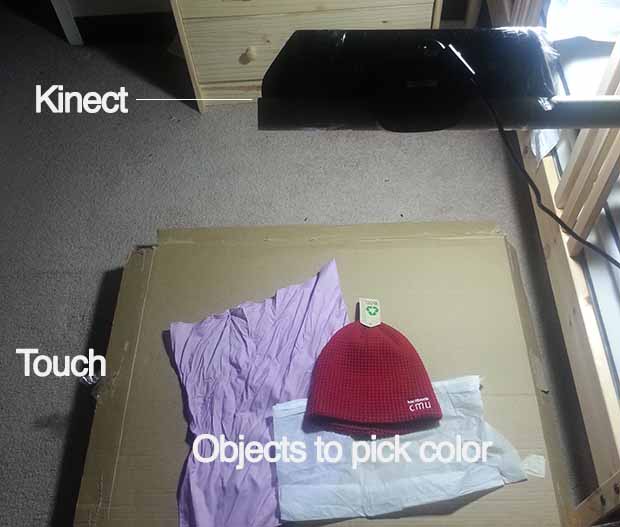

At the top is the Kinect, fixed about 70 cm above the surface. The surface is cardboard, and on it are the objects to pick colors from.

This is the video for the project:

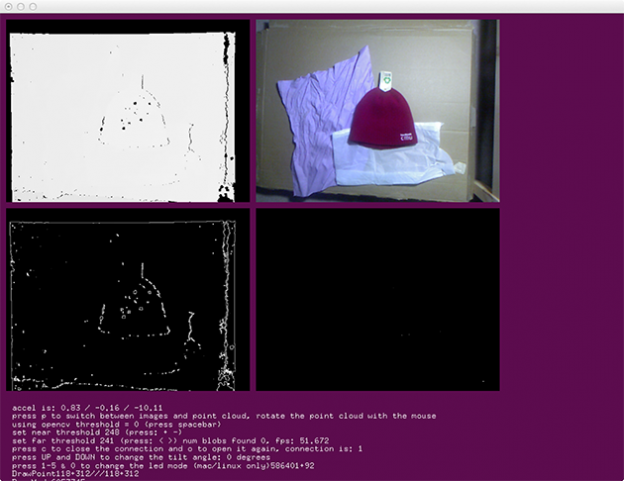

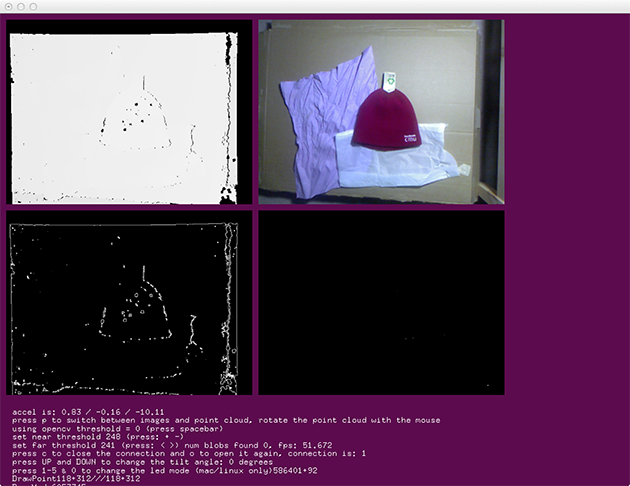

The touch part is a little technical, since it relies on some computer vision.

First, I capture the first 50 depth frames and average them. This average serves as the background information for later use.
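A minimal sketch of the background step in Python with NumPy, assuming each frame is a 2-D array of depth values in millimetres and that a value of 0 marks a pixel with no depth reading (as Kinect drivers commonly report). The function name `build_background` is hypothetical, not taken from the original project.

```python
import numpy as np


def build_background(frames):
    """Average a sequence of depth frames (e.g. the first 50) into a background model.

    Assumption: 0 means "no reading", so those samples are excluded from the average.
    """
    stack = np.stack([np.asarray(f, dtype=np.float64) for f in frames])
    valid = stack > 0                              # True where the sensor returned a depth
    counts = valid.sum(axis=0)                     # number of valid samples per pixel
    sums = np.where(valid, stack, 0.0).sum(axis=0)
    # Divide only where at least one valid sample exists; other pixels stay 0.
    return np.divide(sums, counts, out=np.zeros_like(sums), where=counts > 0)
```

Usage would look like `background = build_background([grab_depth_frame() for _ in range(50)])`, where `grab_depth_frame` stands in for whatever call your Kinect driver provides. Skipping zero readings keeps dropouts at object edges from pulling the average toward the camera.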

Second, when new things enter the Kinect's range, the program computes the difference between the background information and the current frame. Before computing that difference, it applies a noise-reduction step to the current frame.
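The post does not name the noise-reduction technique, so this sketch assumes a 3x3 median filter, a common choice for removing isolated spikes in depth images. Because the Kinect looks down at the surface, anything placed on it is closer to the camera, so `background - current` gives each pixel's height above the surface. The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median3x3(depth):
    """Assumed denoising step: replace each pixel with the median of its 3x3 neighbourhood."""
    padded = np.pad(depth, 1, mode="edge")
    windows = sliding_window_view(padded, (3, 3))  # shape (H, W, 3, 3)
    return np.median(windows, axis=(-2, -1))


def foreground_height(background, frame):
    """Height (mm) of each pixel above the background; positive means closer to the camera."""
    smoothed = median3x3(np.asarray(frame, dtype=np.float64))
    height = background - smoothed
    # Pixels with no reading in either image carry no usable difference.
    height[(smoothed == 0) | (background == 0)] = 0
    return height
```

A median is used here rather than a mean because a single bad depth sample does not shift the median of its neighbourhood, whereas it would skew an average.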

Third, the program looks for the fingertip within a certain depth range (which depends on how high the Kinect is above the surface) and gets the fingertip's location.
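One simple way to sketch the fingertip step, assuming a single finger and the height map from the previous step: keep only pixels whose height falls in a narrow "touch band" just above the surface (the 5 to 25 mm defaults are illustrative guesses, to be tuned for the mounting height), then take the centroid of those pixels. The rest of the hand and arm sit higher and fall outside the band. This centroid approach is a stand-in, not necessarily the author's method, and `find_touch_point` is a hypothetical name.

```python
import numpy as np


def find_touch_point(height, min_mm=5.0, max_mm=25.0, min_pixels=4):
    """Return (row, col) of a touching fingertip, or None if nothing is in the touch band.

    height: per-pixel height above the background in mm.
    min_pixels: minimum blob size, used to reject leftover noise.
    """
    mask = (height >= min_mm) & (height <= max_mm)
    if mask.sum() < min_pixels:
        return None
    rows, cols = np.nonzero(mask)
    # Centroid of the in-band pixels; assumes only one fingertip is touching.
    return int(round(rows.mean())), int(round(cols.mean()))
```

With several fingers touching at once, the centroid would land between them; handling that would need connected-component labelling to separate the blobs.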

For the color picker, I simply use that location to look up the color in the RGB image from the Kinect's RGB camera.
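A sketch of the color lookup, assuming the RGB image is already registered (aligned) to the depth image, for example by the driver. The Kinect's depth and RGB cameras sit a few centimetres apart, so without registration the same pixel coordinates do not point at exactly the same spot. If the two images differ in resolution, this sketch scales the coordinates linearly. Averaging a small patch (the `radius` parameter, an assumption) makes the picked color less sensitive to sensor noise. `pick_color` is a hypothetical name.

```python
import numpy as np


def pick_color(rgb, point, depth_shape, radius=2):
    """Average (R, G, B) of a small patch around a depth-image point.

    rgb: H x W x 3 uint8 image, assumed registered to the depth image.
    point: (row, col) in depth-image coordinates.
    depth_shape: (rows, cols) of the depth image, used to scale coordinates.
    """
    row, col = point
    h, w = rgb.shape[:2]
    r = min(int(row * h / depth_shape[0]), h - 1)
    c = min(int(col * w / depth_shape[1]), w - 1)
    patch = rgb[max(r - radius, 0):r + radius + 1, max(c - radius, 0):c + radius + 1]
    mean = patch.reshape(-1, 3).mean(axis=0).round()
    return tuple(int(v) for v in mean)
```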

For my final project, I may continue to build something based on this.