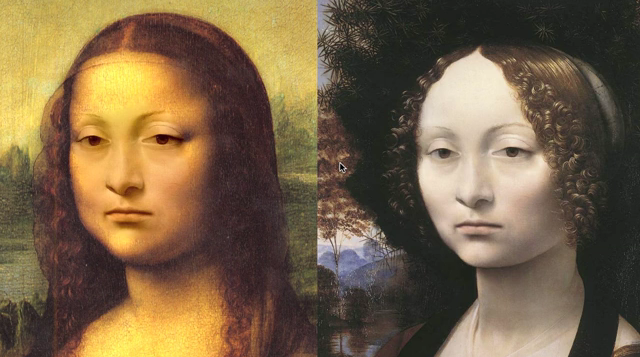

SeeStorm

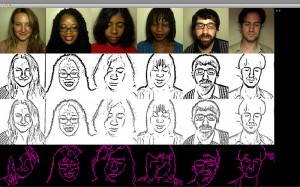

SeeStorm uses computer vision to produce synthetic video with 3D talking avatars. Beyond plain real video, users can choose their look for each talk. User-generated content (UGC) can be created from a photo and a voice. It's a new mode of fun, personalization, and visual communication. Its Voice-to-Video Transcoder converts voice into video, and the platform is aimed at next-level revenue-generating services.

Obvious Engineering

Obvious Engineering is a computer vision research, development and content creation company with a focus on surface and object recognition and tracking.

Our first product is the Obvious Engine, a vision-based augmented reality engine for games companies, retailers, developers and brands. The engine can track the natural features of a surface, which means you no longer have to use traditional markers and glyphs to position content and interaction within physical space.

The engine now works with a selection of 3D objects. It’s perfect for creating engaging, interactive experiences that blur the line between real and unreal. And there’s no need to modify existing objects – the object is the trigger.

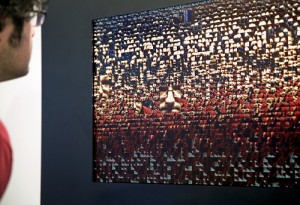

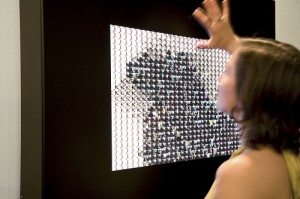

MultiTouch

MultiTouch technology identifies and responds to the movement of hands, whereas other multitouch techniques merely see points of contact. It is a good way to bring computer vision to the multitouch screen.