For my second-to-last project, I built a somewhat crude drawing application using Synapse, a Kinect and openFrameworks. For my final project, I intend to expand on this tool to make it (a) better and (b) more kick-ass. I’m still piecing together the interaction and implementation details, but for now, here’s some nice prior art.

John

06 Mar 2013

Fuck You Asshole

Okay, Terminator is not really a piece of art utilizing computer vision, but I still think it’s relevant because film was/is one of the primary vectors through which people think about machines that see/comprehend/respond to their environments. The seminal machine here is probably HAL 9000 from 2001: A Space Odyssey, but Terminators 1 & 2, RoboCop and others are heavy hitters in the collective consciousness vis-a-vis computer vision. Basically, everything I know about computers and the millennium I learned from Hollywood.

Computer Vision for Personal Defense

Okay, again, not really art, but nevertheless relevant to the discussion. The above video demos an automated water gun built with OpenCV and Python. What are the implications of technologies like this wrt the wholesale slaughter of humans by machines? First they came for the squirrels and I didn’t speak…

Minecraft Hack w/ Kinect

Because awesome, that’s why.

Nathan

06 Mar 2013

Idea 1

So for my project I want to create a game about smooshing and competition. What I am contemplating is a tangible controller that consists of 2 buttons that you hit when prompted. It is a very simple ‘button’-based game, and I think the buttons will flank your computer (so you can hit them with both hands and have to look back and forth). The game would be played in groups, and the most fun part is a display (probably an LCD or something) that shows your score based on speed and accuracy.

I love to encourage social interaction via games because I think we lost something with local gaming along the way.

Idea 2 (maybe 1?)

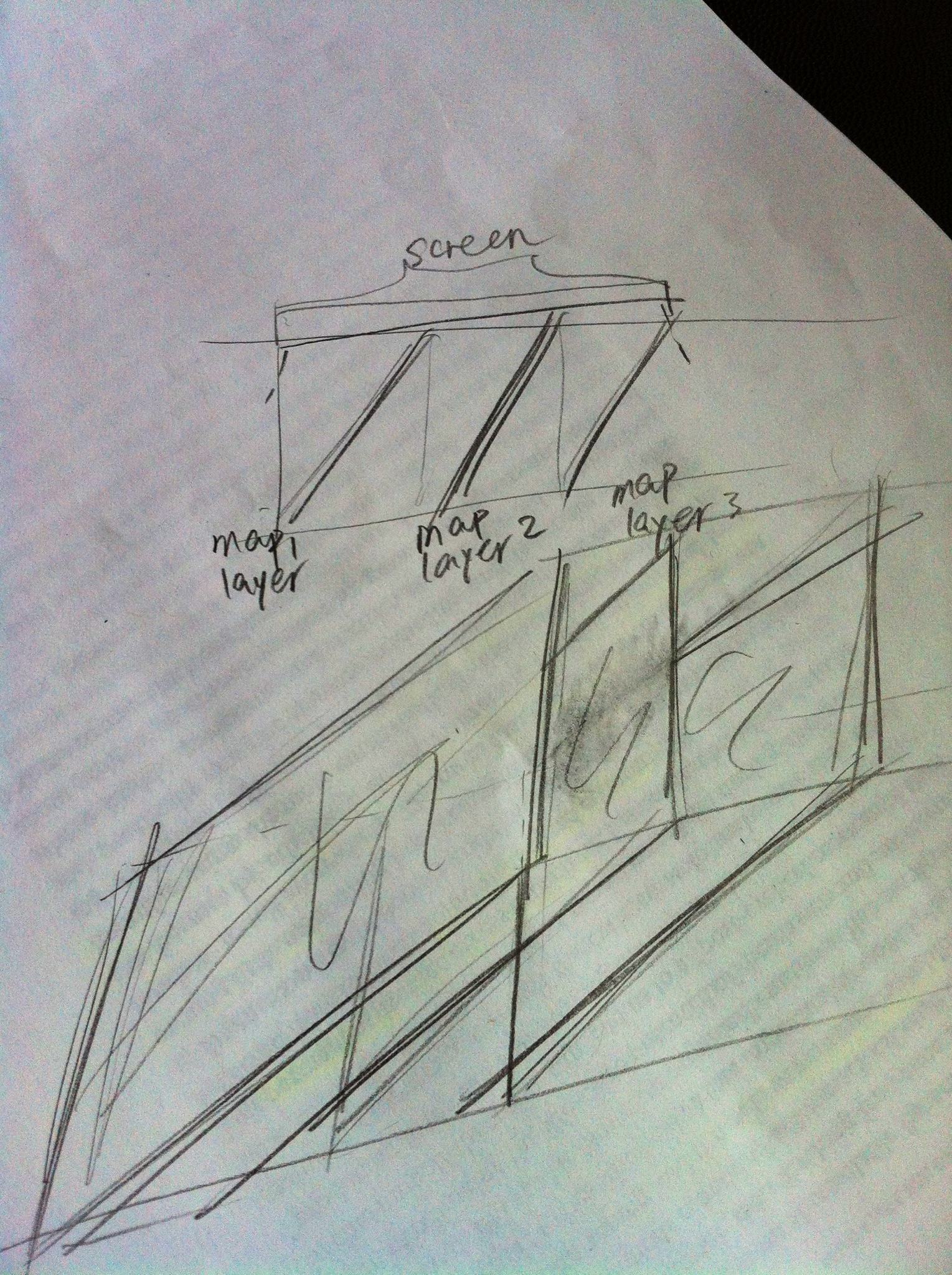

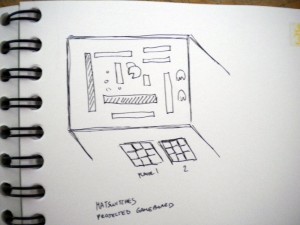

My original idea was an interactive board game. I had no real concept for the game other than wanting to make a board game more “digital and interactive”. I have been making board games for about 5 years now, and I really love the idea of building a place for people to interact. This game was going to be more in the style of Betrayal at House on the Hill or Level 7, where every person controls a certain character and interacts with an environment that is the game board.

Meng

06 Mar 2013

Joshua

06 Mar 2013

I am interested in using the Kinect to manipulate 3D meshes or surfaces in a program like Rhinoceros or Blender. There are plenty of motion capture projects out there, but I am more interested in taking hand gestures and mapping them to various 3D modeling commands. The Leap Motion might be much better suited to this, but because I have never done anything involving hand tracking, perhaps a good first step would be using the Kinect.

I think it would be interesting to track fingertips in 3D space and connect them with a curve in the modeling environment. As the hand moves around, the curve would sweep out a surface. I want to computationally alter that surface according to the velocity or even the acceleration of the points. The surface could become more convoluted, spiky or maybe perforated. A sculpture made from this 3D model would therefore contain not only visual information about the path of the fingertips, but also about their motion (velocity and acceleration).
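One way to picture the “alter the surface by velocity” step is a rough Python sketch. It assumes the fingertip positions arrive as a NumPy array of shape (frames, fingertips, 3), sampled every `dt` seconds. The names `swept_surface`, `k_vel` and `k_acc` are hypothetical, and nothing here uses the Rhino or Blender APIs. Each frame’s fingertip curve becomes one row of a vertex grid. Velocity and acceleration are estimated with finite differences, and each vertex is pushed along the surface normal in proportion to how fast that point was moving, so faster gestures produce spikier geometry.

```python
import numpy as np

def swept_surface(frames, dt, k_vel=0.01, k_acc=0.0):
    """Displace a swept fingertip surface by motion (hypothetical helper).

    frames: array (T, F, 3) of fingertip positions, T frames x F fingertips.
    Returns a (T, F, 3) vertex grid pushed along the surface normals.
    """
    P = np.asarray(frames, dtype=float)
    vel = np.gradient(P, dt, axis=0)          # finite-difference velocity
    acc = np.gradient(vel, dt, axis=0)        # finite-difference acceleration
    speed = np.linalg.norm(vel, axis=2)       # (T, F)
    accel = np.linalg.norm(acc, axis=2)       # (T, F)

    # Tangents along the sweep (time) and along the fingertip curve.
    du = np.gradient(P, axis=0)
    dv = np.gradient(P, axis=1)
    n = np.cross(du, dv)
    length = np.linalg.norm(n, axis=2, keepdims=True)
    # Unit normals; degenerate spots (no sweep) get a zero normal.
    n = np.divide(n, length, out=np.zeros_like(n), where=length > 1e-12)

    offset = k_vel * speed + k_acc * accel    # assumed linear mapping
    return P + n * offset[..., None]
```

A linear mapping is just the simplest choice. The offset could just as well drive a noise amplitude or a perforation density, which would give the “convoluted” or “perforated” variants.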

kind of like this (but hopefully smoother, and computationally altered):

Of course I also just want to continue working on the truss genetic algorithm.

Yvonne

06 Mar 2013

My idea is to project a game (probably my version of Pac-Man) onto a wall. On the wall will be elements a user can rearrange to create different maze configurations. A camera (maybe a Kinect) would be used to sense the objects in space and generate a 2D collision map. Mat switches on the ground will be used to control the projected character and move it through the physical maze.
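The collision-map idea can be sketched in Python under one assumption: the camera image has already been turned into a binary mask (True wherever a maze object is seen, for example by thresholding Kinect depth). The mask is then divided into square cells, and a cell counts as a wall if enough of its pixels are occupied. `collision_map`, `step`, the cell size and the fill threshold are all hypothetical choices. The mat switches would simply feed the direction names into `step`.

```python
import numpy as np

def collision_map(mask, cell=16, fill=0.3):
    """Downsample a binary camera mask (H, W) into a wall grid.

    A grid cell is a wall when at least `fill` of its pixels are occupied.
    """
    m = np.asarray(mask, dtype=bool)
    rows, cols = m.shape[0] // cell, m.shape[1] // cell
    m = m[:rows * cell, :cols * cell]                 # drop partial cells
    blocks = m.reshape(rows, cell, cols, cell)
    return blocks.mean(axis=(1, 3)) >= fill           # (rows, cols) bools

# Hypothetical mapping from mat switches to grid moves.
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

def step(grid, pos, direction):
    """Move one cell unless the target is off the grid or a wall."""
    dr, dc = MOVES[direction]
    r, c = pos[0] + dr, pos[1] + dc
    if 0 <= r < grid.shape[0] and 0 <= c < grid.shape[1] and not grid[r, c]:
        return (r, c)
    return pos
```

A coarse grid keeps the projected maze readable and makes the collision test trivial. The trade-off is that thin or oddly shaped objects may fall below the fill threshold.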

Elwin

06 Mar 2013

I have a couple of random ideas for this project. Not sure which one I should do yet.

Face-away

This idea doesn’t really have a purpose. It’s more experimental and artsy, I guess. Imagine a panel sticking out of the wall that can rotate on the x- and y-axes. The panel reacts to a person and rotates away from the person’s head, facing away from the user. For example, if the user moves to the right, the panel rotates to the left on the y-axis, and vice versa if the user moves to the left. The same inverse movement occurs when the user tries to look at the panel from above or below.

**sketch image coming very very soon**

Possible implementation:

– I could use either a webcam or a Kinect to track the user. I think the most important part is the ability to track the location of a person’s head. If I use the Kinect, I should be able to get the head position from the skeleton (I’ve never worked with the Kinect). I could also use blob detection with the webcam from a top-down view, but I don’t think it would be accurate enough. Perhaps a better and easier method would be to use FaceOSC to track the head. I would have to place the camera so that it can see and capture the face from all angles.

– For rotating the panel, I could use two servo or stepper motors, one for each axis. These shouldn’t be hard to implement.
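The “rotate away” behaviour reduces to a little trigonometry, sketched here in Python. The sketch assumes the tracker reports the head position relative to the panel centre (x to the right, y up, z out from the wall). It also assumes the panel should turn by the opposite of the angle at which it sees the head, clamped to a hypothetical ±60° mechanical limit. `face_away_angles`, `to_servo` and the 90° servo centre are illustrative assumptions, not taken from any particular motor library.

```python
import math

def face_away_angles(head_x, head_y, head_z, limit_deg=60.0):
    """Return (yaw, pitch) in degrees that turn the panel away from the head.

    Head position is relative to the panel centre: x right, y up,
    z pointing out from the wall towards the viewer.
    """
    yaw_to_head = math.degrees(math.atan2(head_x, head_z))
    pitch_to_head = math.degrees(math.atan2(head_y, head_z))

    def clamp(a):
        return max(-limit_deg, min(limit_deg, a))

    # Inverse movement: head to the right -> panel turns left, and so on.
    return clamp(-yaw_to_head), clamp(-pitch_to_head)

def to_servo(angle_deg, centre=90.0):
    """Map a signed angle onto a typical 0-180 degree hobby-servo command."""
    return int(round(centre + angle_deg))
```

For example, a head 1 m to the right and 1 m out from the wall gives a yaw of about -45°, which becomes a servo command of 45. Stepper motors would need the angle converted to a step count instead.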

???

For now there’s nothing to see on the panel. It could just be a plain piece of material such as wood or acrylic. But I’m not sure if that’s interesting enough, or if I should come up with something to display on the panel.

Bueno

06 Mar 2013

So, Caroline and I have decided to collaborate on a project together.

A few inspirations and references we hope to draw from for this:

- Here is an awesome article about how virtual reality does and does not create presence.

- Virtual presence through a map interface.

- Making sense of maps.

- Obsessively documenting where you are and what you are doing. Surveillance.

- Gandhi in Second Life.

- Camille Utterback’s Liquid Time.

- Love of listening to other people’s stories.

- Archiving virtual worlds

A few artistic photographs of decayed places:

Our thoughts concerning all this centered on Carnegie Mellon and “making your mark”. People really just pass through places, and there is a kind of nostalgia in observing that fact. Furthermore, what “scrubs” places of our presence is just other people. There is a fantastic efficiency in the way human beings repurpose/re-experience space so that it becomes personalized for them. We conceived of our project as one where people give monologues and tell stories that can be represented geographically using Google Maps. Their act of retelling would also be a retracing of their route.

Some technically helpful links:

- Guy who used Street View and has code on GitHub: http://notlion.github.com/streetview-stereographic/#o=-.448,.479,-.054,.753&z=1.361&mz=18&p=23.65276,119.51718

- ASCII art Street View: http://tllabs.io/asciistreetview/

- Sound scrubbing library for Processing.

Here is a video sketch I did of me “walking” through a little personal story about my car incident on Google Maps. This example video is of really crappy quality, so I apologize in advance. I was making it in kind of a rush and didn’t have time to figure out proper compression. I will upload a better version later.

Sequence 01 from Andrew Bueno on Vimeo.

Caroline

06 Mar 2013

Inspiration/ References:

- Here is an awesome article about how virtual reality does and does not create presence.

- Virtual presence through a map interface.

- Making sense of maps.

- Obsessively documenting where you are and what you are doing. Surveillance.

- Gandhi in Second Life.

- Camille Utterback’s Liquid Time.

- (Bueno) Ah, could you imagine what it would be like to have a video recording spanning years?

- Love of listening to other people’s stories.

- (Bueno) Archiving virtual worlds

Thoughts:

- Carnegie Mellon and “making your mark”. People really just pass through places, and I think there is a kind of nostalgia in that.

- (Bueno) I think an addendum to that thought is that what “scrubs” places of our presence is really just other people. Sure, nature reclaims everything eventually, but there is a fantastic efficiency in the way human beings repurpose/re-experience space.

- Artistic photos of old places

Technically Helpful:

- Guy who used Street View and has code on GitHub: http://notlion.github.com/streetview-stereographic/#o=-.448,.479,-.054,.753&z=1.361&mz=18&p=23.65276,119.51718

- ASCII art Street View: http://tllabs.io/asciistreetview/

- Sound scrubbing library for Processing.

Andy

06 Mar 2013

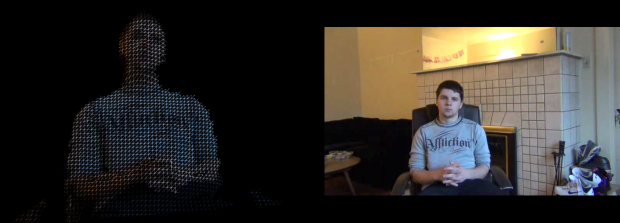

So for better or for worse, I think one of my greatest sources of inspiration is learning how to use a new tool and then demonstrating proficiency with it. For my current idea for the interaction project, perhaps two tools. My basic idea is pretty simple – I want to take real objects and put them into video games. Below is my first attempt to do so – a depth map of my body sitting in a chair, which I was able to import into Unity via the RGBD system.

Here is the image in the intermediate steps, perhaps you can see it better this way:

So we are a long way away from a good-looking representation, much less the possibility of recombining depth maps to get true 3D objects in Unity (an idea), but I really want to become more proficient in Unity as well as spend some time with RGBD, so I like the idea of playing around and seeing what I can make possible in a space that is (to my knowledge) largely unexplored.
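Getting from a depth map toward real 3D objects usually starts with pinhole back-projection, which turns each depth pixel into a 3D point. Here is a minimal Python sketch of that step. It assumes depth in millimetres with 0 meaning “no reading”. It also assumes default intrinsics (`fx`, `fy`, `cx`, `cy`) that are commonly quoted approximations for the original Kinect’s 640×480 depth camera; a real setup should use calibrated values. `depth_to_points` is a hypothetical helper and is not meant to describe what the RGBD toolkit or Unity do internally.

```python
import numpy as np

def depth_to_points(depth_mm, fx=525.0, fy=525.0, cx=319.5, cy=239.5):
    """Back-project a depth image (H, W) in millimetres to an (N, 3) point cloud.

    Uses the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with depth 0 (no reading) are skipped.
    """
    d = np.asarray(depth_mm, dtype=float) / 1000.0   # millimetres -> metres
    h, w = d.shape
    v, u = np.mgrid[0:h, 0:w]                        # row (v) and column (u) indices
    valid = d > 0
    z = d[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.column_stack((x, y, z))
```

Point clouds captured from several viewpoints, once aligned, are what would eventually be merged and meshed into the “true 3D objects” mentioned above.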

WHERE IS THE ART?

Definitely a question on my mind. The super-cool idea I have is to use RGBD and augmented reality to create levels for a video game from the objects around my house, recording the 3D surfaces and assigning spawn points/enemy locations/other stuff based on AR symbols that I can place in the scene. The result could be a (hopefully) cool and creative hybridization of tabletop and video games, allowing users to create their own worlds and then play them.

I’m also curious to see who shoots the first sex tape in RGBD, but I don’t think I want to be that guy.