I hope you’ll pardon me for writing about work that I’m already familiar with and that may not particularly be categorized as art… I’ve been in New York since Monday morning, and unfortunately Megabus’s promise of even infrequently functional internet is a pack of lies. My bus gets in at midnight, so to avoid staying up too late and risking sleeping through my alarms, I’m writing the draft mostly from memory and will correct it later.

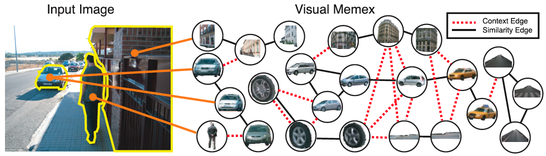

Professor Efros is tackling a variety of research projects that address the fact that we have an immense amount of data at our fingertips, in the form of publicly available images on Flickr and other sites, but relatively few powerful ways of using it. What I find unique about the main thrust of his research is that it acknowledges that categorization (which is very common in computer vision) is not a goal in and of itself, but just one simple method of knowledge transfer. Thus, instead of asking “what is this?”, we may wish to ask “what is it like, or what is it associated with?” For example, it is very easy to detect coffee mugs if you assume a toy world where every mug is identical in shape and color. It is somewhat more difficult to identify coffee mugs if the images contain the gallon-size vessels you can get from 7-Eleven, the little cups they use at Taza d’Oro, and the weird handmade thing your kid brings home from pottery class. It is more difficult still to actually associate a coffee mug with coffee itself. In general, I’m attracted to Professor Efros’s work because it draws its power from the massive amount of data available in publicly sourced images, and because it builds on a variety of well-known image processing techniques.

This may not be a fair example, but I want to share it for those who aren’t familiar with it. GigaPan is a project out of the CREATE Lab that consists of a robotic pan-tilt camera mount and intelligent stitching software, which together allow anyone to capture panoramic images with multi-gigapixel resolution. The camera mount itself is relatively low-cost and will work with practically any small digital camera that has a shutter button. The stitching software is advanced, but its user interface is simple enough that almost anyone can use it. We send these units all over the globe and are constantly surprised by the new and unique ways people find to use them, from teachers in Uganda capturing images of their classrooms to paleontologists in Pittsburgh capturing high-resolution macro-panoramas of fossils from the Museum of Natural History. I appreciate this project because, at its core, the software is simply a very effective and efficient stitching algorithm packaged with a clever piece of hardware, yet it gets its magic from the way it is applied to let people share their environment and culture.

Google Goggles is an Android app that lets the user take a picture of an object in order to perform a Google search. It is unclear to me how much of the computer vision is performed on the phone and how much on Google’s servers, but my impression is that most of it is done on the phone itself. At the very least, the techniques employed include feature classification and optical character recognition (OCR) for analyzing text. The app does not seem to have found widespread use, but I still find it an interesting direction for the future because it could make QR codes obsolete. Part of me hates to rag on QR codes, because I’ve seen them used cleverly, but I feel like most of the time they simply serve as bait: whenever people see a QR code, they want to scan it regardless of the content, because people love to flex their technology for technology’s sake. I think people might use Google Goggles more naturally than QR codes, since they would reach for it only when a picture is actually the easier route; in some instances, it is simply easier to search a short bit of text than to take a picture.