Sifteo Cube Gigaviewer

This one’s fairly straightforward. I really liked what I did with the Sifteo cubes in Project 1, and I’d like to expand on it so that the cubes can be used to explore very high-resolution images from an ant-on-a-page perspective. Since Project 1, I’ve written some code that automatically chops images into Sifteo-sized pieces and then rewrites the Lua file accordingly. This project would involve packaging all of that up and, ideally, using the upcoming Sifteo USB connection to upload a new high-resolution image every day. That way, the cubes could serve as a self-updating installation in a classroom or gallery.
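The chopping step could look roughly like the sketch below. It assumes 128×128 tiles (the Sifteo cube screen size) and only computes crop boxes and generates the Lua text; it is not my existing script. The file naming scheme (`tile_<row>_<col>.png`), the group name, and the `group{}`/`image{}` syntax in the generated Lua are hypothetical placeholders and may not match what the Sifteo asset tool actually expects.

```python
# Sketch: compute 128x128 crop boxes for a large image and emit a Lua asset
# script with one entry per tile.
# ASSUMPTION: the tile naming scheme and the group{}/image{} Lua syntax below
# are illustrative placeholders, not a confirmed Sifteo asset format.

TILE = 128  # Sifteo cube display is 128x128 pixels


def tile_boxes(width, height, tile=TILE):
    """Return (row, col, box) triples, where box = (left, upper, right, lower).

    Edge tiles are clipped to the image bounds, so they may be smaller than
    tile x tile and would need padding before use as cube assets.
    """
    boxes = []
    for row, top in enumerate(range(0, height, tile)):
        for col, left in enumerate(range(0, width, tile)):
            box = (left, top, min(left + tile, width), min(top + tile, height))
            boxes.append((row, col, box))
    return boxes


def lua_asset_script(boxes, group_name="GigaviewerAssets"):
    """Generate Lua text declaring one image asset per tile (hypothetical syntax)."""
    lines = [f"{group_name} = group{{}}"]
    for row, col, _ in boxes:
        name = f"Tile_{row}_{col}"
        lines.append(f'{name} = image{{"tile_{row}_{col}.png"}}')
    return "\n".join(lines) + "\n"
```

With Pillow installed, each box can be passed straight to `Image.crop(box)` and the result saved under the matching tile name. A 300×200 image, for example, yields a 3-column by 2-row grid of six tiles, the last of which covers `(256, 128, 300, 200)`.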

Here are the parts of the project, from the image to the cubes, along with my estimate of how hard each one is:

1. Get the newest image from a Dropbox folder or a Git repository (probably trivial)

2. Write a processing script that chops images and autogenerates a Lua script (pretty much done)

3. Run the processing script regularly, recompile, and re-upload to the Sifteo Base (maybe not too hard)

4. Figure out how to rotate images (should be easy… need to talk to the Sifteo people)

5. Devise a better scheme for managing asset groups within the cubes’ limited resources (tough but interesting)

6. Devise a scheme to predict which asset group will be needed next and load it in time to keep the interaction smooth (hard but very interesting, and possibly publishable)
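For steps 1 and 3, one simple way to avoid recompiling and re-uploading every day when nothing has changed is to fingerprint the newest image and rebuild only when the fingerprint differs from the last successful build. This is a minimal sketch under my own assumptions: the images land in a local synced folder, and the stamp file name is hypothetical. The actual compile and upload commands depend on the Sifteo SDK and are left out.

```python
import hashlib
from pathlib import Path


def file_digest(path):
    """SHA-256 of a file, read in chunks so large images don't fill memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def newest_image(folder, exts=(".png", ".jpg", ".jpeg")):
    """Most recently modified image in `folder`, or None if there is none."""
    candidates = [p for p in Path(folder).iterdir() if p.suffix.lower() in exts]
    return max(candidates, key=lambda p: p.stat().st_mtime, default=None)


def needs_rebuild(image_path, stamp_path):
    """True unless the stamp file records this exact image as already built."""
    stamp = Path(stamp_path)
    return not (stamp.exists() and stamp.read_text().strip() == file_digest(image_path))


def record_build(image_path, stamp_path):
    """Call only after a successful build/upload, so failures get retried."""
    Path(stamp_path).write_text(file_digest(image_path))
```

Writing the stamp in a separate step, after the upload succeeds, means a failed run is simply retried the next time the scheduler (e.g. cron) fires.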
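For step 4, if it turns out the cubes can’t rotate images themselves, a fallback is to pre-rotate each tile at build time. The sketch below treats a tile as a hypothetical row-major grid of pixel values; it is my own illustration, not anything from the Sifteo SDK.

```python
def rotate_cw(pixels):
    """Rotate a row-major 2D pixel grid 90 degrees clockwise."""
    # Reversing the rows and then transposing gives a clockwise rotation.
    return [list(row) for row in zip(*pixels[::-1])]


def all_rotations(pixels):
    """Return the tile at 0, 90, 180 and 270 degrees clockwise."""
    out = [pixels]
    for _ in range(3):
        out.append(rotate_cw(out[-1]))
    return out
```

The catch is that storing four copies of every tile quadruples the asset size, which works directly against the limited cube resources in step 5. That trade-off is a good reason to ask the Sifteo people about rotation support first.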
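For step 5, a natural baseline is to treat the asset groups that fit on the cubes at once as a fixed number of slots and evict the least recently used group when a new one is needed. LRU is a standard caching policy I’m swapping in here as a starting point, not a Sifteo feature, and `capacity` is a hypothetical slot count that would really be set by the cubes’ memory limits.

```python
from collections import OrderedDict


class AssetGroupCache:
    """Keep at most `capacity` asset groups resident, evicting the least recently used."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.resident = OrderedDict()  # group id -> True, least recently used first

    def request(self, group):
        """Mark `group` as used. Return (was_hit, evicted_group_or_None)."""
        if group in self.resident:
            self.resident.move_to_end(group)  # now the most recently used
            return True, None
        evicted = None
        if len(self.resident) >= self.capacity:
            evicted, _ = self.resident.popitem(last=False)  # drop the oldest
        self.resident[group] = True
        return False, evicted
```

The returned eviction tells the caller which group can be unloaded before the new one is loaded; a smarter policy could replace LRU without changing this interface.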
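For step 6, the simplest predictor I can think of assumes the viewer keeps moving in the same direction: extrapolate from the last two tiles visited, then fall back to the other neighbours of the current tile. This is a hypothetical sketch in tile coordinates only; mapping tiles to asset groups and feeding the result into the cache above are left out.

```python
def predict_next(history, rows, cols):
    """Guess the next tile from the last two (row, col) positions visited.

    Assumes constant velocity; the guess is clamped to the tile grid.
    """
    if not history:
        return None
    r, c = history[-1]
    dr, dc = (r - history[-2][0], c - history[-2][1]) if len(history) >= 2 else (0, 0)
    return (min(max(r + dr, 0), rows - 1), min(max(c + dc, 0), cols - 1))


def prefetch_order(history, rows, cols):
    """Predicted tile first, then the remaining in-bounds 4-neighbours."""
    if not history:
        return []
    r, c = history[-1]
    order = []
    guess = predict_next(history, rows, cols)
    if guess != (r, c):
        order.append(guess)
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        t = (r + dr, c + dc)
        if 0 <= t[0] < rows and 0 <= t[1] < cols and t not in order:
            order.append(t)
    return order
```

For instance, a viewer who moved from tile `(2, 2)` to `(2, 3)` on a 5×5 grid would have `(2, 4)` loaded first. Anything smarter (learning from past sessions, weighting by image content) could slot in behind the same `prefetch_order` interface.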

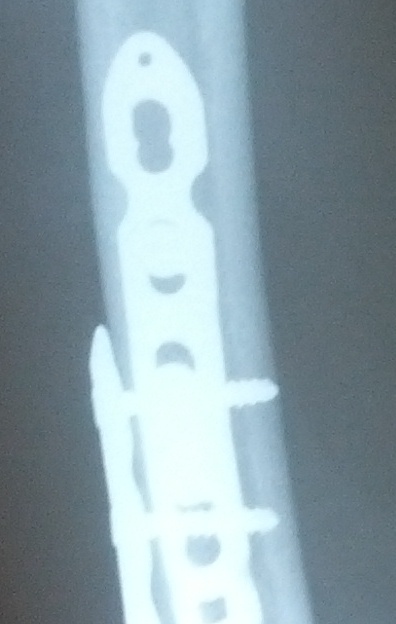

I think there’s some really cool potential here in having to piece together the “big picture” through little windows to understand what you’re looking at. For example, what’s this?

Scroll down!

It’s my arm!