This was the part of the assignment I had the most trouble with! I found the Sifteo docs only marginally helpful, and I should have foreseen this and started earlier.

I began this assignment thinking I would do something like a word game for the blind. Each block would represent a letter. When tapped, a block would say its letter aloud. If the letters were arranged in order from left to right, an affirmative sound would play and the next word would be scrambled automatically.

I was able to get the word split into letters and divided among the cubes, but when it came time to check whether the user had spelt the word correctly, I ran into a problem: I did not understand how to reference each cube individually. This caused me to spend a bunch of time looking over the SDK website, which had few answers.
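For reference, here is a rough sketch of the check I was trying to write. It is plain Python rather than Sifteo SDK code, and every name in it (`cube_letters`, `right_of`, `row_order`, `is_spelled`) is hypothetical. It assumes each cube has an integer ID and that some neighbor query can tell me which cube, if any, sits to the right of each one.

```python
def row_order(right_of):
    """Return cube IDs from left to right, or None if they do not form one row.

    right_of maps each cube ID to the ID of the cube on its right side,
    or None if nothing is attached there (a hypothetical data shape).
    """
    has_left = {r for r in right_of.values() if r is not None}
    # The leftmost cube is the only one that is nobody's right-hand neighbor.
    starts = [c for c in right_of if c not in has_left]
    if len(starts) != 1:
        return None
    order = [starts[0]]
    # Walk the chain of right-hand neighbors; the length guard stops on bad data.
    while right_of.get(order[-1]) is not None and len(order) <= len(right_of):
        order.append(right_of[order[-1]])
    return order if len(order) == len(right_of) else None


def is_spelled(cube_letters, right_of, word):
    """True when the cubes, read left to right, spell the word."""
    order = row_order(right_of)
    if order is None:
        return False
    return "".join(cube_letters[c] for c in order) == word


# Example: cube 1 shows "c", cube 0 shows "a", cube 2 shows "t".
cube_letters = {0: "a", 1: "c", 2: "t"}
in_a_row = {1: 0, 0: 2, 2: None}       # physical order: 1, 0, 2
apart = {0: None, 1: None, 2: None}    # no cubes touching
```

With the cubes lined up as 1, 0, 2 the check passes for "cat"; with the cubes apart it fails because there is no single row to read.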

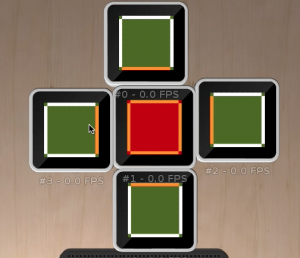

Ultimately, given the time I had left, I decided to scrap the initial idea for a simpler one that was closer to an example I fully understood: the sensors example. I decided to make use of screen colors and assign them based on cube adjacencies. At first I had some problems with the background colors, but I managed to set them in the end.

For a bit of extra interaction I decided to make use of touch. When a cube is touched, the entire color scheme changes, but the color coding is still based on adjacencies.
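The logic boils down to something like the sketch below. Again this is plain Python, not the SDK code I actually wrote, and the palettes, the color names, and the function names are made up for illustration. I am also assuming here that the color is picked by how many of a cube's four sides have a neighbor.

```python
# Two hypothetical color schemes, indexed by neighbor count (0 to 4).
PALETTES = [
    ["black", "blue", "green", "yellow", "red"],
    ["white", "cyan", "magenta", "orange", "purple"],
]


def cube_color(neighbor_count, palette_index):
    """Pick a background color from the active scheme by neighbor count."""
    palette = PALETTES[palette_index % len(PALETTES)]
    return palette[neighbor_count]


def on_touch(palette_index):
    """A touch switches every cube to the next scheme, wrapping around."""
    return (palette_index + 1) % len(PALETTES)


scheme = 0
before = cube_color(2, scheme)   # cube with two neighbors, first scheme
scheme = on_touch(scheme)
after = cube_color(2, scheme)    # same cube, same neighbors, new scheme
```

The neighbor count still decides which slot in the scheme a cube gets; touching only swaps which scheme is active.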

Overall the effect looks like it would be fun for a baby or a cat.