This is a collaboration with Can (John) Ozbay.


Z.

I combined ofxImageExportQueue and ofxWorkQueues. They go together: ofxImageExportQueue needs ofxWorkQueues in order to function. I adapted imageSequenceExample to get the .gif file working and videoGrabberExample to set up the webcam. The addons take a series of screenshots when the 't' key is pressed, and the image files are saved to the data folder inside this app's bin folder. The concept behind this openFrameworks project is that the webcam captures a person's reaction while they watch mindblown.gif. I thought it would be funny to collect different reactions, and to keep them as individual photos rather than a video.

Here’s the code: https://github.com/pattvira/ofxAddons_UpkitIntensive
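The idea of pairing an export queue with a work queue is that saving images to disk is slow, so it should happen off the drawing thread. Below is a minimal Java sketch of that pattern, not the ofxImageExportQueue or ofxWorkQueues API: the class `ImageExportQueue`, the burst size, and the `shot_0000.png` naming are assumptions made for illustration. Pressing 't' starts a burst, each frame during the burst is copied and handed to a single background writer thread.

```java
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import javax.imageio.ImageIO;

// Hypothetical stand-in for ofxImageExportQueue + ofxWorkQueues (not their API):
// frames are copied on the drawing thread and written to disk by one worker thread.
class ImageExportQueue {
    private final ExecutorService worker = Executors.newSingleThreadExecutor();
    private final File outDir;
    private final int burstSize;   // frames captured per 't' press (assumed value)
    private int framesLeft = 0;
    private int counter = 0;

    ImageExportQueue(File outDir, int burstSize) {
        this.outDir = outDir;
        this.burstSize = burstSize;
        outDir.mkdirs();
    }

    // Start a burst when 't' is pressed
    void keyPressed(char key) {
        if (key == 't') framesLeft = burstSize;
    }

    // Call once per rendered frame; queues the frame only while a burst is running
    void onFrame(BufferedImage frame) {
        if (framesLeft <= 0) return;
        framesLeft--;
        // Copy now, because the caller keeps drawing into its own buffer
        BufferedImage copy = new BufferedImage(frame.getWidth(), frame.getHeight(),
                BufferedImage.TYPE_INT_RGB);
        Graphics2D g = copy.createGraphics();
        g.drawImage(frame, 0, 0, null);
        g.dispose();
        File target = new File(outDir, String.format("shot_%04d.png", counter++));
        // The slow part (PNG encoding + disk I/O) runs on the worker thread
        worker.submit(() -> {
            try {
                ImageIO.write(copy, "png", target);
            } catch (IOException e) {
                System.err.println("could not write " + target + ": " + e.getMessage());
            }
        });
    }

    // Let pending writes finish, e.g. when the app exits
    void shutdown() {
        worker.shutdown();
        try {
            worker.awaitTermination(30, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

In an app like the one above, `outDir` would point at the app's bin/data folder, `keyPressed` would be wired to the key handler, and `onFrame` would receive each webcam frame.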

FaceOSC+Processing from kaikai on Vimeo.

This is my faceOSC+Processing experiment.

I mapped the mouthWidth and mouthHeight values from FaceOSC to RGB values in Processing and used them to draw on the canvas. The brush shape follows the width and height of your mouth, and the color changes as your mouth opens and closes. So basically, I turned the mouth into a paintbrush, and the drawings don't look too bad. :)
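The mapping step can be sketched as follows. This is a minimal Java version assuming hypothetical input ranges (mouthWidth 10 to 18, mouthHeight 1 to 7) and a hypothetical channel assignment; FaceOSC's actual values depend on the face and its distance from the camera, so the ranges would need calibrating. `map` reproduces the formula of Processing's `map(value, start1, stop1, start2, stop2)`, and the result is clamped to 0–255.

```java
class MouthBrush {
    // Same linear formula as Processing's map(value, start1, stop1, start2, stop2)
    static float map(float v, float a1, float b1, float a2, float b2) {
        return a2 + (b2 - a2) * ((v - a1) / (b1 - a1));
    }

    // Keep a channel inside 0..255 even when the face goes outside the assumed range
    static int clamp255(float v) {
        return Math.max(0, Math.min(255, Math.round(v)));
    }

    // ASSUMED input ranges; calibrate against the values FaceOSC actually sends
    static final float W_MIN = 10, W_MAX = 18;
    static final float H_MIN = 1, H_MAX = 7;

    // Returns {r, g, b}: wider mouth = more red, more open mouth = more green and less blue
    static int[] color(float mouthWidth, float mouthHeight) {
        int r = clamp255(map(mouthWidth, W_MIN, W_MAX, 0, 255));
        int g = clamp255(map(mouthHeight, H_MIN, H_MAX, 0, 255));
        int b = clamp255(map(mouthHeight, H_MIN, H_MAX, 255, 0));
        return new int[]{r, g, b};
    }
}
```

In a Processing draw loop, the returned values would go into `fill(r, g, b)` before drawing an ellipse sized by the same mouthWidth and mouthHeight.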

Text Rain, or Chinese Snow.

Made in Processing. After capturing video from the camera, I used brightness to distinguish people and objects from the background. The characters keep falling as long as the brightness at their pixel position is above a threshold.

Here’s code link: https://github.com/mengs/qiangJingJiu

PS:

There will be plenty of English text rain, so I chose one of my favorite Chinese poems, by Li Bai.
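The falling rule described above can be sketched in a few lines. This Java version is an illustration, not the code in the repo: it assumes the frame arrives as a row-major array of packed RGB ints, and it treats brightness as the largest of the red, green, and blue channels, which is what Processing's `brightness()` returns in the default 0–255 RGB color mode. The class name `RainLetter` and the wrap-to-top behavior are assumptions.

```java
class RainLetter {
    final int x;
    int y;

    RainLetter(int x, int y) {
        this.x = x;
        this.y = y;
    }

    // HSB brightness of a packed RGB color = its largest channel (0..255)
    static int brightness(int rgb) {
        int r = (rgb >> 16) & 0xFF, g = (rgb >> 8) & 0xFF, b = rgb & 0xFF;
        return Math.max(r, Math.max(g, b));
    }

    // frame is row-major packed RGB with width w and height h
    void update(int[] frame, int w, int h, int threshold, int speed) {
        int below = Math.min(y + 1, h - 1);          // pixel just under the character
        if (brightness(frame[below * w + x]) > threshold) {
            y += speed;                              // bright background: keep falling
            if (y >= h) y = 0;                       // wrap back to the top
        }
        // otherwise a dark person/object is underneath, so the character rests on it
    }
}
```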

I am honestly ashamed to post this homework, but I respect deadlines, so here it goes. I tried working with many addons, including fancy deluxe packages such as ofxTimeline. Somehow, I wasn't compiling them right, and whenever they did compile, they wouldn't talk to one another. This example is a very early work in progress. I'd like to draw a shape, triangulate it, and animate the mesh via ofxAnimate. So far, I've only managed to draw, animate the outline, and have two rectangles come back to the mouse (and even that's an accidental hack). The app crashes where the drawings are clustered. https://github.com/tchoi8/IACD/tree/master/Animatable_Triangle_2

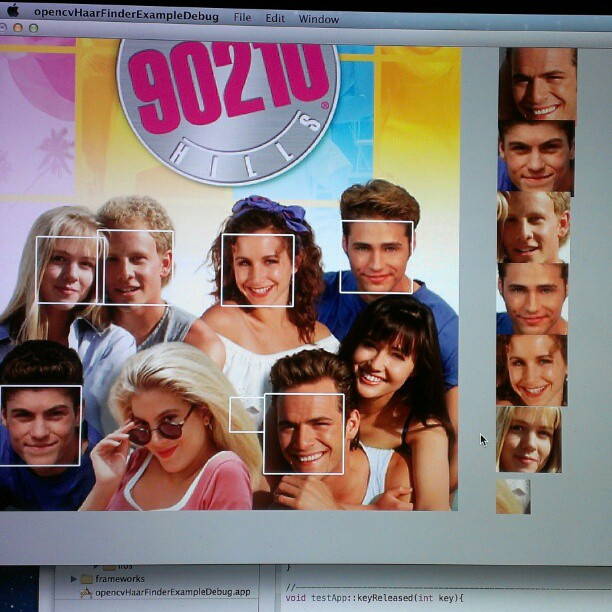
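For the "triangulate a drawn shape" step, one common technique is ear clipping, sketched below in Java. This is a swapped-in illustration, not what the project uses: it assumes the drawn outline has already been reduced to a simple (non-self-intersecting) polygon, and the class name `EarClip` is hypothetical. It repeatedly cuts off a convex corner whose triangle contains no other vertex, producing n − 2 triangles as index triples that could then be fed into a mesh.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class EarClip {
    // Twice the signed area; the sign tells us the winding order
    static double signedArea2(double[][] p) {
        double a = 0;
        for (int i = 0; i < p.length; i++) {
            double[] u = p[i], v = p[(i + 1) % p.length];
            a += u[0] * v[1] - v[0] * u[1];
        }
        return a;
    }

    // > 0 when a -> b -> c turns the same way as a positive-area polygon
    static double cross(double[] a, double[] b, double[] c) {
        return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0]);
    }

    // Inclusive test: points on an edge count as inside (conservative)
    static boolean inTriangle(double[] p, double[] a, double[] b, double[] c) {
        return cross(a, b, p) >= 0 && cross(b, c, p) >= 0 && cross(c, a, p) >= 0;
    }

    // Returns triangles as index triples into pts
    static List<int[]> triangulate(double[][] pts) {
        List<Integer> idx = new ArrayList<>();
        for (int i = 0; i < pts.length; i++) idx.add(i);
        if (signedArea2(pts) < 0) Collections.reverse(idx); // normalize winding
        List<int[]> tris = new ArrayList<>();
        while (idx.size() > 3) {
            boolean clipped = false;
            for (int i = 0; i < idx.size(); i++) {
                int ia = idx.get((i + idx.size() - 1) % idx.size());
                int ib = idx.get(i);
                int ic = idx.get((i + 1) % idx.size());
                double[] a = pts[ia], b = pts[ib], c = pts[ic];
                if (cross(a, b, c) <= 0) continue;          // reflex or flat corner: not an ear
                boolean empty = true;
                for (int j : idx) {
                    if (j == ia || j == ib || j == ic) continue;
                    if (inTriangle(pts[j], a, b, c)) { empty = false; break; }
                }
                if (!empty) continue;                       // another vertex inside: not an ear
                tris.add(new int[]{ia, ib, ic});
                idx.remove(i);                              // clip the ear
                clipped = true;
                break;
            }
            if (!clipped) break;                            // degenerate or self-intersecting input
        }
        if (idx.size() == 3) tris.add(new int[]{idx.get(0), idx.get(1), idx.get(2)});
        return tris;
    }
}
```

Ear clipping is O(n²) or worse, which is fine for hand-drawn outlines of a few hundred points; very dense or overlapping strokes would need cleanup (or a library triangulator) first.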

Another app I was working on used opencvHaarFinder to detect faces and make a playing card by exporting to PDF. I only got as far as finding faces and laying them out on the side. I will keep working on this, and will also test ofFaceOSC and ofTLDdetector, as they might use better algorithms. https://github.com/tchoi8/IACD/tree/master/opencvHaarFinderExample2

It can be tough sometimes to choose what to eat. Fret no more, I've found a solution. I took several recipes and food photos from the web and used FaceOSC to create a program that randomly chooses a meal and shows how to make it. The food keeps getting randomized while the eyebrows are raised and stops when they return to their normal position. However, I (unintentionally) found out later that blinking works as well. Once you are filled with joy because you are satisfied with the food the program has chosen for you, you smile and the recipe for that food appears. You have to keep smiling to read the recipe, though. (How else would the program know how happy you are with the choice it made for ya!?)

Here’s the code: https://github.com/pattvira/faceOSC_food

Sources for recipes: www.smittenkitchen.com
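The interaction described above boils down to a small per-frame state machine. Here is a minimal Java sketch of it, not the project's code: the thresholds (resting eyebrow height, how far is "raised", what mouth width counts as a smile) and the meal names are hypothetical and would have to be calibrated against the values FaceOSC reports for a given face.

```java
import java.util.Random;

class FoodPicker {
    enum State { IDLE, SHUFFLING, SHOW_RECIPE }

    private final String[] meals;
    private final Random rng;
    private final float browBaseline;  // resting eyebrow height (assumed, calibrate per face)
    private final float browRaise;     // how far above baseline counts as "raised" (assumed)
    private final float smileWidth;    // mouth width that counts as a smile (assumed)
    int current = 0;
    State state = State.IDLE;

    FoodPicker(String[] meals, long seed, float browBaseline, float browRaise, float smileWidth) {
        this.meals = meals;
        this.rng = new Random(seed);
        this.browBaseline = browBaseline;
        this.browRaise = browRaise;
        this.smileWidth = smileWidth;
    }

    // Call once per frame with the latest FaceOSC values
    State update(float eyebrowLeft, float eyebrowRight, float mouthWidth) {
        float brow = (eyebrowLeft + eyebrowRight) / 2f;
        if (brow > browBaseline + browRaise) {
            current = rng.nextInt(meals.length);  // keep shuffling while the brows are up
            state = State.SHUFFLING;
        } else if (mouthWidth > smileWidth) {
            state = State.SHOW_RECIPE;            // recipe is shown only while smiling
        } else {
            state = State.IDLE;                   // brows down: the current pick stays frozen
        }
        return state;
    }

    String currentMeal() {
        return meals[current];
    }
}
```

Because the pick only changes in the SHUFFLING branch, the meal stays fixed once the eyebrows drop, and smiling merely switches what is displayed.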

This was a really fun learning experience. At first it was a bit frustrating to figure out where to start, but after insightful instructions from Golan, everything went pretty smoothly. The code is pretty basic and could use much more work. Basically, it compares the brightness of the video pixel under each character with a threshold value. If the pixel is darker than the threshold, the character jumps up (ascends); if not, it falls down. I wanted to make the characters rotate when they bounce up, and to be able to move the text from right to left and back, but I didn't get there yet.

The second video has an epic fail near the end.

GitHub: https://github.com/tchoi8/IACD/tree/master/TextRain

```processing
import processing.video.*;

PFont f;

String message = ":-@ :-> K@))(*#dfghj:-& k:-::::P:-*&**@!!!!~~®-P*#dfghj:-& k:-::::P:-*&*#dfghj:-& k:-::::P:-*&lL";

// An array of Letter objects
Letter[] letters;

Capture video;

// Video pixels at or below this brightness count as a person/object
int threshold = 95;

void setup() {
  size(640, 480, P2D);  // Change size to 320 x 240 if too slow at 640 x 480
  smooth();
  strokeWeight(5);

  // Uses the default video input, see the reference if this causes an error
  video = new Capture(this, width, height);
  video.start();

  noCursor();
  f = createFont("Arial", 25, true);
  textFont(f);

  // Initialize Letters at the correct x location, 10 px apart
  letters = new Letter[message.length()];
  int x = 8;
  for (int i = 0; i < message.length(); i++) {
    letters[i] = new Letter(x, 200, message.charAt(i));
    x += 10;
  }
}

void draw() {
  if (video.available()) {
    video.read();
    video.loadPixels();
    image(video, 0, 0);

    for (int i = 0; i < letters.length; i++) {
      letters[i].display();
      if (mousePressed) {
        letters[i].goup();   // while the mouse is held, every letter floats up
      } else {
        letters[i].shake();  // otherwise fall or rise depending on the video
        letters[i].check();
      }
    }
  }
}

class Letter {
  char letter;
  // The object knows its original "home" location
  float homex, homey;
  // As well as its current location
  float x, y;

  Letter(float x_, float y_, char letter_) {
    homex = x = x_;
    homey = y = y_;
    letter = letter_;
  }

  // Display the letter
  void display() {
    fill(223, 22, 22);
    textAlign(LEFT);
    text(letter, x, y);
  }

  // Fall through bright (background) pixels, jump up out of dark ones
  void shake() {
    // Sample the camera frame rather than the canvas, so the red glyph itself is never read.
    // PImage.get() returns 0 (black) for coordinates outside the frame.
    color testValue = video.get(int(x), int(y));
    float testBrightness = brightness(testValue);
    if (testBrightness > threshold) {
      x += random(-1, 1);
      y += random(5, 10);
      if (y > 400) {
        y = -height;  // send it off-screen; check() brings it back in at y = 60
      }
    } else {
      x -= random(-1, 1);
      y -= random(20, 26);
    }
  }

  // Keep letters inside the band between y = 30 and y = 460
  void check() {
    if (y > 460) {
      y = 30;
    } else if (y < 30) {
      y = 60;
    }
  }

  // Float upward with a little horizontal jitter
  void goup() {
    x -= random(-2, 2);
    y -= random(10, 15);
  }

  // Return the letter home
  void home() {
    x = homex;
    y = homey;
  }
}
```

Here is my reimplementation of Text Rain by Camille Utterback and Romy Achituv. This is my first time working with Processing and learning about object-oriented programming. I created a class called Letter that contains each letter's data and methods. Once I got the letters to fall, I connected the program to the webcam by importing the video library. The program compares the brightness of the pixel under each letter to a threshold. If the brightness is above the threshold, the letter rises; if not, it falls.

Here’s the code: https://github.com/pattvira/textRain

I'm still finding my way around openFrameworks and also Xcode, which feels clunky and extremely demanding (why does it keep changing my directory view when I compile?). At the same time, the benefits of OF over Processing in terms of computational power are pretty obvious even now.

My demo combines the openCV addon, which comes included, with the ofDrawnetic addon. The two don't interact at all; they simply share the screen. Just for the record, ofDrawnetic has some really top-notch examples.

Here’s the repo: https://github.com/johngruen/camera_draw

ofxaddons from john gruen on Vimeo.

For my FaceOSC implementation, I used Processing and Dan Wilcox's Face class. My sketch is a 3D robot that can be controlled by rotating your face around the x, y, and z axes and by scaling along the z-axis. The robot's eyes are semi-independently articulated by the eyebrow values of the Face object. Additionally, the robot's eyes glow red when the user opens their mouth fully. See the video below for a working demo.

GitHub repo: https://github.com/johngruen/roberto

fosc from john gruen on Vimeo.
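The head rotation and scale can drive the robot's transforms directly; the eyebrow and mouth behavior needs a little more logic. Below is a minimal Java sketch of one way to get "semi-independent" eyes: each eye's lift blends its own eyebrow with the average of both, so raising one brow moves that eye most while the other follows slightly. All constants (resting brow height, lift per unit, independence factor, the mouthHeight that counts as fully open) are hypothetical and not taken from the roberto repo.

```java
class RobotFace {
    // Hypothetical tuning values, not taken from the original sketch
    static final float BROW_BASELINE = 7.5f;    // resting eyebrow height
    static final float LIFT_PER_UNIT = 6f;      // pixels of eye lift per unit of eyebrow raise
    static final float INDEPENDENCE = 0.5f;     // 0 = eyes move together, 1 = fully independent
    static final float MOUTH_FULLY_OPEN = 5f;   // mouthHeight treated as "fully open"

    // Vertical offset for one eye: a blend of its own brow and the average of both brows
    static float eyeLift(float ownBrow, float otherBrow) {
        float avg = (ownBrow + otherBrow) / 2f;
        float blended = INDEPENDENCE * ownBrow + (1f - INDEPENDENCE) * avg;
        return (blended - BROW_BASELINE) * LIFT_PER_UNIT;
    }

    // Packed RGB eye color: red when the mouth is fully open, otherwise white
    static int eyeColor(float mouthHeight) {
        return mouthHeight >= MOUTH_FULLY_OPEN ? 0xFF0000 : 0xFFFFFF;
    }
}
```

With these constants, raising only the left brow by one unit lifts the left eye by 4.5 px and the right eye by 1.5 px; setting INDEPENDENCE to 1 would make the eyes fully independent.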