Computer Vision

Reconstructing Rome

Paper for those interested: http://www.cs.cornell.edu/~snavely/publications/papers/ieee_computer_rome.pdf

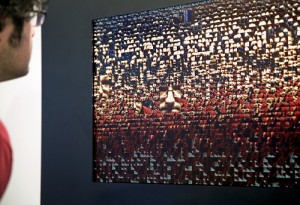

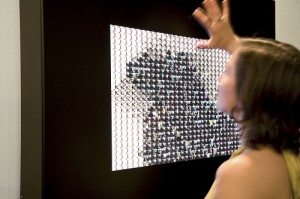

This was recommended by a friend of mine who is really into CV. This project is amazing: it takes photos from social image-sharing sites like Flickr and uses the images, together with their geolocation, to recreate a 3-D image of Rome’s monuments. Crowdsourced 3-D imaging. Amazing.

Faceoff/FaceAPI

FaceAPI is an API developed to track head motion using your computer. This is not too far off from what we have seen with FaceOSC. The cool part is the fact that this API is integrated into the Source engine. Games developed with the Half-Life 2 engine can use it to provide head-based gesture control, anything from realistic 3-D feedback to zooming in when you move closer to the screen. The video goes over this in detail. I simply like the idea of using head interaction in gaming since webcams are so commonplace now.
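To make the "zoom in when closer to the screen" idea concrete, here is a rough sketch of how a game might turn a tracked face into a zoom level. This is not FaceAPI's actual interface. The function name `zoom_from_face_width`, the baseline width, and the zoom limits are all made up for illustration, and I'm assuming some head tracker already gives you the width of the face in pixels (a bigger face in the frame means the player is closer to the webcam).

```python
def zoom_from_face_width(face_width_px, baseline_width_px=120.0,
                         min_zoom=1.0, max_zoom=3.0):
    """Map the apparent face width (in pixels) to a zoom factor.

    Hypothetical helper: baseline_width_px is the face width measured
    when the player sits at a normal distance. Leaning in makes the
    face appear wider, which raises the zoom factor proportionally.
    """
    # No face found (or a bad baseline): fall back to no zoom.
    if face_width_px <= 0 or baseline_width_px <= 0:
        return min_zoom

    # Apparent width is roughly inversely proportional to distance,
    # so the width ratio works as a simple "closeness" measure.
    ratio = face_width_px / baseline_width_px

    # Clamp so leaning back never zooms out past normal view and
    # leaning in never zooms beyond the maximum.
    return max(min_zoom, min(max_zoom, ratio))
```

Calling this once per frame with the latest face width would give a value the game could feed into its camera field of view. A real version would probably smooth the value over a few frames so the view doesn't jitter.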

Battlefield Simulator

https://www.youtube.com/watch?v=eg8Bh5iI2WY

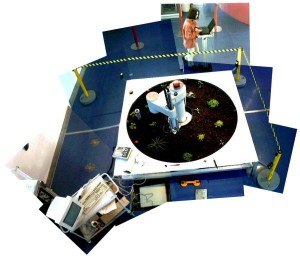

Immersive gaming to the MAX. This is by far the most impressive game controller/reality simulator I have seen. It really engages your entire body. I won’t be able to explain everything, but basically CV is used here to map pixels onto the dome based on the user’s body position. Body gestures are also detected via Kinect to trigger game events. On top of that, the game is constantly scanned to detect when the player gets hurt. When the player is hurt in-game, paintballs are fired at them, making the pain a reality. Check out the video. It’s long, but worth it.
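I don't know exactly how the builders scan the game for damage, but one plausible way is to watch the screen for the red flash many shooters show when you take a hit. The sketch below is only a guess at that idea, not their method. The function names, the thresholds, and the assumption that a frame is a list of rows of `(r, g, b)` tuples are all mine.

```python
def red_fraction(frame, dominance=1.5, min_red=150):
    """Return the fraction of pixels in the frame that look strongly red.

    frame is assumed to be a list of rows, each row a list of
    (r, g, b) tuples with values from 0 to 255.
    """
    total = 0
    red = 0
    for row in frame:
        for r, g, b in row:
            total += 1
            # A pixel counts as red if it is bright in the red channel
            # and clearly stronger there than in green or blue.
            if r >= min_red and r >= dominance * max(g, b, 1):
                red += 1
    # An empty frame has no red pixels.
    return red / total if total else 0.0


def player_hurt(frame, threshold=0.3):
    """Guess that the player was hit if enough of the screen is red."""
    return red_fraction(frame) >= threshold
```

In a setup like this you would grab a frame of the game several times a second, run `player_hurt` on it, and fire the paintball trigger when it returns `True`. The thresholds would need tuning per game, since a red sunset or explosion could set it off too.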