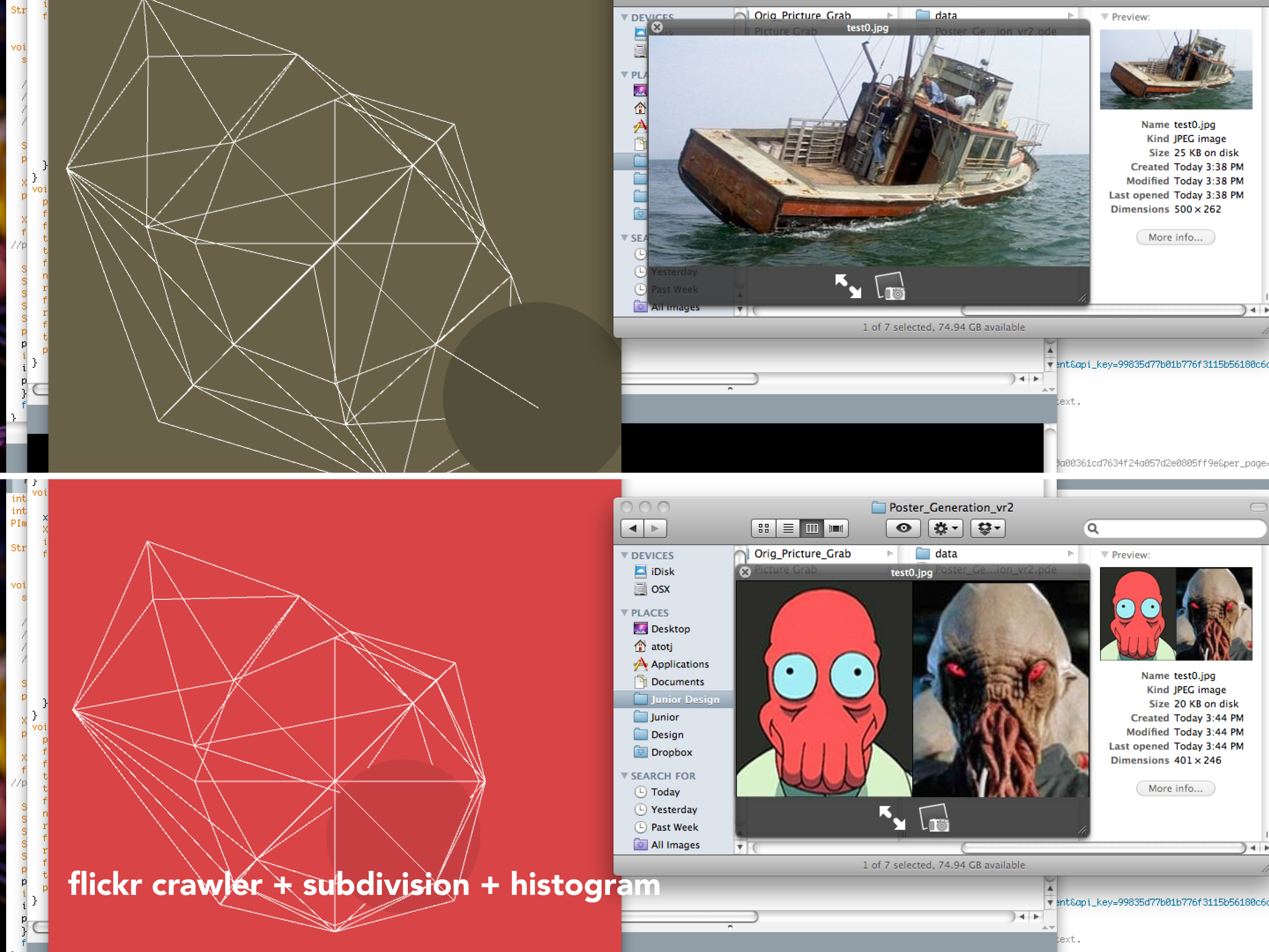

Project 1: Flickr Scrape + Face Averaging

For the first “real” project, I had a bit of trouble deciding what kind of data I wanted to obtain. Initially, I was pretty set on using Twitter and the Twitter4J library for Processing. I was entranced by the thought of tapping into the real-time thoughts of Pittsburgh's Twitter users in relation to the weather. I planned on creating a gradation of color swatches by taking a photograph of the sky, averaging its color, and relating this color to the tweets posted at that time, exploring the possibility that cloud breaks or the rare rays of sunshine would drastically affect the mood of local Pittsburghers. However, my idea lacked depth, and the topic of weather was pretty much off limits for this project.

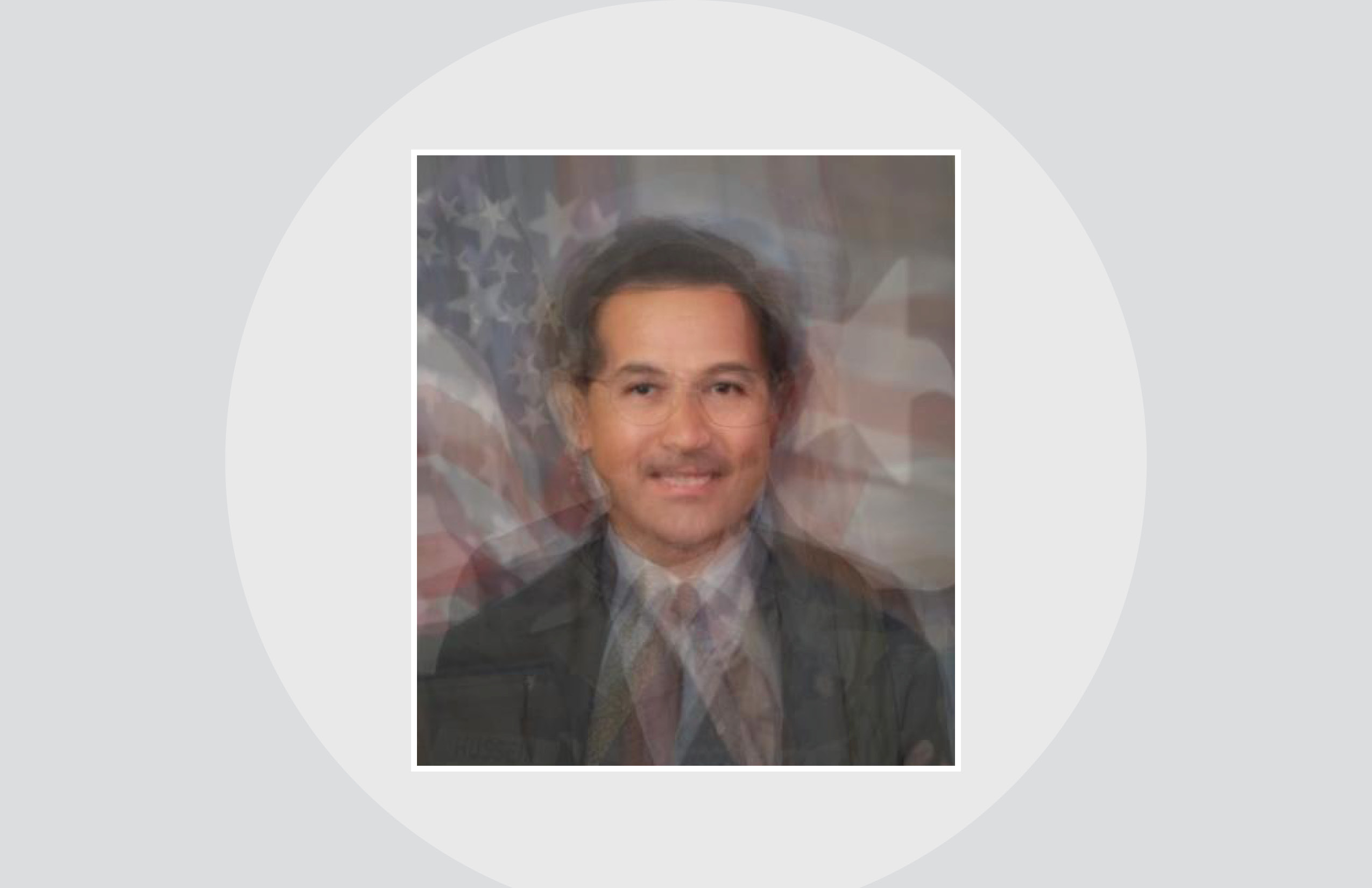

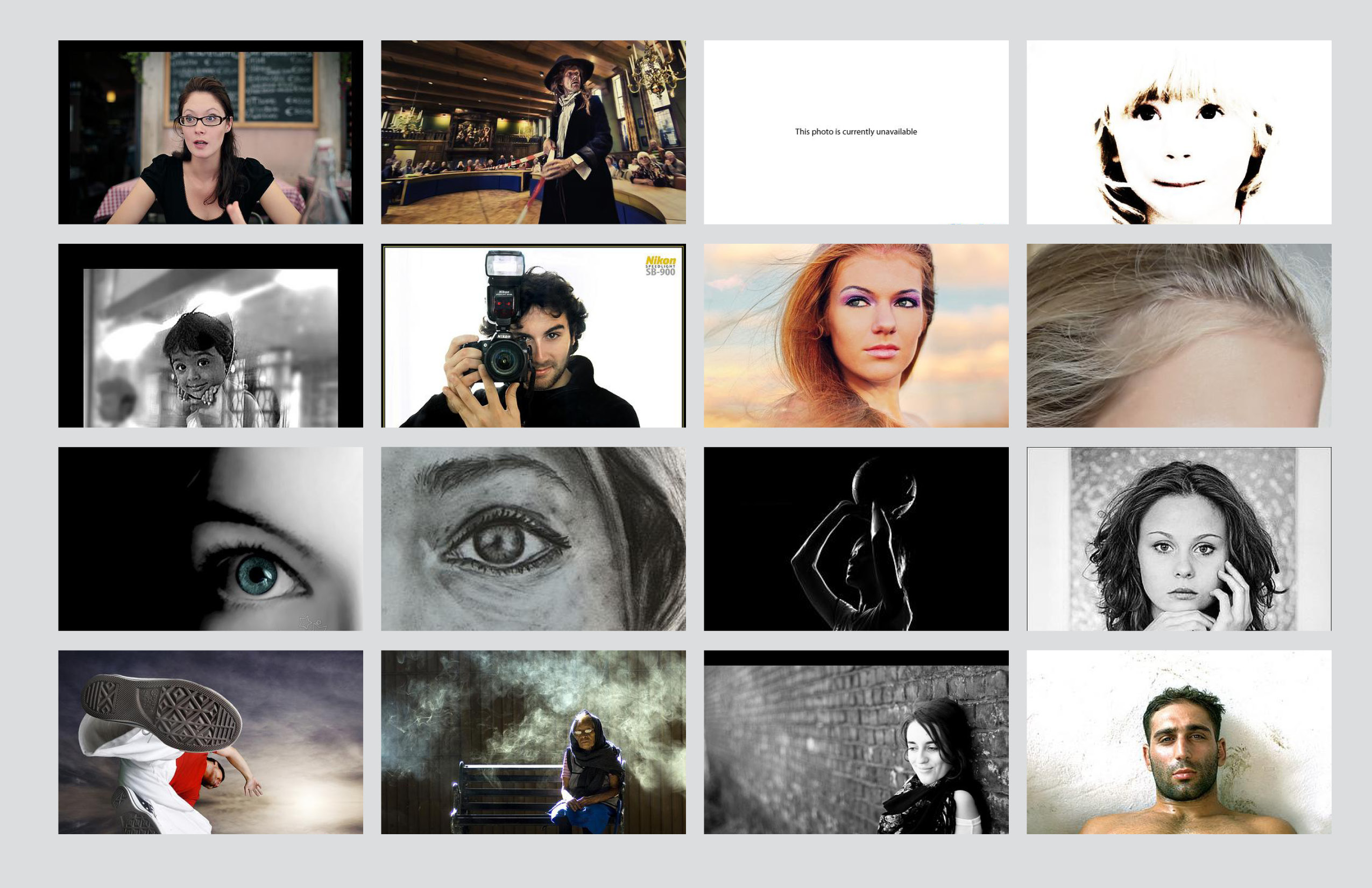

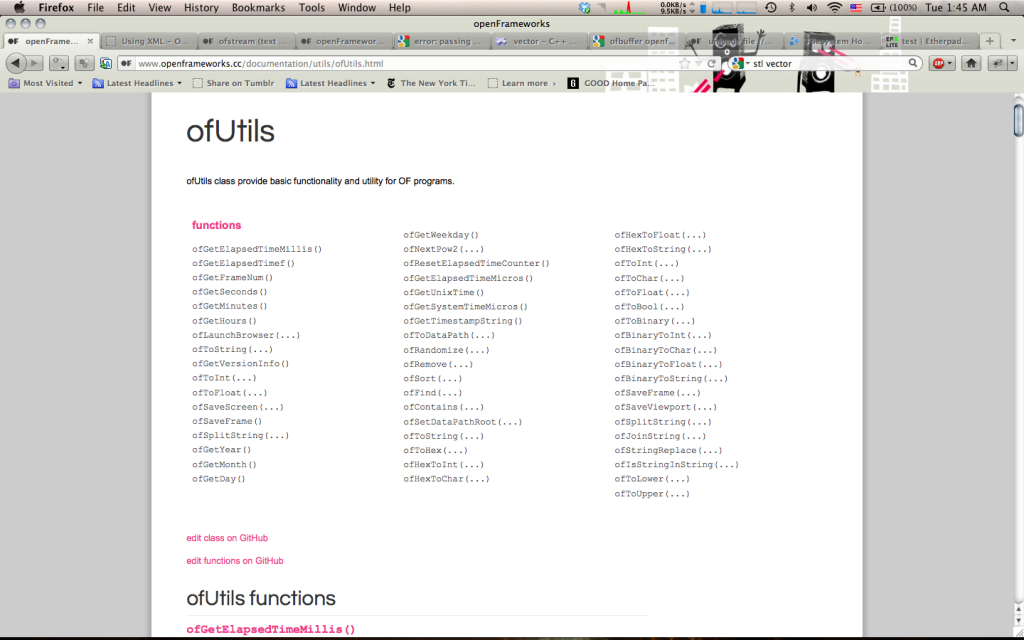

Therefore, I sought to explore another form of data visualization: face averaging. From the very beginning, I was somewhat obsessed with the concept of using averaging as a means of abstracting data, whether it be the color of the sky or people’s faces. As my final idea, I sought to plug ambiguous words such as “success” and “money” into Flickr, scrape the resulting images, run the images through Face Tracker in openFrameworks, and finally average the faces in MATLAB.
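To make the averaging step concrete, here is a minimal Python sketch of a pixel-wise mean over a set of images. It is only an illustration, not the MATLAB code used for this project. It assumes the images are already the same size and roughly aligned, and the nested-list image format and the name `average_images` are made up for the example.

```python
def average_images(images):
    """Pixel-wise mean of same-sized RGB images.

    Each image is a list of rows; each row is a list of (r, g, b) tuples.
    This format is an assumption chosen to keep the sketch dependency-free.
    """
    if not images:
        raise ValueError("need at least one image")
    height, width = len(images[0]), len(images[0][0])
    for img in images:
        if len(img) != height or any(len(row) != width for row in img):
            raise ValueError("all images must share the same dimensions")

    count = len(images)
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            # Sum each channel across all images, then divide by the count.
            sums = [0, 0, 0]
            for img in images:
                for c in range(3):
                    sums[c] += img[y][x][c]
            row.append(tuple(round(s / count) for s in sums))
        result.append(row)
    return result
```

The same idea applies to a single sky photograph: averaging every pixel of one image down to a single color is just the mean over positions instead of the mean over images.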

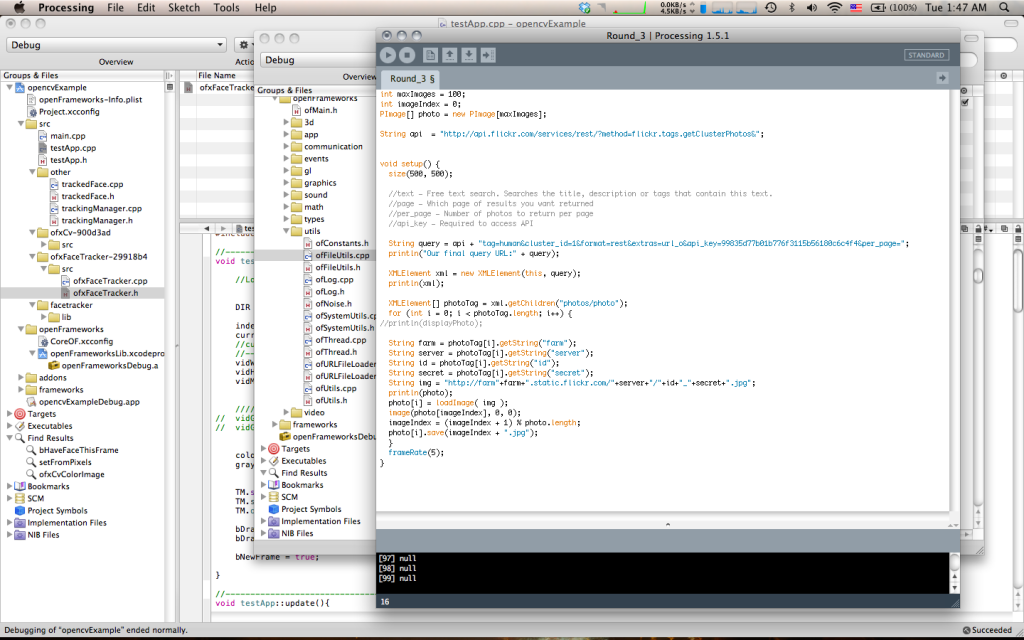

I’ll admit, for a novice Processing user, diving into openFrameworks, Face Tracker, and the Flickr API simultaneously was quite daunting. I knew what I wanted to do and the general means of achieving the goal, but the details escaped me. I had never really investigated the C++ programming language before, and I wasn’t really sure how to use the Flickr API.

Regardless, I dove in headfirst, writing (or rather copying) a Flickr scraper from the Processing forums.

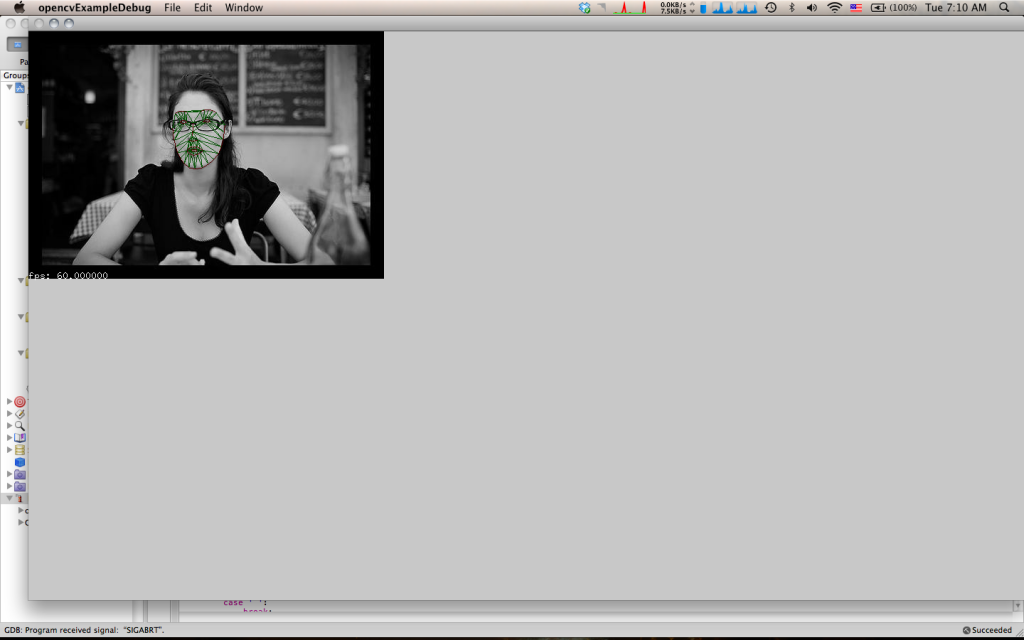

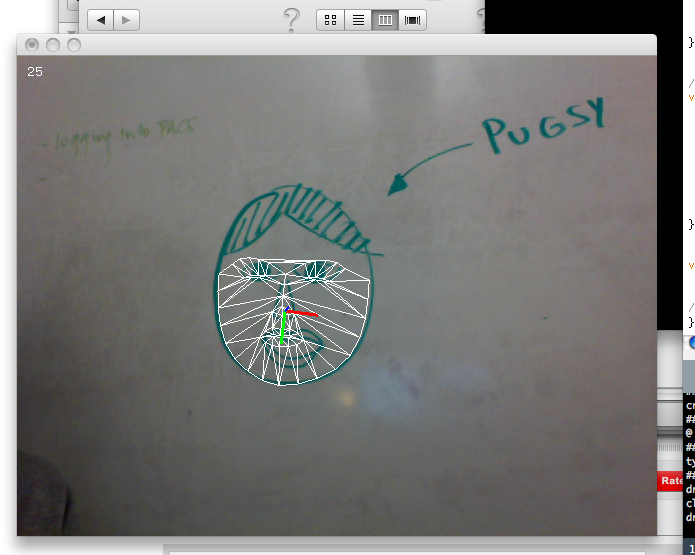

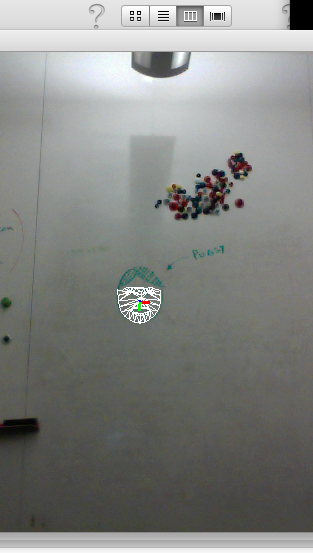

Once I had my images, I headed into openFrameworks and immediately ran into headaches with adding addons in Xcode 3 (which Max Hawkins assured me would have disappeared had I already installed Xcode 4). Anyway, logically, I knew I had to replace the video feed going into Face Tracker with my own images, find where the points were being drawn, and store those points in a .txt file I could access later. It’s a lot easier than it sounds.

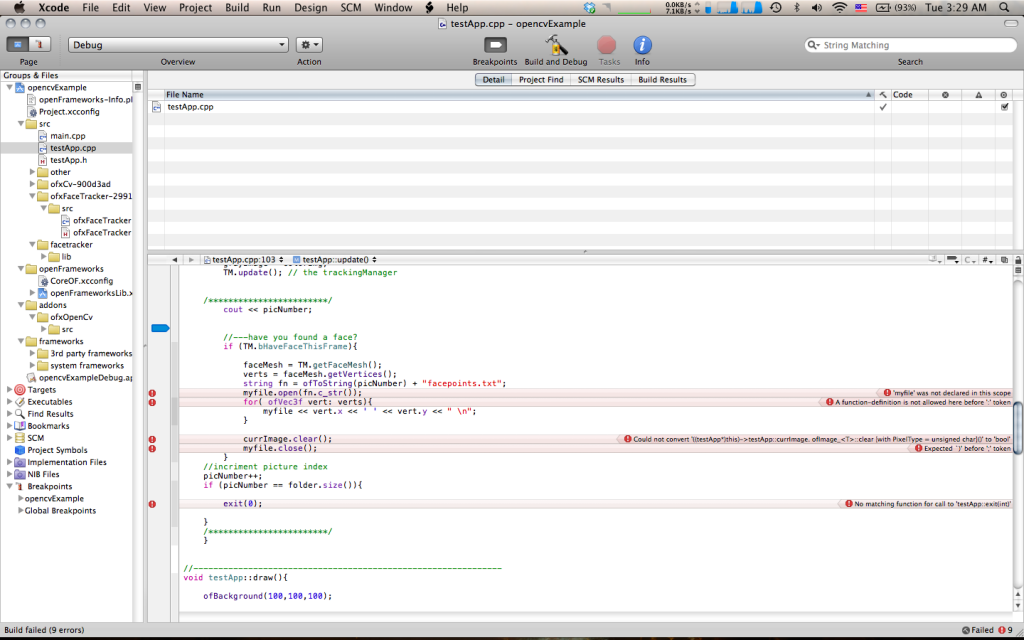

Whenever an error appeared, I would try to decode it either by copying and pasting it into Google, only to be greeted with more mumbo jumbo I didn’t understand, or by pestering Alex Wolfe to lend me a helping hand (which she graciously did). Mostly, however, I struggled on my own, as I really wanted to at least understand the basic foundations of C++ and openFrameworks.

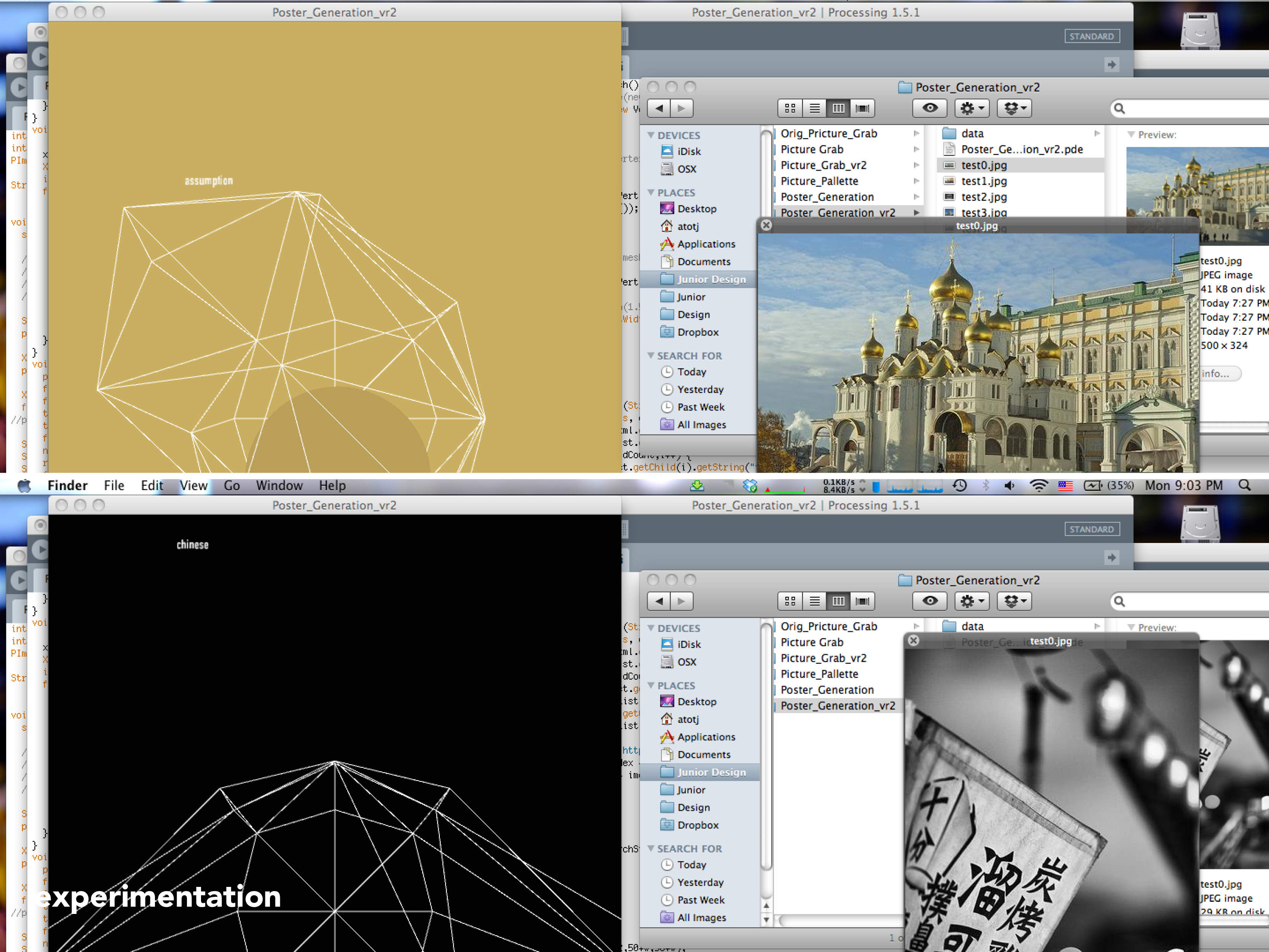

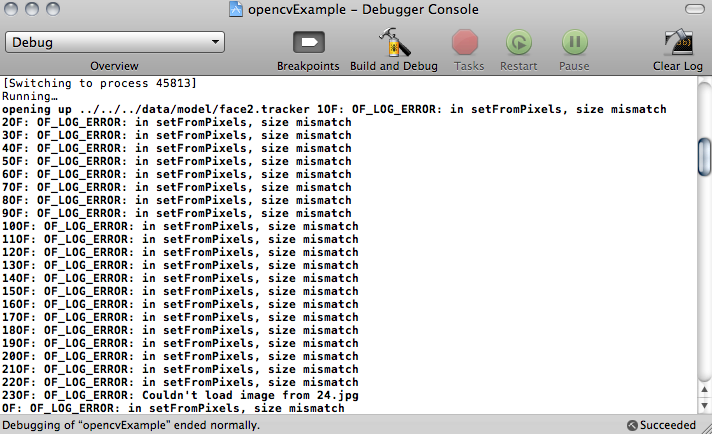

There were essentially three major parts I had trouble with: reading the .jpg files sequentially, getting Face Tracker to read the images, and outputting the plotted points to a .txt file. I ran into numerous logic errors, plus other errors that came and went… Essentially, it was a hack job: I cut and pasted, typed random classes and types into the .h file, and threw them into the main .cpp file in the hope that they would do something I wanted without making Xcode too mad. Most of the time, I was frustrated by my lack of knowledge of C++, which severely hindered how much I could figure out on my own.
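For anyone attempting something similar, two of those three parts (walking the .jpg files in order and dumping points to a text file) can be sketched in a few lines of Python. This is not the openFrameworks code from the project. The function names and the one-line-per-image output format are assumptions made for the example, and the tracking step in between is left out entirely.

```python
import re
from pathlib import Path


def sorted_jpgs(folder):
    """Return .jpg paths in natural order, so img2.jpg comes before img10.jpg."""
    def natural_key(path):
        # re.split with a capture group alternates text and digit runs;
        # odd positions are the digit runs, which are compared as integers.
        parts = re.split(r"(\d+)", path.name.lower())
        return [int(p) if i % 2 else p for i, p in enumerate(parts)]

    return sorted(
        (p for p in Path(folder).iterdir() if p.suffix.lower() == ".jpg"),
        key=natural_key,
    )


def write_points(txt_path, points_by_image):
    """Write one line per image: the file name followed by x y pairs.

    points_by_image is a list of (name, [(x, y), ...]) tuples; this layout
    is a hypothetical format, not the one Face Tracker produces.
    """
    with open(txt_path, "w", encoding="utf-8") as out:
        for name, points in points_by_image:
            coords = " ".join(f"{x:.2f} {y:.2f}" for x, y in points)
            out.write(f"{name} {coords}\n")
```

Sorting by the numeric part of the name matters because a plain alphabetical sort would put `img10.jpg` ahead of `img2.jpg`, which makes it easy to mismatch points and images later.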

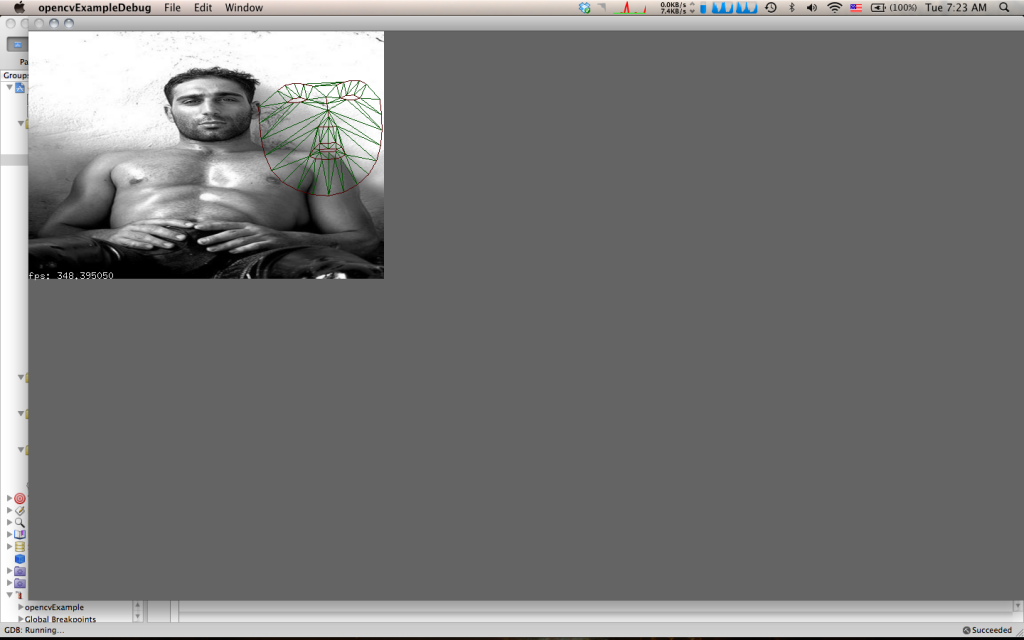

Finally, at 2:00 a.m. this morning, Alex and I (mostly Alex) were able to wrangle Face Tracker into doing our bidding and exporting everything very nicely. Unfortunately, once I threw my images into MATLAB, things went to hell… again. I had somewhat anticipated this beforehand, but I really did not know how to work around it: Face Tracker is not wholly accurate. About 75% of the time, it would not filter the images correctly, either finding faces at random places in the frame or failing to line up with the faces actually in the image. Of course, this meant that the averaged face looked nothing remotely human, or even like anything at all.
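One possible way to discard the most obviously bad fits before averaging is a simple sanity check on the tracked points. The Python sketch below is not something used in this project. The function name and the 10% size threshold are assumptions for illustration. It can only catch fits that leave the frame or collapse to a tiny region, not a well-formed "face" found on something that isn't one.

```python
def plausible_face(points, width, height, min_fraction=0.1):
    """Return True if tracked points look like a usable face fit.

    points is a list of (x, y) tuples in pixel coordinates; width and height
    are the image dimensions. min_fraction is a hypothetical threshold: the
    smallest allowed bounding-box side as a fraction of the shorter image side.
    """
    if not points:
        return False
    xs = [x for x, _ in points]
    ys = [y for _, y in points]

    # Reject fits where any point falls outside the image.
    if min(xs) < 0 or max(xs) >= width or min(ys) < 0 or max(ys) >= height:
        return False

    # Reject fits whose bounding box is tiny relative to the image.
    limit = min_fraction * min(width, height)
    box_w = max(xs) - min(xs)
    box_h = max(ys) - min(ys)
    return box_w >= limit and box_h >= limit
```

Images that fail the check would simply be skipped, so the average is taken over fewer but cleaner faces.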