Duncan Boehle – Project 3 Proposal

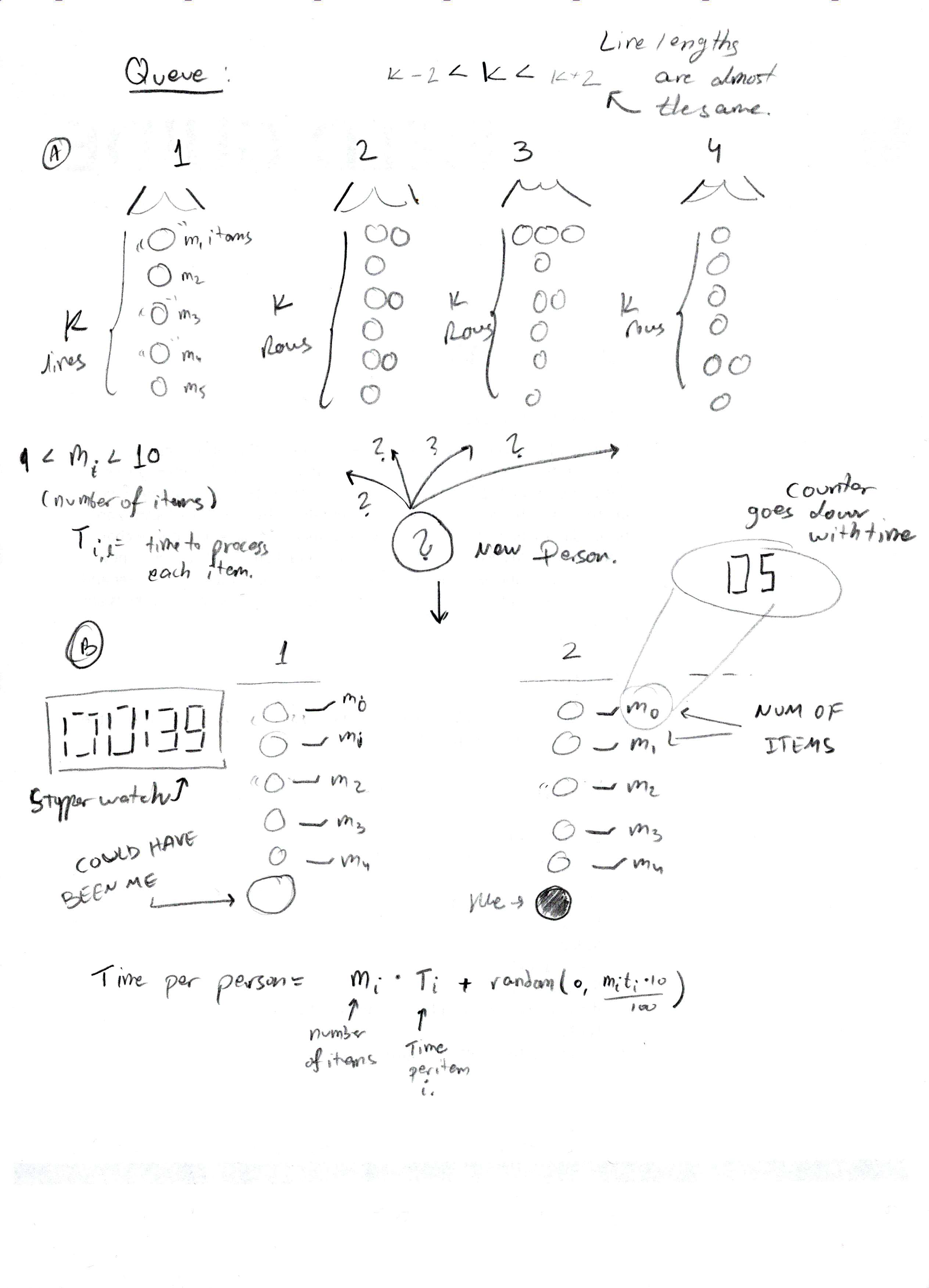

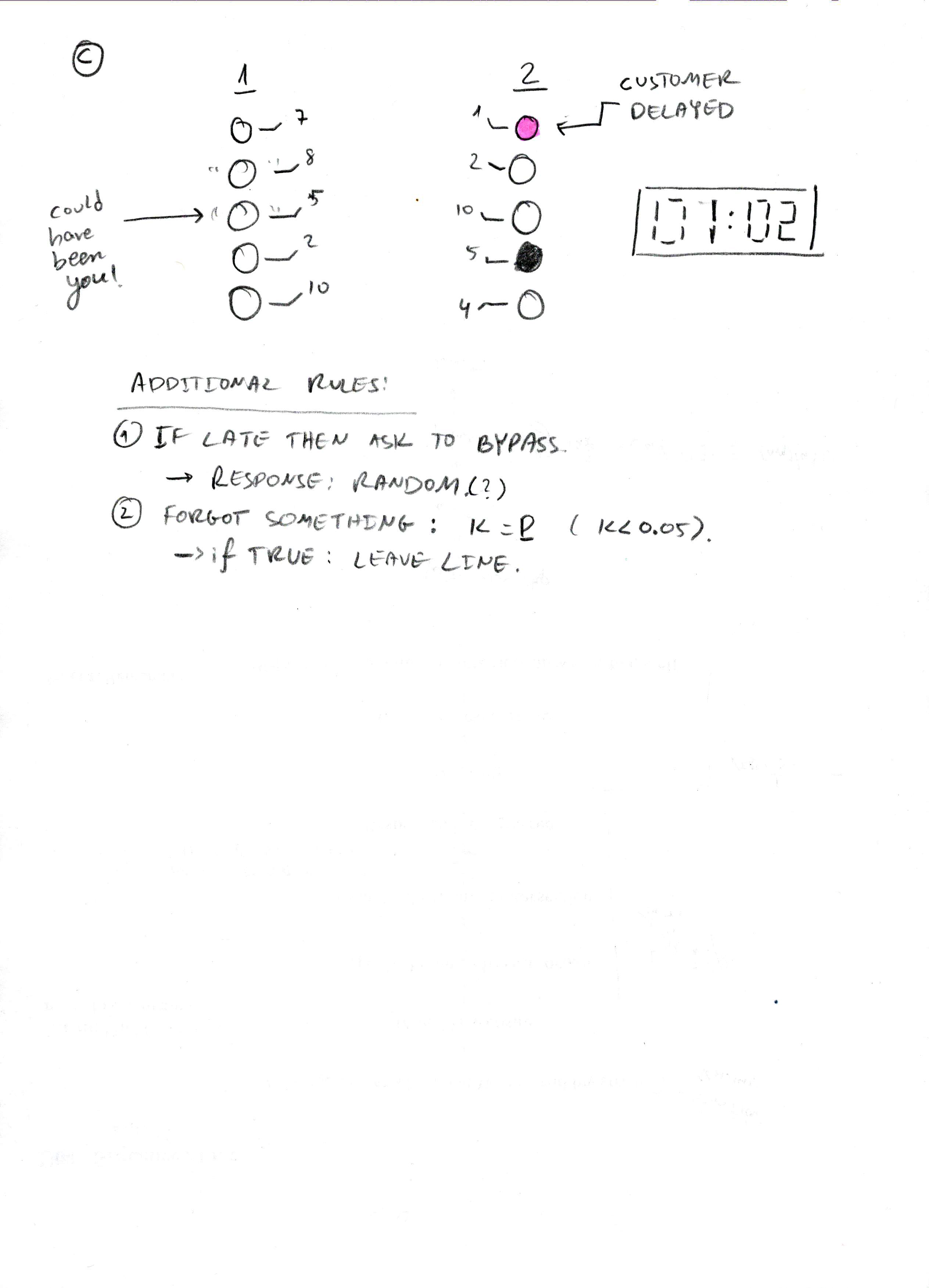

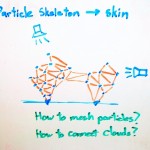

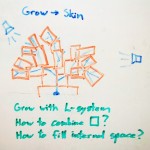

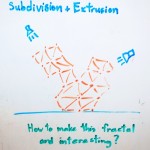

For my generation project, I plan to create a simulation for interactively growing, manipulating, and destroying plant-like organisms.

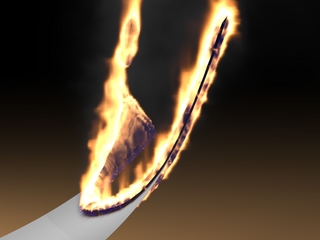

Throughout my gaming history, I’ve played countless games based around the element tetrad – the balance between air, water, earth, and fire. However, what I haven’t seen is an organic, emergent simulation of these elements, or of how they react with each other, in a way that still affects the game. Some of the work of Ron Fedkiw and other graphics researchers has been very inspiring, and I could learn from some of their techniques for combining the kinds of mesh and fluid simulations I’ve already programmed. Here’s one paper in particular that’s relevant, along with a couple of videos.

[scribd id=82414078 key=key-2ksb57goml7o9moscuee mode=list height=100px]

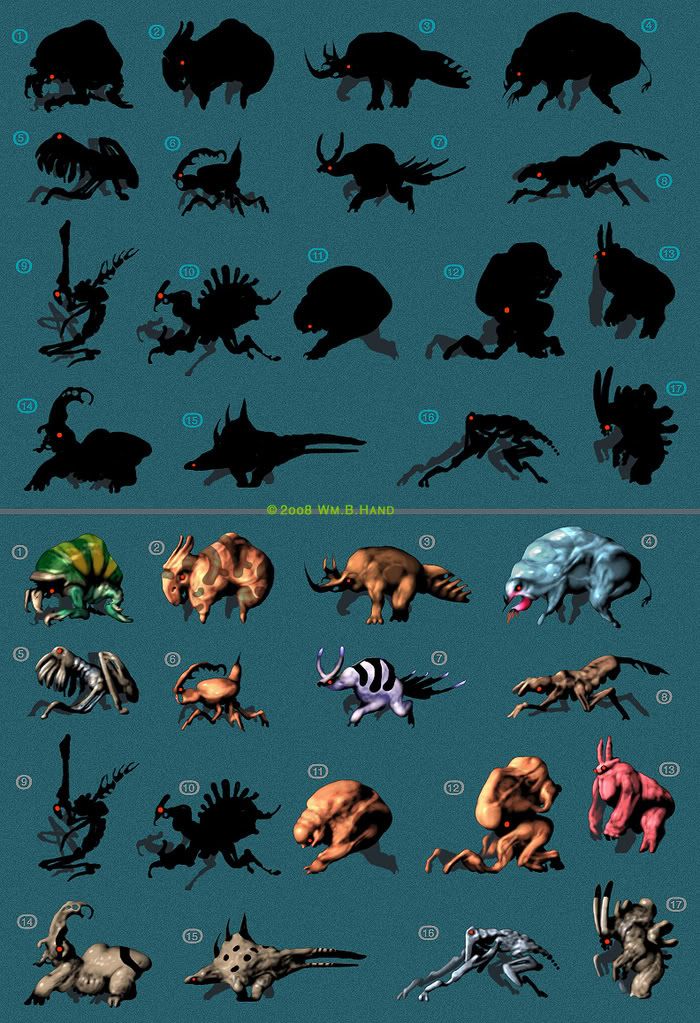

Unfortunately, those exact techniques seem a bit too advanced for the scope of this version of the project. As a first stab at this simulation, I want to stick to plant life. Besides the art in the games from my recent Looking Outwards post, I was also inspired by the mathematics of plant growth taught in a video series by Vi Hart:

[youtube=https://www.youtube.com/watch?v=ahXIMUkSXX0&w=600]

The ideal interface I’m imagining for this project would use a Kinect sensor so that the player’s hands directly guide the growth of the plants. At first, I was hoping to play with the duality between earth and fire: one hand could grow plants while the other manipulated fire. However, I’m not sure this is either feasible or conceptually cohesive. I think it would make more sense to give the player only the ability to generate new life and accelerate the death of old life, and to experiment more with aging and life cycles rather than outright destruction. Perhaps I could add more elements later; for example, to see how water can promote growth, drown life, or douse fire, which can bring destruction to plants but cannot be rejuvenated.

To save time for polishing aesthetics and to make the interface more accessible, I plan to make everything two-dimensional, so I won’t use something like Unity for this project. Either openFrameworks or Cinder seems appropriate: openFrameworks already has decent Box2D and Kinect support through its ofx addons, and Cinder seems to link up nicely with existing C++ libraries. But since I’ve never used either of them, I’m very tempted to stick with what I know and use something like XNA with Microsoft’s Kinect SDK. Theoretically I could use Processing, since 2D drawing is dead-simple, it has plenty of Kinect and physics support, and it’s nice to be able to share things online. But if I ever wanted to extend the demo with more elements, a grid-based fluid simulation or advanced GPU rendering would likely be impractical.