Hello! I’m Emily.

I started programming by going to C-MITES weekend workshops at Carnegie Mellon to learn HTML. Fast forward a decade, and I’m a master’s student in human-computer interaction at the same institution.

I’m a recent graduate of the University of Rochester, where I studied computer science, linguistics, and music. In this course, I hope to merge the creativity and lightheartedness of my humanities background with the tech savviness and forward thinking of my science background to create some beautiful, useful things. Maybe some not-so-useful things, too.

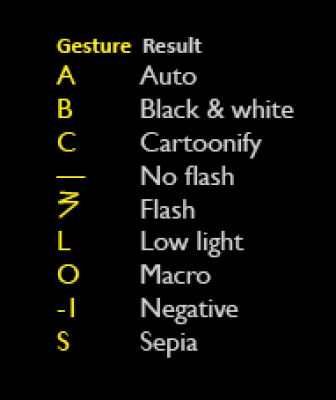

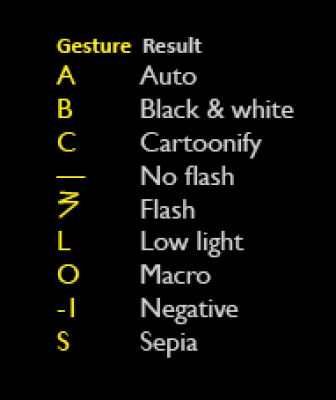

A recent project of mine is GestureCam, an Android app that I developed for the Software Structures for User Interfaces course last semester. I take a lot of pictures with my phone, and I get frustrated when I have to navigate through menus to find the setting or filter that I want, especially when I’m trying to capture an image quickly. To solve the problem, I added gesture recognition on top of a custom Android camera application and set it up to recognize a few gestures. Now, instead of fiddling around to find the flash button, I can simply draw a lightning bolt on the screen, and instead of searching for a black-and-white option, I can draw a capital B. Below is a list of the gestures that my app accepts:

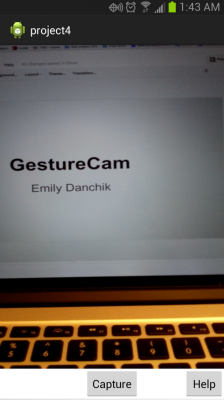

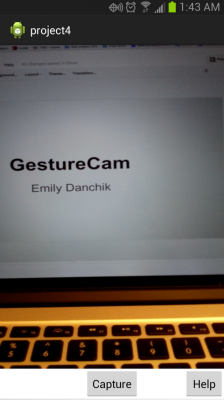
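For the curious, the gesture-to-setting idea can be sketched in a few lines of plain Java. This is a minimal illustration and not GestureCam's actual code: the gesture names (`lightning_bolt`, `capital_b`), the `Setting` enum, and the `MIN_SCORE` cutoff are all made up for the example, and it assumes the recognizer reports a gesture name along with a confidence score.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Illustrative sketch: maps recognized gesture names to camera settings. */
class GestureDispatcher {

    /** Hypothetical settings that a drawn gesture can toggle. */
    enum Setting { FLASH, BLACK_AND_WHITE }

    // Assumed cutoff: matches scoring below this are treated as "not recognized".
    static final double MIN_SCORE = 2.0;

    private final Map<String, Setting> bindings = new HashMap<>();

    GestureDispatcher() {
        // Hypothetical gesture names; a real app would use the names in its gesture library.
        bindings.put("lightning_bolt", Setting.FLASH);
        bindings.put("capital_b", Setting.BLACK_AND_WHITE);
    }

    /** Returns the setting bound to a gesture, or empty if the match is weak or unknown. */
    Optional<Setting> dispatch(String gestureName, double score) {
        if (score < MIN_SCORE) {
            return Optional.empty();
        }
        return Optional.ofNullable(bindings.get(gestureName));
    }

    public static void main(String[] args) {
        GestureDispatcher dispatcher = new GestureDispatcher();
        // A confident lightning bolt maps to the flash setting.
        dispatcher.dispatch("lightning_bolt", 3.5).ifPresent(System.out::println);
        // A shaky scribble is ignored rather than guessed at, so nothing is printed here.
        dispatcher.dispatch("capital_b", 0.4).ifPresent(System.out::println);
    }
}
```

Ignoring low-scoring matches is a deliberate choice in this sketch: when someone is trying to take a photo quickly, doing nothing on a sloppy scribble is probably less annoying than toggling the wrong setting.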

The following is a screenshot of my app running. The camera preview takes up most of the screen, with no buttons or menus obscuring the image. To change settings, the user draws shapes on the screen. If the user doesn’t know or has forgotten the available gestures, pressing the “help” button opens a popup with instructions. At the time, my camera was facing my laptop, and I took a picture of the presentation I was about to give.

The UI is lacking in style; creating a custom camera app for Android took me way longer than I expected. It’s hard. Someday, I’ll write a screenplay about my struggles.

On a positive note, it worked! Below are some examples of pictures that GestureCam took:

The entire SSUI class, looking surprisingly photogenic.

Our classmate, preparing for his presentation.

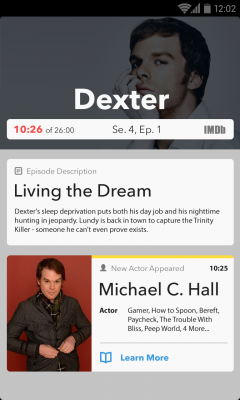

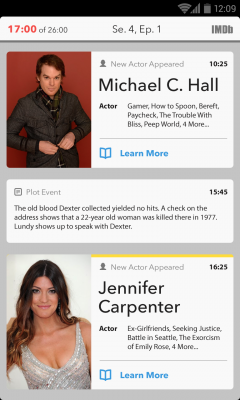

Does this guy look familiar?