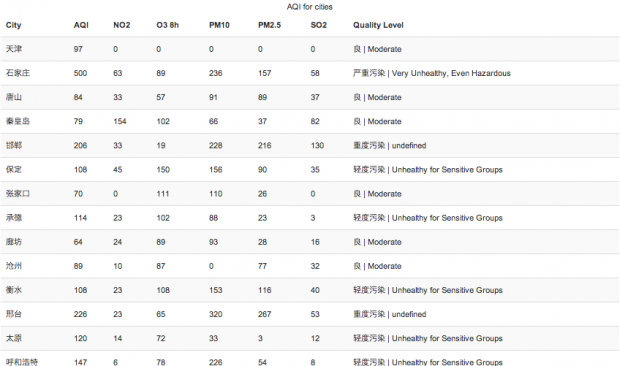

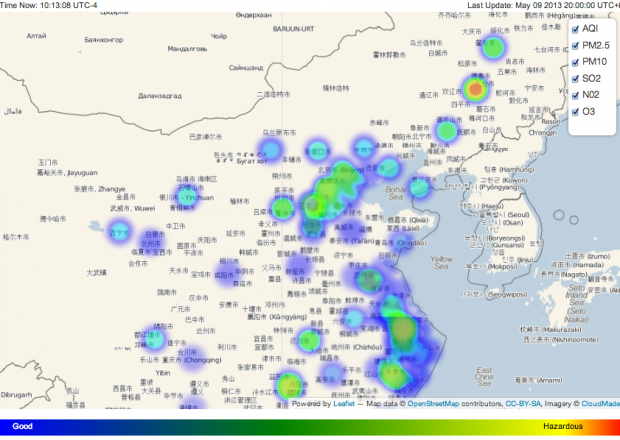

Title: AQI China / Author: Han Hua

Abstract: Real-time visualization of air quality in China, showing air pollution in major Chinese cities. http://tranquil-basin-8669.herokuapp.com/


Joshua

25 Apr 2013

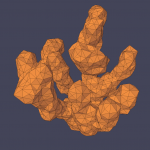

Looking to 3D print this and then investment cast it.

Marlena

22 Apr 2013

Still got a lot of work to do. Turns out flocking is still hard.

Also, modeling. Repetitive modeling. More hours are needed.

Marlena

15 Apr 2013

I still think I have some hurdles. I’ve gotten a lot done, though.

Here’s what I have: clouds, basic flocking, some models for the fish and the ship, and a couple of other miscellaneous items and scripts. I’ve been focusing mostly on new modeling techniques, researching clouds and shaders, and animation.

Here’s what I still need to do: more complex flocking, finished models, a few spawning scripts, and more complex animations. Mostly bulk work I think; I need to put in the hours and it’ll get done.
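The post doesn’t include the flocking code, so for reference here is a minimal sketch of the classic starting point, Craig Reynolds’ boids rules (separation, alignment, cohesion), in 2D. It is not Marlena’s implementation; the class names, neighbor radius and weights are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;

// One flock member: position and velocity in 2D.
class Boid {
    double x, y, vx, vy;
    Boid(double x, double y, double vx, double vy) { this.x = x; this.y = y; this.vx = vx; this.vy = vy; }
}

// Minimal boids step. Weights and radius are assumed, untuned values.
class Flock {
    static final double NEIGHBOR_RADIUS = 5.0;
    static final double SEPARATION_W = 0.05, ALIGNMENT_W = 0.05, COHESION_W = 0.01;
    final List<Boid> boids = new ArrayList<>();

    void step(double dt) {
        // Compute all steering first so every boid reacts to the same snapshot.
        double[][] dv = new double[boids.size()][2];
        for (int i = 0; i < boids.size(); i++) {
            Boid b = boids.get(i);
            double sepX = 0, sepY = 0, avgVx = 0, avgVy = 0, cx = 0, cy = 0;
            int n = 0;
            for (Boid o : boids) {
                if (o == b) continue;
                double dx = o.x - b.x, dy = o.y - b.y;
                double d = Math.hypot(dx, dy);
                if (d > NEIGHBOR_RADIUS || d == 0) continue; // ignore far or coincident boids
                sepX -= dx / d; sepY -= dy / d;   // separation: steer away from neighbors
                avgVx += o.vx; avgVy += o.vy;     // alignment: accumulate neighbor velocity
                cx += o.x; cy += o.y;             // cohesion: accumulate neighbor position
                n++;
            }
            if (n > 0) {
                dv[i][0] = SEPARATION_W * sepX + ALIGNMENT_W * (avgVx / n - b.vx) + COHESION_W * (cx / n - b.x);
                dv[i][1] = SEPARATION_W * sepY + ALIGNMENT_W * (avgVy / n - b.vy) + COHESION_W * (cy / n - b.y);
            }
        }
        // Apply steering, then integrate positions.
        for (int i = 0; i < boids.size(); i++) {
            Boid b = boids.get(i);
            b.vx += dv[i][0]; b.vy += dv[i][1];
            b.x += b.vx * dt; b.y += b.vy * dt;
        }
    }
}
```

“More complex flocking” usually means adding terms to the same loop (obstacle avoidance, goal seeking, speed limits) and, for large schools of fish, a spatial grid so each boid only checks nearby neighbors.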

Sam

15 Apr 2013

Most of last week’s work on GraphLambda went into porting it from the Processing environment to Eclipse and implementing various under-the-hood optimizations. Accordingly, the visible parts of the application look very similar to the last incarnation. The main exception is the text-editing panel, which now indicates when it is active, supports cursor-based insertion editing, and turns red when an invalid string is entered.
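The cursor-based insertion editing and red-on-invalid behavior of the text panel can be sketched as a small editor class. This is a hypothetical illustration, not GraphLambda’s code: the class and method names are made up, `\` stands in for λ, and the validity rule is a deliberately simplified stand-in (non-empty, balanced parentheses) rather than a real lambda-calculus parser.

```java
// Hypothetical cursor-based editor for a lambda-expression string.
class ExpressionEditor {
    private final StringBuilder text = new StringBuilder();
    private int cursor = 0; // insertion point, always in 0..text.length()

    // Insert a character at the cursor and advance past it.
    void insert(char c) {
        text.insert(cursor, c);
        cursor++;
    }

    // Delete the character just before the cursor, if any.
    void backspace() {
        if (cursor > 0) {
            text.deleteCharAt(cursor - 1);
            cursor--;
        }
    }

    // Move the cursor left (negative) or right (positive), clamped to the text.
    void moveCursor(int delta) {
        cursor = Math.max(0, Math.min(text.length(), cursor + delta));
    }

    // Simplified validity check; a UI would draw the text in red when this is false.
    boolean isValid() {
        int depth = 0;
        for (int i = 0; i < text.length(); i++) {
            char c = text.charAt(i);
            if (c == '(') depth++;
            else if (c == ')' && --depth < 0) return false; // closing paren with no opener
        }
        return depth == 0 && text.length() > 0;
    }

    // Text with a '|' marker at the cursor position.
    String display() {
        return text.substring(0, cursor) + "|" + text.substring(cursor);
    }
}
```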


Accomplishments

- Eclipse!

- Mysterious crash on long input strings has vanished

- Drawing into buffers only when there is a change so I’m not slamming the CPU every frame

- Working entirely from absolute coordinates now

- Real insertion-based editing of the lambda expression, complete with cursor indicator

- Context switching from drawing to text editing

- “Tab” switching between different top-level expressions
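“Drawing into buffers only when there is a change” is the dirty-flag pattern: render to an off-screen buffer when the model changes, and reuse the cached result on every other frame. A minimal sketch, assuming a plain `int[]` as a stand-in for the off-screen image (in Processing this role is usually played by a `PGraphics` from `createGraphics()`); the class, its methods, and the placeholder renderer are hypothetical.

```java
import java.util.Arrays;

// Dirty-flag rendering: the pixel buffer is regenerated only when the model
// changes; every other frame reuses the cached pixels.
class CachedDiagram {
    private final int width;
    private final int[] pixels;    // stand-in for an off-screen image buffer
    private String expression = "";
    private boolean dirty = true;  // start dirty so the first frame renders
    int redrawCount = 0;           // counts real redraws, for illustration

    CachedDiagram(int width, int height) {
        this.width = width;
        this.pixels = new int[width * height];
    }

    void setExpression(String expr) {
        if (!expr.equals(expression)) { // ignore no-op updates
            expression = expr;
            dirty = true;
        }
    }

    // Called every frame; does real work only when something changed.
    int[] frame() {
        if (dirty) {
            render();
            dirty = false;
            redrawCount++;
        }
        return pixels;
    }

    // Placeholder renderer: clears to white, then marks one black pixel
    // per character of the expression in the top row.
    private void render() {
        Arrays.fill(pixels, 0xFFFFFFFF);
        int marks = Math.min(width, expression.length());
        for (int x = 0; x < marks; x++) {
            pixels[x] = 0xFF000000;
        }
    }
}
```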

The biggest issue that still remains is distributing the various elements of the drawing so that the logical flow of the expression is clear. Once this is done, the drawing interface must be implemented, including a method of selection highlighting.

TODOs

- Overlap minimization

- Lay in the rest of the user interface

- Selection and selection highlighting

- Implement tools

- Named inclusion of defined expressions

- Pan and zoom drawing window

Keqin

15 Apr 2013

Anna

14 Apr 2013

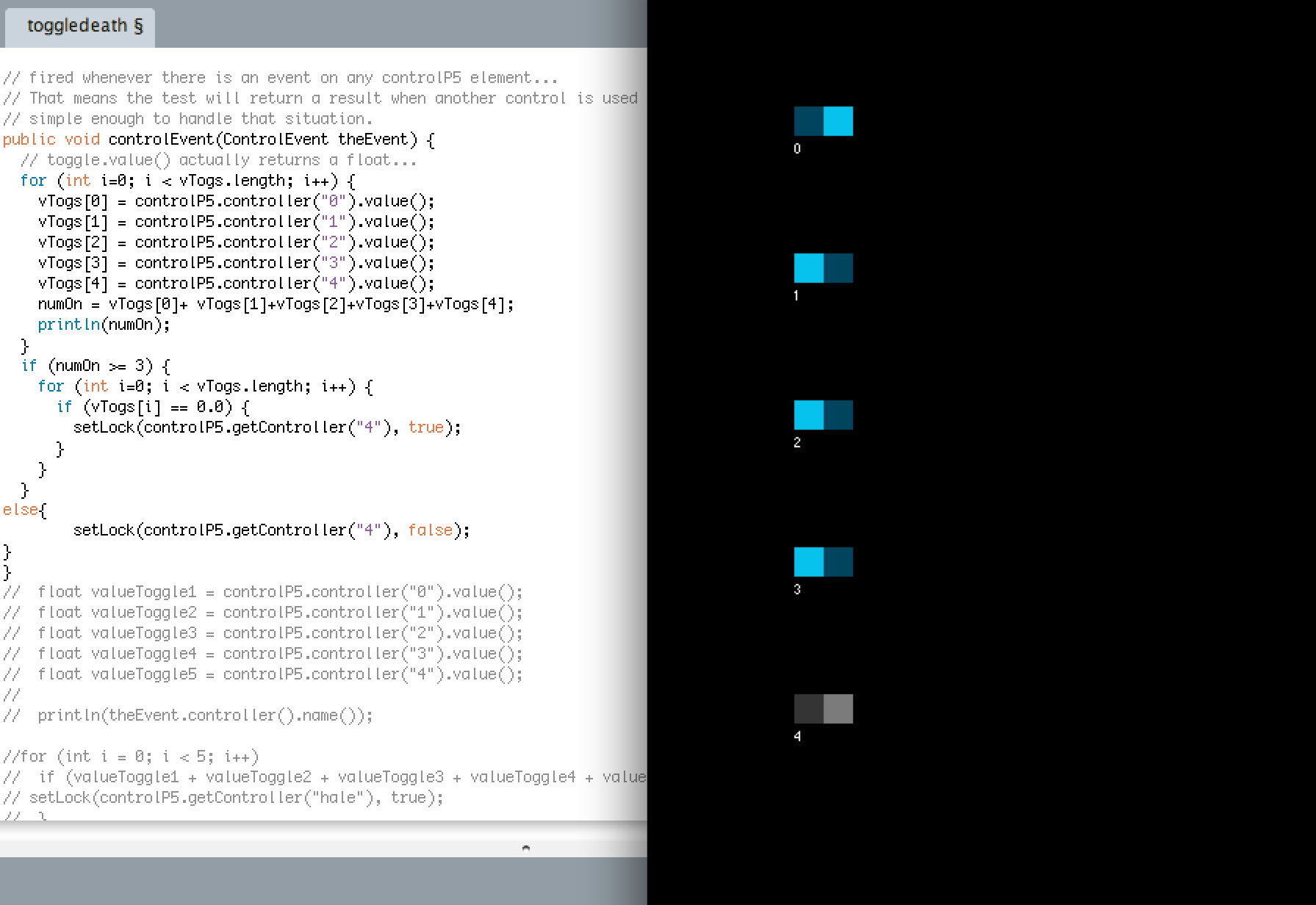

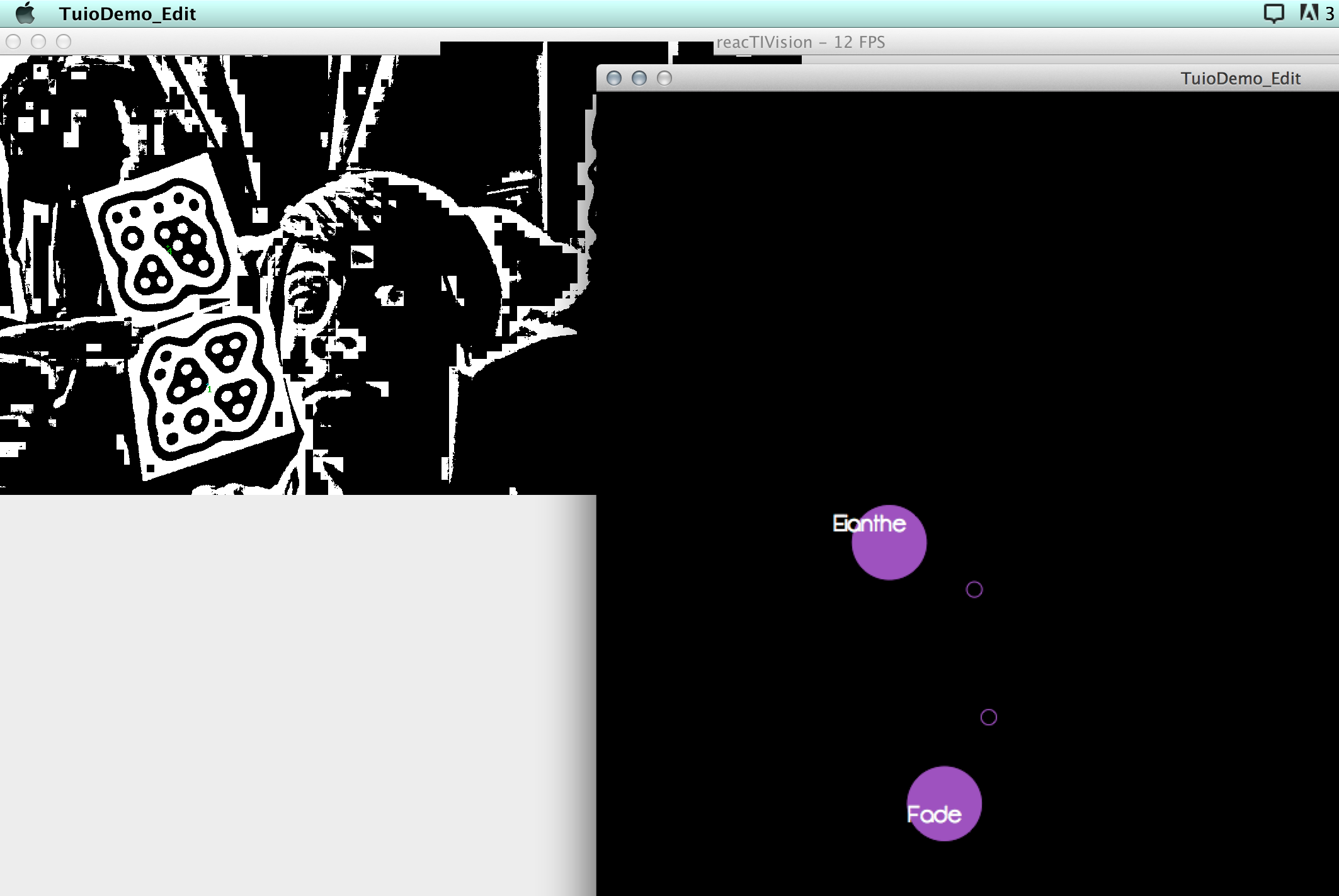

Since you last encountered me, I’ve been working on figuring out TUIO and ControlP5, and I have a few basic things working, but I still haven’t gotten two basic issues out of the way: 1) How do I make a construct that reliably holds the object IDs of the active fiducials? 2) How do I prevent more than 3 characters from being selected at the same time? Both of these seem like they should be simple, solved problems, but I can’t find anything useful on the internet.
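One possible answer to both questions, as a sketch under assumptions: keep the active fiducial IDs in a set that is updated only from the tracker’s add/remove events, and guard selection with a size check. In the TUIO Processing client those events are the `addTuioObject`/`removeTuioObject` callbacks, with `getSymbolID()` giving the fiducial ID (worth checking against the library version in use). The classes below are hypothetical and do not call TUIO directly. The methods are `synchronized` because TUIO callbacks typically arrive on a different thread from `draw()`.

```java
import java.util.Collections;
import java.util.LinkedHashSet;
import java.util.Set;

// Tracks which fiducial symbol IDs are currently on the table.
// Feed it from the TUIO add/remove callbacks.
class ActiveFiducials {
    private final Set<Integer> ids = new LinkedHashSet<>(); // arrival order, no duplicates

    synchronized void added(int symbolId)   { ids.add(symbolId); }
    synchronized void removed(int symbolId) { ids.remove(symbolId); }

    // Read-only copy, safe to iterate in draw() while callbacks keep arriving.
    synchronized Set<Integer> snapshot() {
        return Collections.unmodifiableSet(new LinkedHashSet<>(ids));
    }
}

// A selection that never holds more than MAX items; extra selections are refused.
class CappedSelection {
    static final int MAX = 3;
    private final Set<Integer> selected = new LinkedHashSet<>();

    // Returns true if the item is (now) selected, false if the cap blocked it.
    boolean select(int id) {
        if (selected.contains(id)) return true;
        if (selected.size() >= MAX) return false;
        selected.add(id);
        return true;
    }

    void deselect(int id) { selected.remove(id); }
    int size() { return selected.size(); }
}
```

Refusing the fourth selection is one design choice; evicting the oldest selection instead would only change the `size() >= MAX` branch.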

In any case, check out some awesome screenshots from my recent tinkering!

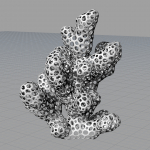

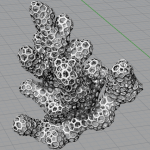

Joshua

14 Apr 2013

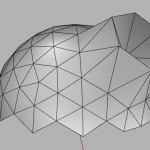

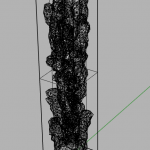

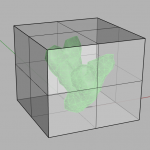

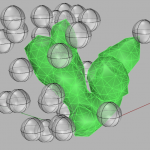

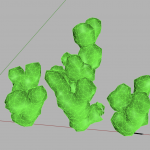

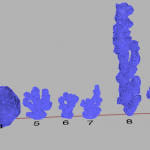

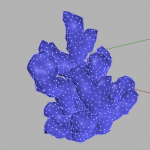

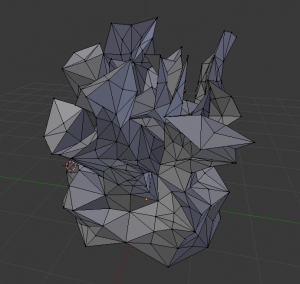

The goal is to ‘grow’ a mesh using DLA (diffusion-limited aggregation) methods: a bunch of particles moving in a pseudo-random walk (biased to move down) fall toward a mesh. When a particle, which has an associated radius, intersects a vertex of the mesh, that vertex moves outward in the normal direction by a small amount. The normal direction is perpendicular to the surface (for a mesh this is always an approximation). Long edges get subdivided; short edges get collapsed away. The results are rather spiky. It would be better if they were smoother. Or maybe it just needs to run longer.
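The core growth loop described above can be sketched as follows. Assumptions not in the post: vertex normals are supplied and held fixed, particles spawn on a square at a fixed height `SPAWN_Y`, the walk is a uniform random step plus a constant downward bias, and all constants are made up. Edge subdivision and collapse are left out, and each step tests every vertex (the slow part the “faster” ideas target).

```java
import java.util.Random;

// Simplified DLA-style growth: particles random-walk with a downward bias and,
// when one comes within CAPTURE_RADIUS of a mesh vertex, that vertex is pushed
// out along its normal. Subdivision/collapse and normal updates are omitted.
class DlaGrowth {
    static final double CAPTURE_RADIUS = 0.5, GROW_STEP = 0.05;
    static final double WALK_STEP = 0.2, DOWN_BIAS = 0.1, SPAWN_Y = 5.0;

    final double[][] pos;     // vertex positions [i][xyz]
    final double[][] normal;  // unit vertex normals [i][xyz], held fixed here
    final Random rng;

    DlaGrowth(double[][] pos, double[][] normal, long seed) {
        this.pos = pos; this.normal = normal; this.rng = new Random(seed);
    }

    // Releases one particle; returns the index of the vertex it grew, or -1.
    int releaseParticle(double spawnHalfWidth, int maxSteps) {
        double x = (rng.nextDouble() * 2 - 1) * spawnHalfWidth;
        double y = SPAWN_Y;
        double z = (rng.nextDouble() * 2 - 1) * spawnHalfWidth;
        for (int step = 0; step < maxSteps; step++) {
            // Pseudo-random walk, biased to move down (-y).
            x += (rng.nextDouble() * 2 - 1) * WALK_STEP;
            y += (rng.nextDouble() * 2 - 1) * WALK_STEP - DOWN_BIAS;
            z += (rng.nextDouble() * 2 - 1) * WALK_STEP;
            for (int i = 0; i < pos.length; i++) {
                double dx = pos[i][0] - x, dy = pos[i][1] - y, dz = pos[i][2] - z;
                if (dx * dx + dy * dy + dz * dz < CAPTURE_RADIUS * CAPTURE_RADIUS) {
                    // Captured: move this vertex outward along its normal.
                    for (int k = 0; k < 3; k++) pos[i][k] += GROW_STEP * normal[i][k];
                    return i;
                }
            }
            if (y < -SPAWN_Y) return -1; // fell past the mesh without hitting it
        }
        return -1;
    }
}
```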

Ideas for making it smoother:

- have the neighbors of a growing vertex also grow, but by an amount proportional to the distance to the growing vertex. One could say the vertices share some nutrients in this scenario, or perhaps that a given particle is a sort of vague approximation of where some nutrients will land.

- let the particles have no radius. Instead have each vertex have an associated radius (a sphere around each vertex), which captures particles. This sphere could be at the vertex location, or offset a little ways away along the vertex normal. This sphere could be considered the ‘mouth’ of the vertex.

- maybe let points fall on the mesh, find where those points intersect the mesh, and then grow the vertices nearby. Or perhaps vertices that share the intersected face.
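The first smoothing idea (neighbors sharing nutrients) could look roughly like this. The post says neighbors grow “by an amount proportional to the distance”; this sketch reads that as a weight that decreases with distance, so closer neighbors grow more, using a linear falloff that reaches zero at a made-up `SHARE_RADIUS`. That reading, the class name, and the one-ring adjacency list `neighbors` (assumed to come from the mesh) are all assumptions.

```java
import java.util.List;

// "Nutrient sharing": when vertex `hit` grows, its one-ring neighbors also move
// along their normals, by an amount that falls off linearly with distance.
class SharedGrowth {
    static final double SHARE_RADIUS = 1.0; // assumed falloff distance

    static void grow(double[][] pos, double[][] normal, List<List<Integer>> neighbors,
                     int hit, double amount) {
        double[] center = pos[hit].clone(); // measure distances before anything moves
        for (int j : neighbors.get(hit)) {
            double d = dist(pos[j], center);
            double w = Math.max(0.0, 1.0 - d / SHARE_RADIUS); // 1 at the vertex, 0 at SHARE_RADIUS
            move(pos[j], normal[j], amount * w);
        }
        move(pos[hit], normal[hit], amount); // the hit vertex gets the full amount
    }

    private static void move(double[] p, double[] n, double amount) {
        for (int k = 0; k < 3; k++) p[k] += amount * n[k];
    }

    private static double dist(double[] a, double[] b) {
        double dx = a[0] - b[0], dy = a[1] - b[1], dz = a[2] - b[2];
        return Math.sqrt(dx * dx + dy * dy + dz * dz);
    }
}
```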

Ideas for making it faster:

- Discretize space for moving particles about. This might require going back and forth between mesh and voxel space (discretized space is split up into ‘voxels’, i.e. volumetric pixels)

- moving the spawning plane up as the mesh grows so that it can stay pretty close to the mesh

- more efficient testing for each particle’s relationship to the mesh (distance or something)

This one came out pretty branchy, but it’s really jagged, and the top branches get rather thin and platelike. This is why growing neighbor vertices (sharing nutrients) might be better.
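The “discretize space” and “more efficient testing” ideas for speeding things up can be combined in a uniform voxel grid (a spatial hash): bucket vertex indices by voxel, and have each particle test only the vertices in its own voxel and the 26 around it. A sketch under assumptions: the cell size is at least the capture radius, voxel coordinates stay within about ±2^20, and the grid is rebuilt (or the moved vertex re-inserted) after vertices grow. The class is hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Uniform voxel grid over mesh vertices. With cellSize >= capture radius,
// any vertex within that radius of a point lies in the point's voxel or one
// of its 26 neighbors, so only those buckets need testing.
class VoxelGrid {
    private final double cellSize;
    private final Map<Long, List<Integer>> cells = new HashMap<>();

    VoxelGrid(double cellSize) { this.cellSize = cellSize; }

    // Packs three voxel coordinates into one key (assumes |coord| < 2^20).
    private static long key(long i, long j, long k) {
        final long off = 1L << 20, mask = (1L << 21) - 1;
        return ((i + off) & mask) << 42 | ((j + off) & mask) << 21 | ((k + off) & mask);
    }

    private long cell(double v) { return (long) Math.floor(v / cellSize); }

    void insert(int index, double[] p) {
        cells.computeIfAbsent(key(cell(p[0]), cell(p[1]), cell(p[2])), c -> new ArrayList<>()).add(index);
    }

    // Indices of vertices strictly within `radius` of (x, y, z); requires radius <= cellSize.
    List<Integer> near(double[][] pos, double x, double y, double z, double radius) {
        List<Integer> out = new ArrayList<>();
        long ci = cell(x), cj = cell(y), ck = cell(z);
        for (long di = -1; di <= 1; di++)
            for (long dj = -1; dj <= 1; dj++)
                for (long dk = -1; dk <= 1; dk++) {
                    List<Integer> bucket = cells.get(key(ci + di, cj + dj, ck + dk));
                    if (bucket == null) continue; // empty voxel
                    for (int idx : bucket) {
                        double dx = pos[idx][0] - x, dy = pos[idx][1] - y, dz = pos[idx][2] - z;
                        if (dx * dx + dy * dy + dz * dz < radius * radius) out.add(idx);
                    }
                }
        return out;
    }
}
```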

Michael

14 Apr 2013

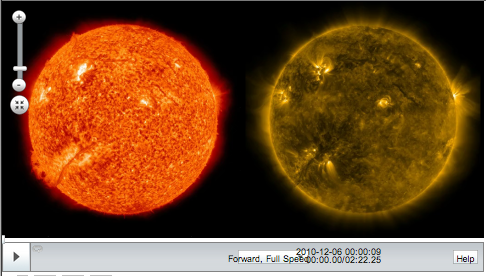

I’ve switched focus a bit since the group discussion on project ideas. I’m still focusing on the SDO imagery and displaying multiple layers of the sun time lapse, but I’ve decided that a more interesting approach to the project is to explore how people process different images of the sun when viewed through different eyes. The first technical challenge to this is to create a way to view multiple Time Machine time lapses side by side. I’ve managed to learn the Time Machine API and I’ve reworked a few things as follows:

A) Two time lapses display side-by-side at a proper size to be viewed through a stereoscope

B) Single control bar stretched beneath two time lapse windows

C) Videos synchronize on play and pause. (Synchronization on time change or position change still results in jittery performance)

The synchronization needs a bit of work still, and then comes the time to work on the interface a bit more to support changing the layers in an intelligent and intuitive way. I need to figure that out a bit and make some sketches.
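One common way to reduce jitter when keeping two players in sync is to stop mirroring every time-change event and instead check the drift periodically, seeking the follower only when it exceeds a tolerance. The sketch below shows that idea in isolation. No Time Machine API calls are shown: the `Player` interface, `ManualPlayer`, `DriftSync`, and the tolerance value are hypothetical and would need to be adapted to whatever the viewer actually exposes.

```java
// Hypothetical minimal player interface.
interface Player {
    double currentTime();   // seconds
    void seek(double time);
}

// Stand-in player whose clock is set by hand (for demonstration only).
class ManualPlayer implements Player {
    double time = 0;
    public double currentTime() { return time; }
    public void seek(double t) { time = t; }
}

// Keeps a follower aligned with a leader, correcting only when the drift
// exceeds a tolerance instead of seeking on every time-change event.
class DriftSync {
    private final Player leader, follower;
    private final double toleranceSec;
    int seeks = 0; // how many corrections were issued

    DriftSync(Player leader, Player follower, double toleranceSec) {
        this.leader = leader; this.follower = follower; this.toleranceSec = toleranceSec;
    }

    // Call periodically (e.g. a few times per second).
    void tick() {
        double drift = follower.currentTime() - leader.currentTime();
        if (Math.abs(drift) > toleranceSec) {
            follower.seek(leader.currentTime());
            seeks++;
        }
    }
}
```

Small drifts are left alone, so the follower only jumps when it is visibly out of step.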

Keqin

09 Apr 2013

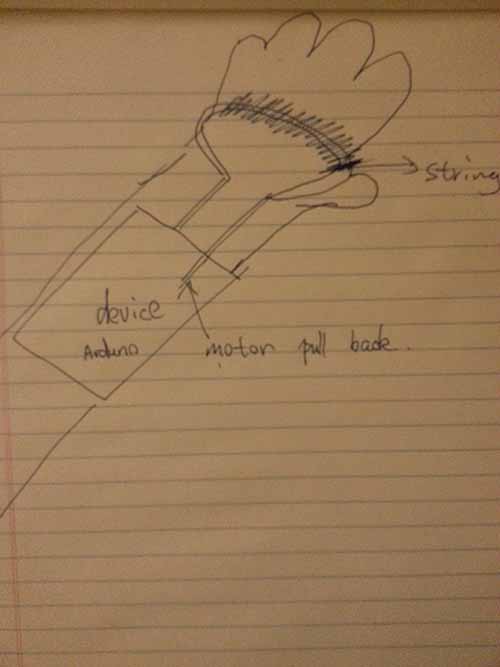

There are now many Kinect and computer vision systems. However, both kinds of system offer a very virtual experience, and I want to give their users something that feels real. Some feedback devices already exist for Kinect/CV systems, such as the haptic Phantom. Here is a video:

But I think it is a little limiting for users: they must hold a pen to feel things in the virtual world. So I am thinking of making more natural feedback for them. The basic idea is a wearable device on the back of the hand that gives you feedback when you push against something. It may not stop your movement; it may just tell you, “Hey, there’s something in front of you.” I’m thinking of using a motor for the feedback part and a Kinect to detect people’s movement. It would start as a simple haptic device for the Kinect and later maybe become a more complicated exoskeleton, which Golan told me about.
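The heart of this idea is a mapping from how close the hand is to a virtual object to how strongly the motor buzzes, signaling rather than blocking movement. A sketch assuming the Kinect supplies a hand position in meters, the virtual object is a sphere, and the motor takes an 8-bit PWM value (for example from a microcontroller). The class, the linear ramp, and `WARN_DISTANCE` are assumptions; no Kinect or serial APIs are used.

```java
// Maps the distance between a tracked hand and a virtual sphere to a motor
// intensity (0-255, as for an 8-bit PWM output). No hardware I/O is shown.
class ProximityHaptics {
    static final double WARN_DISTANCE = 0.15; // meters from the surface where buzzing starts (assumed)

    // Returns 0 when far away, rising linearly to 255 at or inside the surface.
    static int intensity(double[] hand, double[] center, double radius) {
        double dx = hand[0] - center[0], dy = hand[1] - center[1], dz = hand[2] - center[2];
        double gap = Math.sqrt(dx * dx + dy * dy + dz * dz) - radius; // distance to the surface
        if (gap >= WARN_DISTANCE) return 0;
        if (gap <= 0) return 255;
        return (int) Math.round(255 * (1.0 - gap / WARN_DISTANCE));
    }
}
```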