SankalpBhatnagar-PlayingWithFire

Hello, this is Playing With Fire

Concept: I wanted to generate an interactive 3D Flame. I haven’t had much experience with 3D programming, let alone using the types of technology I wanted to use, so I knew this would be interesting.

Inspiration: Have you ever seen fire dance? Well, I have and…

[youtube https://www.youtube.com/watch?v=lbL29DFoGfM]

…now you have too. Videos like this had always intrigued me simply because of the way the flames moved. I don’t know, maybe it was the elegant movement of such a powerful force: fire.

Either way, I wanted to explore a new field of interactivity for this project, so not really knowing my way around the majority of interaction-based programming libraries, I delved into AR toolkit after seeing images of people making cool floating shapes or figures magically appear on screen like this:

But how could I make something like this interactive? Could I make something look good?

Concepts: Over the last two weeks, I had been playing around with ideas involving “light” interactivity or possibly “iPad” interactivity, but none of those ideas ever found feasible groundwork to take off. However, when choosing between Kinect, ARtoolkit, and Reactable, I found the assignment to be more manageable (Thanks Golan!). While the Kinect is something I’ve really wanted to play with, I knew that I needed something I could actually manage, in terms of scope, rather than something that was just me playing around with cameras. Additionally, Reactables didn’t really interest me, so I decided to go with ARtoolkit.

I wanted to make a flame that responded to sound. An audio campfire. A microphone-based fire. To me, this is interesting because of its implications and possibilities. When I think of a campfire, I think of personal reflection. I personally feel that deep reflection and meditation can sometimes be ruined by the noisy environment we live in. So I planned to make a fire that would diminish if the sound in the user’s environment reached a certain threshold.
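As a rough sketch of that threshold idea (in Python rather than Processing, and not the project's actual code): the function name `flame_scale`, the `threshold` and `floor` defaults, and the linear falloff are all assumptions made for illustration. It takes a sound level normalized to the range 0 to 1 and returns a size multiplier for the flame.

```python
def flame_scale(level, threshold=0.3, floor=0.1):
    """Return a flame size multiplier in [floor, 1.0].

    `level` is an assumed sound level normalized to [0, 1].
    At or below `threshold` the flame stays at full size; above it,
    the flame shrinks linearly down to `floor` at full volume.
    """
    # Clamp so out-of-range readings cannot produce odd sizes.
    level = min(max(level, 0.0), 1.0)
    if level <= threshold:
        return 1.0
    # Fraction of the way from the threshold to maximum loudness.
    t = (level - threshold) / (1.0 - threshold)
    return 1.0 - t * (1.0 - floor)


if __name__ == "__main__":
    for level in (0.0, 0.3, 0.65, 1.0):
        print(level, round(flame_scale(level), 3))
```

A quiet room leaves the multiplier at 1.0, and the flame only starts to shrink once the level passes the threshold, which matches the "peace and quiet keeps the fire alive" intent.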

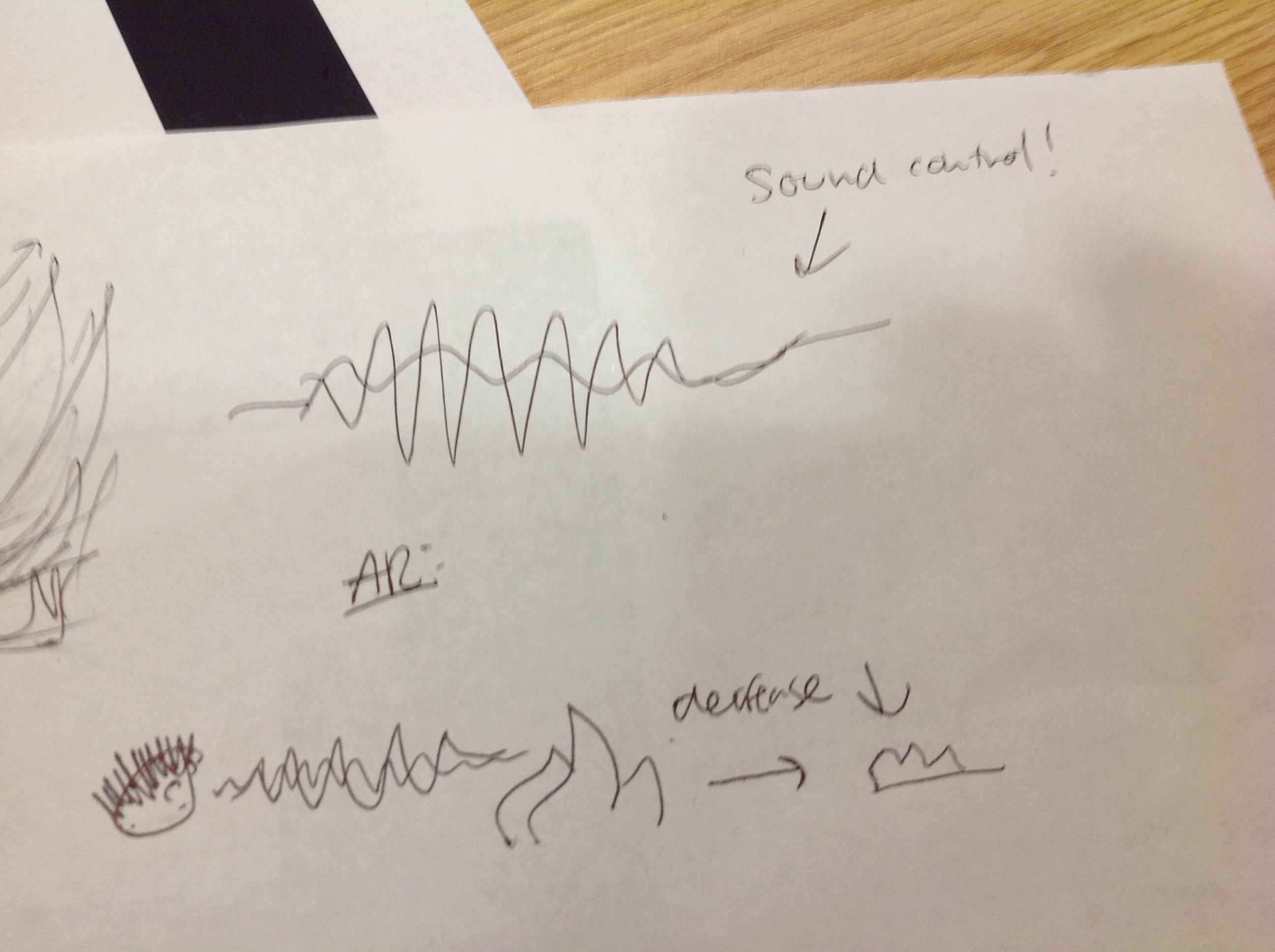

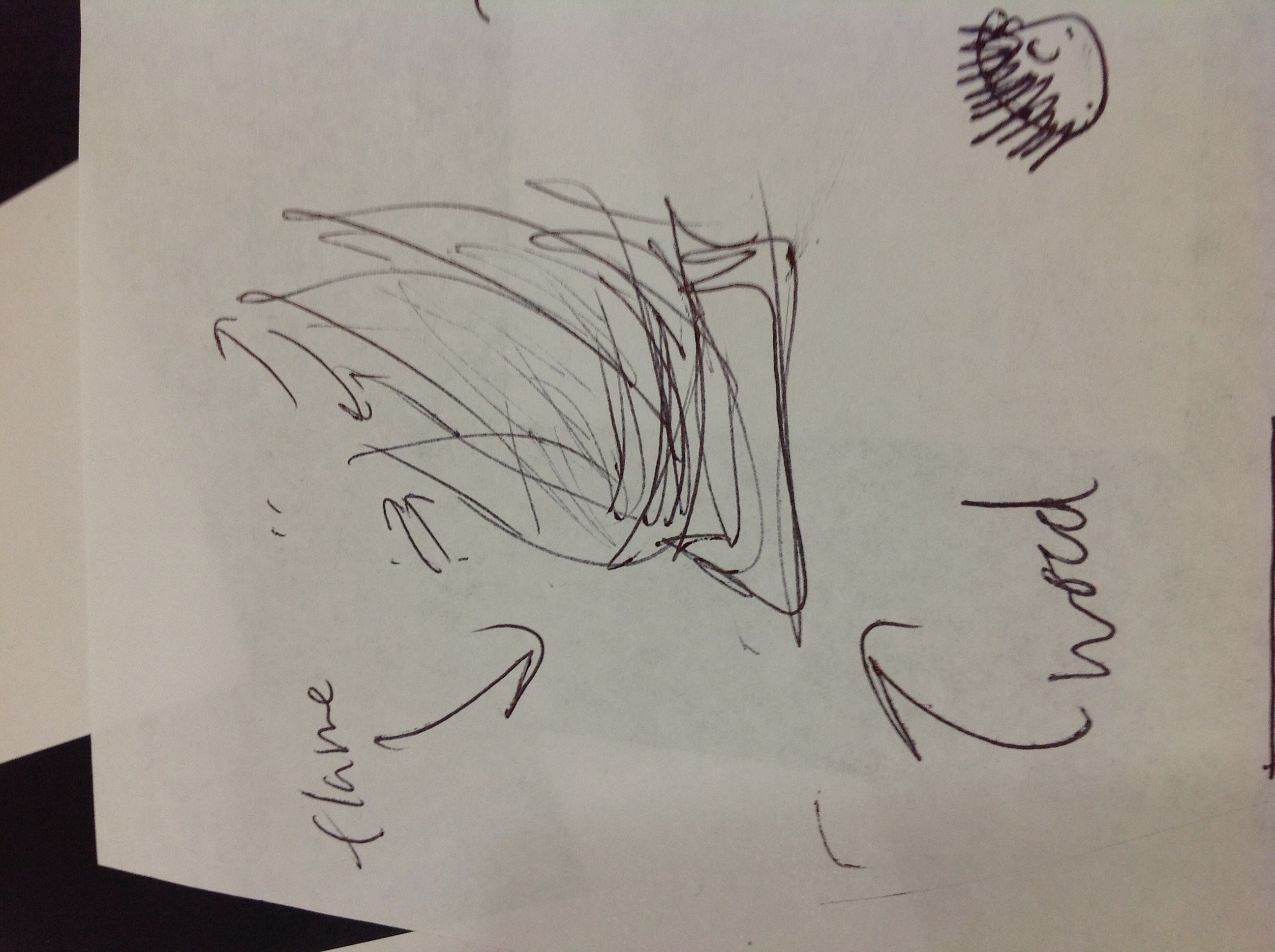

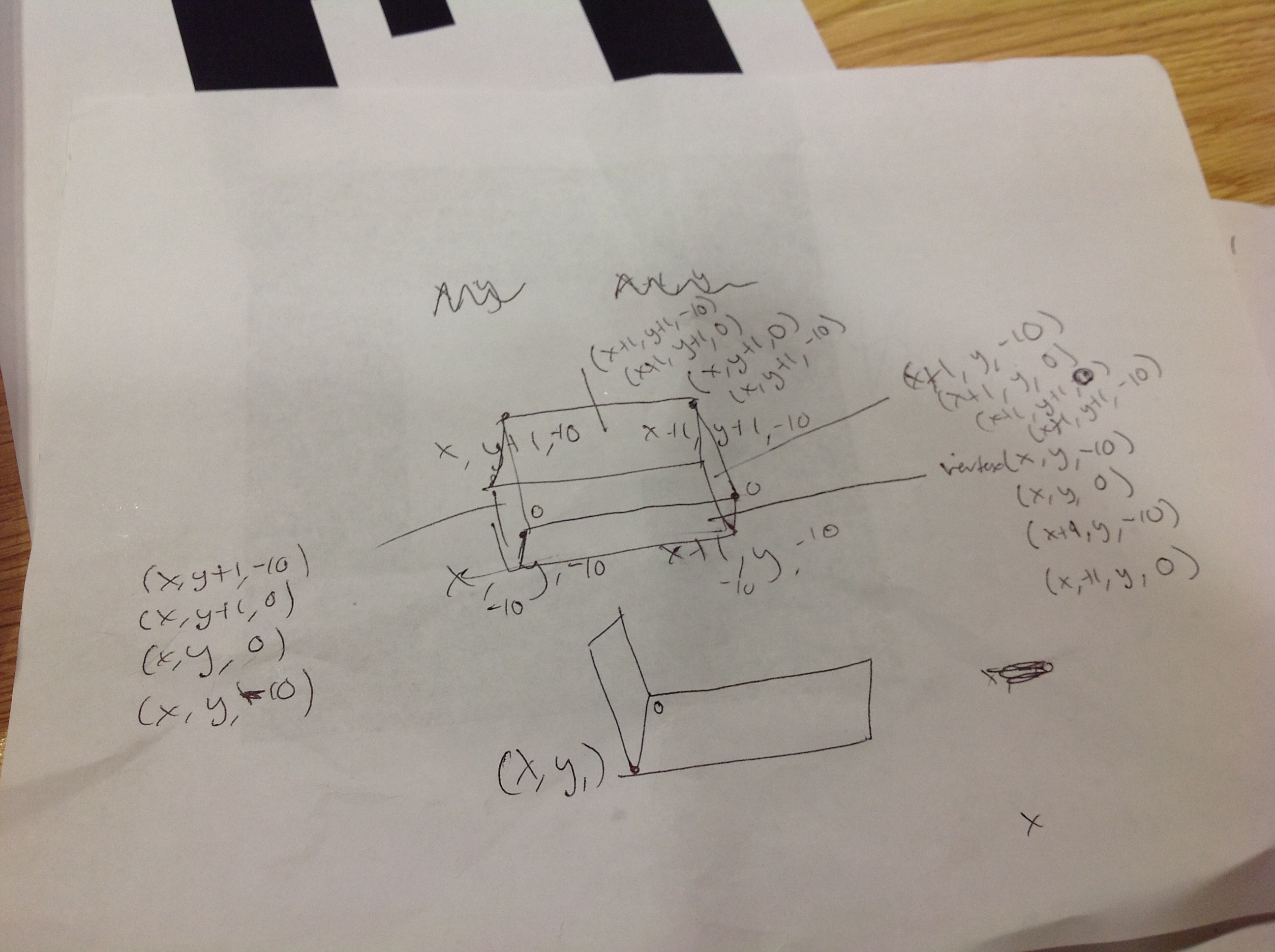

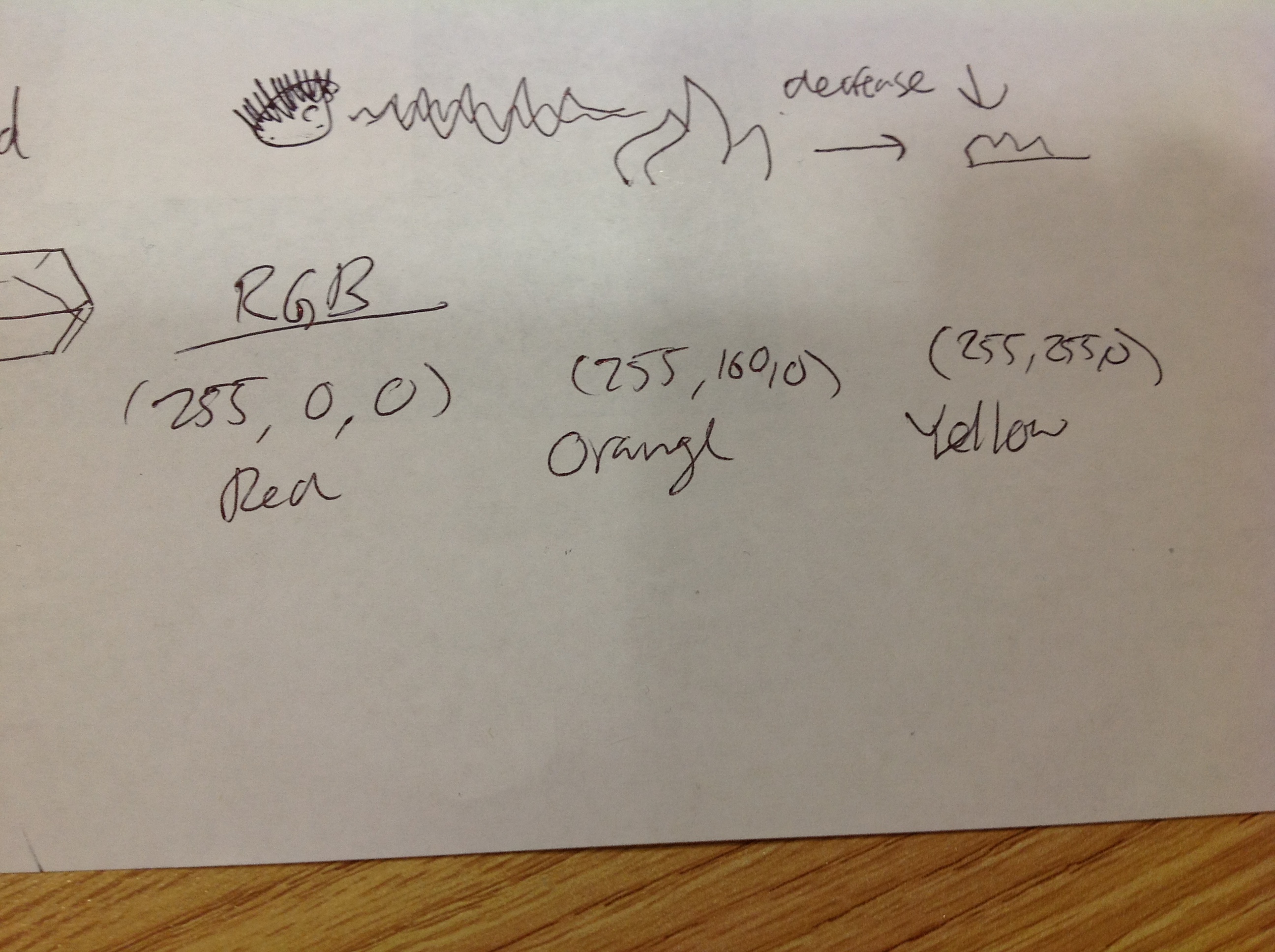

Some early sketches of what I wanted to do with this “flame” idea are below.

Initial Work:

Working with AR Toolkit wasn’t as smooth-sailing as I thought it’d be. I spent numerous hours just trying to figure out how to run the damn thing, and it was a bit frustrating. However, after I got the gist of it, it became manageable. I really had no prior experience with this sort of thing, so I played around a lot with general tutorials, shapes, and devices using my MacBook’s camera. Here are some screenshots:

Pretty interesting stuff happened when I began working with the ARToolkit and Processing. For instance, the AR objects would suddenly fly away from the AR marker, so you know, that was a thing I had to deal with. Additionally, the camera kept reading my white iPhone (and its black screen) as an AR marker, and sometimes I’d get AR objects just breaking through my screen.

Eventually, after several, several hours of getting kinks worked out and really exploring the libraries and source of the AR Toolkit package, I was able to get a fire going. After I got the fire going, I worked out the math behind making the “logs/wood” underneath the flame—so as to give it a genuine flame feel.

But the most important part, to me, was the sound: how could I get sound to be interactive? Well, as you may have seen in my sketches, I wanted my flame’s maximum height and noise patterns to be directly affected by my microphone. So I used this cool library called Minim, which can take the sound from your stereo mic and hand it to Processing as an array of numbers describing the sound pattern. Sound cool? Good.
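To make the "array of numbers to flame height" step concrete, here is a minimal sketch in Python (not Processing, and not Minim's API). It assumes the audio arrives as a list of samples in the range -1 to 1, uses the root-mean-square of that buffer as the loudness, and maps it linearly onto a height. The names `rms_level` and `flame_height` and the pixel limits are hypothetical.

```python
import math


def rms_level(samples):
    """Root-mean-square loudness of a buffer of samples in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def flame_height(samples, min_height=20.0, max_height=200.0):
    """Map the buffer's loudness onto a flame height (assumed pixel units)."""
    # Clamp to 1.0 in case the input is hotter than the expected range.
    level = min(rms_level(samples), 1.0)
    return min_height + level * (max_height - min_height)


if __name__ == "__main__":
    silence = [0.0] * 8
    loud = [1.0, -1.0] * 4
    print(flame_height(silence), flame_height(loud))
```

RMS is used here instead of a single sample because one sample can land anywhere on the waveform, including near zero in the middle of a loud passage.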

Demonstration:

This is what I came up with. Notice the flame reacting to the sound intensity of the song being played through my speakers, giving the illusion of “dancing” with the beat of the music.

[youtube https://www.youtube.com/watch?v=0s7F8WNPtHE]

Going Forward:

Yes, there’s more I can do with it and I plan on it. I would like to make the flame more realistic. I really want to get several flames, with different coded structures and varying reactions to sound, line them up, and play the song again. The only reason I used music on this was that I didn’t have multiple people around me to engage in a conversation that would make the flame react appropriately. Imagine sitting around this fire with glasses that could view the AR toolkit. Illumination when there is peace and quiet, and darkness when it gets too loud. A perfect place to sit and reflect.

Conclusion:

My programming skills have been really pushed throughout the semester. Oftentimes, I get too many creative ideas and don’t know how to implement them in proper syntax. I know my work may not be the most awesome interaction ever, but even if it’s a botched experiment to some, I feel it is a well-documented exploration into a programming territory I didn’t even know existed a few weeks back. To be honest, I’m extremely proud of my ability to stick with this AR Toolkit stuff instead of giving up. I have a long way to go, but I truly feel that IACD has given me some invaluable lessons in programming, life, and creativity.

Things I learned include:

– AR Toolkit is serious business.

– Creating shape classes is mad useful.

– Perlin noise is AWESOME, but tricky.

– Don’t give up; take a break, come back, and work.

– Never underestimate your code.

Let’s see it! There is a lot of hypothetical talk…

+1 Perhaps show more, gab less.

The final product looks like it is pretty cool (the video quality doesn’t do it justice). The concept is not super complicated so we don’t need as much introduction (too much talking).

Presentation too dark; too many words (nervous much? relax!); poor frame rate (3 fps?!). How can you address these?

I like relation of the audio’s island vibe to the volcano effect! AR tiki torches

Sankalp, you need more practice making economical presentations. A lot of words, but not much content; you’ve stated 5 times that you want to “make interactive fire”… so… get to the content faster. Next time: try showing the results first, explain after.

Might be better to show the art then talk us through the process, it’s very abstract right now (~4:00 min in)

+1

Would be nice to incorporate some sort of perlin noise to help make the fire more natural looking

Not sure if this is relevant, but for a physical take on sound-responsive fire, you might want to look at this: http://en.wikipedia.org/wiki/Rubens%27_tube

There are a lot of ways you can represent sounds as numbers… is it just the amplitude of samples or is it related to frequency (FFT)?

It looks like you’re using the amplitude of a particular sample of the audio to determine the height of the fire. You might want to average over a larger number of samples if that’s what you’re doing…
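One way that averaging could look, as a rough sketch in Python rather than Processing: keep the last few loudness readings and use their mean, so a single spiky reading cannot make the flame jump. The class name `LevelSmoother` and the window size are hypothetical, and this is a plain moving average, not anything taken from the project.

```python
from collections import deque


class LevelSmoother:
    """Moving average over the most recent loudness readings."""

    def __init__(self, window=8):
        # deque with maxlen drops the oldest reading automatically.
        self.history = deque(maxlen=window)

    def update(self, level):
        """Record a new reading and return the smoothed level."""
        self.history.append(level)
        return sum(self.history) / len(self.history)


if __name__ == "__main__":
    smoother = LevelSmoother(window=3)
    for reading in (0.0, 0.9, 0.0, 0.0, 0.0):
        print(round(smoother.update(reading), 3))
```

A larger window gives a calmer flame but a slower reaction to the beat, so the window size is a trade-off to tune by eye.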

By having the background completely dark, you are missing the “Augmented Reality” part of the ARToolkit.. <-- agree

You could have done just the animated fire. How does that connect to the environment?

It seems like you're doing some complicated process to compute the shape of the fire, but it might be faster to have a lot of randomness to initially generate the flame, then suppress it using the sound.

The simulation would look much more flame-like if it were going at 30fps.

Flamin! Hot!

Had you considered making the flame shape fractal, so that the music was affecting tiny flames on the outside of the larger flame? Might contribute to a more realistic shape.

I like the idea; it's a bit like a new take on those old video tapes you could get that were supposed to make your TV look like a fireplace

You learned a lot with the project