MahvishNagda-Project2-TasteScapes

My goal with this project was to visualize data that is not visual. I was inspired by a project by Kate McLean called Sensory Maps, where she created taste, smell, touch and see(ing) maps of Edinburgh (link). I specifically wanted to work on visualizing the smells of a place, primarily because that would be personally meaningful to me and also because I think smells are strongly associated with place. I wasn’t initially sure if this was a good direction (as far as visualizations go), but I found out about synesthesia (link & link): a neurologically based condition in which stimulation of one sensory or cognitive pathway leads to automatic, involuntary experiences in a second sensory or cognitive pathway. So some synesthetes smell or hear in color. Famous synesthetes include Wassily Kandinsky & Richard Feynman. This provided at least some basis (however vague) for this kind of mapping. Also, smellscapes are an interesting area with a lot of research (about the nose, etc). So despite the challenges, this was a pretty fun project to work on.

I initially wanted a way to visualize smells and have the data and visualization be auto-generated from data online, but smell data is hard to find. So I figured I would infer it from other sources: restaurants, landmarks (or smell-specific landmarks like grass), and possibly people-generated data like Twitter feeds about smell. I could then use some simple simulations of wind (or something like wind) and map out the smells. The final visualization would be some version of a topographic map with colors associated with each category of smell (Chinese food, grass, etc). This initial goal was definitely too ambitious.

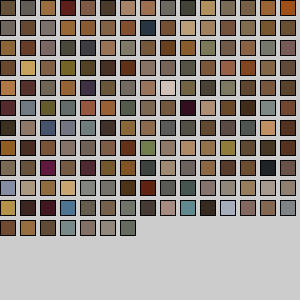

Because I was planning on playing with lots of data, I figured I needed a way to map a category of smell to a color. To do this, I played with getting images from a Flickr search, extracting the dominant colors from a set of those images, and averaging those together to get a representative color for that term. I ran initial tests by manually downloading images from the site searches on Flickr and Google Images and found that Flickr gave me better results. However, I had overlooked copyright restrictions when using Flickr’s API, so the images returned through the API were not nearly as good. At that point, I had to scrap the Flickr script and grab data from Google Images, which had deprecated their API, so I had a limit on the number of images. To get the representative color, I also looked at trying to use the histogram in some way, which was probably too naive: I added all the histograms together and then tried to use the peak colors, and that didn’t work at all. I also ran into issues with HSB in Processing and had to move to RGB, even though calculations on hue tend to work better (even when they’re slightly off). Eventually I ended up taking the average color of each image and then averaging those. The results for a few terms were pretty good: when I searched for a color name (e.g. green, yellow, pink), I did get that color back. I got yellow for happy, red for anger, brown for coffee, another brown for poop, pink-ish for french fries (ketchup + fries), and beige for Chinese food. So I figured this was good enough. Uniqueness is still an issue, but I thought this mapping was interesting enough and it let me scale. I also planned on having the visualization be interactive, so the smell category would show up when you hovered over some “smell”.
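The “average of per-image averages” step can be sketched roughly as follows. This is not the original Processing script: it is a minimal Python illustration that assumes each image has already been decoded into a list of RGB tuples, and the function names `mean_color` and `representative_color` are made up for this example.

```python
from typing import Iterable, Sequence, Tuple

RGB = Tuple[float, float, float]


def mean_color(pixels: Iterable[Sequence[float]]) -> RGB:
    """Return the channel-wise mean of a collection of RGB values."""
    totals = [0.0, 0.0, 0.0]
    count = 0
    for r, g, b in pixels:
        totals[0] += r
        totals[1] += g
        totals[2] += b
        count += 1
    if count == 0:
        raise ValueError("cannot average an empty set of pixels")
    return (totals[0] / count, totals[1] / count, totals[2] / count)


def representative_color(images: Iterable[Iterable[Sequence[float]]]) -> RGB:
    """Average each image first, then average those per-image colors.

    Every image gets equal weight regardless of its size, so one very
    large image cannot dominate the result for a search term.
    """
    per_image = [mean_color(pixels) for pixels in images]
    return mean_color(per_image)


# Hypothetical input: two tiny "images" returned for one search term.
images = [
    [(255, 0, 0), (255, 0, 0)],  # an all-red image with two pixels
    [(0, 0, 255)],               # an all-blue image with one pixel
]
color = representative_color(images)
```

Averaging in RGB like this tends to pull results toward muddy browns and beiges when the images are varied, which may be part of why uniqueness is an issue.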

Apart from color data, smell data was pretty much my primary challenge. I spent a lot of time looking at different data sources and evaluating how useful they would be. I searched geotagged Twitter feeds for terms like “smell” and got results like “smells of jealousy”, which was interesting but not for this visualization. I figured restaurant data would be easy to find, especially since we see it everywhere, but all the APIs I looked at had strict limits on how many requests I could make, which made this task much harder. I also wanted categories for the restaurants, and not all the APIs had that. At that point, I decided to ditch the smell idea and just play with taste. I initially looked at a bunch of APIs: Google Places, Yelp and FourSquare. Google Places did not have categories for the places, so I decided not to use it. Yelp has great data but only allows 500 requests a day and a max of 40 results per request. I learned this only after I had scraped the site for about 10K restaurants in Manhattan and realized some of them were missing. At that point, I had to go back and get data from FourSquare: their categories aren’t as great and the data is also not as clean, but it was the next best option. It has a limit of 5000 requests & 50 results per request. I was able to get 25K restaurants and 172 categories. The script I wrote stepped through tiny grid cells within a bounded location, on the assumption that each cell would contain fewer restaurants than the per-request cap (40 for Yelp, 50 for FourSquare). With the FourSquare data, the “Food” category also had things like Yoga Studio, so it needed to be cleaned up.
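The grid approach can be sketched like this. It is a rough Python illustration rather than the actual script: `fetch` stands in for whatever function calls the venue API for one cell, and the `"id"` key and the `cap` parameter are assumptions made for the example. Flagging cells that come back full is one way to notice when the “fewer results than the cap” assumption breaks and restaurants may be missing.

```python
from typing import Callable, Dict, Iterator, List, Tuple

Cell = Tuple[float, float, float, float]  # (south, west, north, east)


def grid_cells(south: float, west: float, north: float, east: float,
               rows: int, cols: int) -> Iterator[Cell]:
    """Split a bounding box into rows x cols equally sized cells."""
    lat_step = (north - south) / rows
    lng_step = (east - west) / cols
    for i in range(rows):
        for j in range(cols):
            yield (south + i * lat_step, west + j * lng_step,
                   south + (i + 1) * lat_step, west + (j + 1) * lng_step)


def collect_venues(bounds: Cell, rows: int, cols: int,
                   fetch: Callable[[Cell], List[dict]],
                   cap: int = 50) -> Tuple[Dict[str, dict], List[Cell]]:
    """Query every cell once, de-duplicating venues by their id.

    Returns the venues plus the list of cells whose result count reached
    the cap; those cells probably need to be subdivided and re-queried.
    """
    venues: Dict[str, dict] = {}
    saturated: List[Cell] = []
    for cell in grid_cells(*bounds, rows, cols):
        results = fetch(cell)
        if len(results) >= cap:
            saturated.append(cell)
        for venue in results:
            venues[venue["id"]] = venue  # same venue from two cells counts once
    return venues, saturated


def fake_fetch(cell: Cell) -> List[dict]:
    """Stand-in for a real API call: one unique venue plus one shared one."""
    return [{"id": f"{cell[0]}:{cell[1]}"}, {"id": "shared"}]


venues, saturated = collect_venues((0.0, 0.0, 2.0, 2.0), 2, 2, fake_fetch, cap=2)
```

The number of cells is also the number of requests, so the grid resolution has to be balanced against the daily request limit.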

Finally, once I was able to do that, I had to write a script that would take that data and plot it on a map. I used TileMap for the background image and also looked at CloudMade (but I couldn’t export the map/tiles there). I initially used Till Nagel’s Mercator library to map the points, but realized the visualization is pretty much pointless without an interactive legend, so I ported it to the Unfolding library. I wasn’t able to make the legend interactive (so you can select a point and have the matching tastes show up), but I am pretty close.
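For reference, placing a latitude/longitude pair on a Mercator map image comes down to a small formula. The sketch below uses the standard Web Mercator convention for tiled web maps; it is not the API of Till Nagel’s library or of Unfolding, and the function name `latlng_to_pixel` and the 256-pixel tile size are assumptions for this example.

```python
import math
from typing import Tuple


def latlng_to_pixel(lat: float, lng: float, zoom: int,
                    tile_size: int = 256) -> Tuple[float, float]:
    """Project WGS84 degrees to Web Mercator pixel coordinates.

    The whole world is a square of tile_size * 2**zoom pixels, with
    (0, 0) at the top-left corner (lng = -180, lat of roughly 85.05).
    """
    world = tile_size * (2 ** zoom)
    x = (lng + 180.0) / 360.0 * world
    lat_rad = math.radians(lat)
    # ln(tan(lat) + sec(lat)) is the Mercator "stretched" latitude.
    merc = math.log(math.tan(lat_rad) + 1.0 / math.cos(lat_rad))
    y = (1.0 - merc / math.pi) / 2.0 * world
    return (x, y)


# The equator / prime meridian lands in the center of the world square.
center = latlng_to_pixel(0.0, 0.0, zoom=0)
```

To draw onto a cropped background image, subtract the pixel coordinates of the image’s top-left corner from each projected point.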

Map

Colors

So overall, this project was a slight disappointment. Maybe with a little more time, I could get more interesting information from the dataset, but I wasn’t able to get what I wanted and my hunches about colors didn’t really pan out. Things turned out very differently from what I had initially thought they would. Although I wasn’t happy with my final product, I learned a LOT during this assignment, primarily about process and getting good data. I also used a lot of tools: Processing JSON library, FourSquare 2 API, OAuth2.0 library for Processing (Scribe), Yelp 2.0 API, Flickr API, Google Images API, Open CSV, colorlib (toxiclibs), Color Summarizer API, Unfolding library, Till Nagel’s Mercator Map.

Quick Demo of Interactive Map

https://www.youtube.com/watch?v=YBD6capBvnU

======================================

Mahvish

wow- seems like you learned a lot about available restaurant APIs :P

** Seems like you learned a lot, period!

does the color of the dots relate to the categories of restaurants? (AH HA you just showed a screenshot that it does)

this should be reflected in the key on the right!!

choose colors yourself based on genre; or just pick them equidistant from each other on the hue scale; maybe based on longitude/latitude since presumably different world styles will be more similar in taste (and then in color). Maybe filtering would also help, like being able to turn off/on different categories. I think you have enough data, block-level is fine when you’re showing the entire city. You’ve done a shit ton of work, wow.

I agree with the others. it will be better if colors are associated with different characteristics of restaurants.

It seems like you have too many categories for the colors to be recognizably different

** I agree with what you said about clustering. It would have helped to learn some interesting things from the map.

I think you’ve done quite a lot in terms of data gathering. I think if you used smaller dots and fewer categories, like Italian or Mexican, you could plot them on the canvas and see if it painted the picture of Manhattan. This would give you a sense of whether Italian restaurants are all over or missing from some area.

What about associating the colors with people’s perception of the food? Ask some people the color they associate with certain food, and average that? That might have taken you back to understanding something new, like the place where you started from – smells that you cannot sense.

maybe the scale of this makes it hard to understand… i would like to see you try a smaller area (maybe craig street, maybe pittsburgh) so you can actually connect the dots to specific locations; and maybe even locations that we know

I can empathize with the difficulties of running into barrier after barrier! I find it interesting how many different methods you explored to obtain your vision for this visualization. The colors are pretty tough. I also like how you know what went wrong and what you would do to fix it.

Olfactory stuff in general is really difficult!

Are you aware of “digital nose” sensors?

I know you’re bummed that you went down multiple dead-ends, but you had a lot of learning. Now you know what’s difficult! :) Part of the function of this class is to develop an intuition for how to create and solve problems… this is how you get it.

Yeah, you can’t use 175 different colors for 175 different categories. Our ability to read charts is limited to about 5 +/-2 categories.

THIS! Really should be able to go down to about 10-20 at least.

You have to learn how to get around API limits. There are ways :)

I think visually it is pretty successful. It looks like you have some selection problems. Also it is clear from your explanation that your original idea didn’t pan out but I think you were able to recover pretty gracefully. I think projects often change over the course of their development and that is great.

It seems like you noted it. But it is a bit odd you have these specific colors noted for each restaurant, but there is no visual key on screen to read the colors.

Good to hear process, but would also be great to emphasize solutions and achievements, should they be present. Even if it’s the solutions for next time.

I think you’re selling yourself short on the value of your mistakes. Knowing what doesn’t work and developing an intuition for blind alleys is a useful thing. I found myself wishing that there were more pictures of all the things you described earlier. Whether it was just screen grabs highlighting why the data didn’t work, or actual visualizations that did not come out as expected, I would have liked to see more images than text.

I think the original idea of visualizing a sense that’s impossible to see is a very interesting premise. It’s unfortunate that there were so many difficulties getting to the final product, but as everyone else said, it’s great that you learned so much.

**i agree, maybe color is not the best way to substitute the sense, maybe another sense like touch would be better

…wait where are these “happy or sad” words coming from?

It might be interesting to make a “food color map” of the world. What if you colored mexico with the average color of “mexican food” [images], colored korea with the average color of “korean food” images, etc. It might work!

**yeah I think cutting ties with your original idea would have been okay. It feels like you didn’t really embrace your new idea because you were convinced that you had failed in your first idea.

You probably want to put more images into your blog post/presentation to break up all the text.

Check out Toxiclibs’ color library for Processing. It will basically give you a bunch of harmonious colors and you can ask it for ones that are more distinct than the ones you’ve chosen for a more interesting/revealing map. And I agree that you should definitely narrow your categories of restaurant so it’s more individually revealing from the zoom out.

I’m a bit confused by the grouping of colored circles. I know what they represent, but it all overwhelms me. Also, numbering the categories is a bit distracting.

Using so many color spaces doesn’t seem to be in your interest, especially if the color correlates to color of food.

The final product is attractive but not particularly informative. You are using way too many colors for someone to keep track of. Maybe you could have grouped all the restaurants into 8 or fewer general types or ethnicities. Also, Manhattan is going to be a big melting pot with every kind of restaurant present. It would be cool to have produced a comparison of Manhattan with a more homogeneous city.

zooming into the areas or streets would be good.

In another iteration it’d be nice to be able to show/hide different cuisines so that drilling down you’d be able to compare just 1 or 2 cuisines +1

This is great. However, I wish I could get a clearer sense of the transition from the data to the visual.

Maybe add in a part where the user can input a more specific area they would like to go so that it narrows down the selection so more detail can be viewed.