Project 4_ Cutback Remix_Deren Guler

This is a new version of a project that I started a while ago but haven’t had time to really push through. It’s pretty pointless, but I am extremely amused by household objects that respond to you. Chambers Judd has a series of small projects about giving inanimate household objects emotions, such as the “antitouch lamp” that backs away from you and the sneezing radio that cleans itself. Other motivations to keep going include Blendie and Noisy Jello.

I set out to make a cutting board that responds to you when you cut on it. The first version used 4 piezo sensors under a layer of acrylic; when activated, they played a tone programmed using the Arduino toneMelody example. Basically, the more you cut on it, the more of the song you would play. Another version used the Arduino Auduino library, with homemade force sensors acting as inputs to a synth, allowing you to make crazy noises while you cut. I got some interesting feedback from the initial attempt. I thought that the cutting board should respond in pain to being cut on, the way a human would, but many people said they would prefer it to make a more pleasant noise. Then came the question, “What if the cutting board knew what was on it and responded accordingly?” I have yet to think of an easy way to sense raw meat vs. vegetables, or a really practical reason to try to figure it out, so if you have any ideas please let me know! (The only thing I would want to do is make a cutting board that knows when you are cutting a vegetable and then fills with blood-looking liquid, or squirts it at you.)
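The "more you cut, more of the song" behaviour of that first version can be sketched roughly like this. It is not the original code, just a minimal illustration in plain C++ so it can be tried off the board. The names `MelodyStepper`, `kHitThreshold` and `kMelody`, the threshold value and the notes are all made up for the example.

```cpp
#include <cstddef>

// Hypothetical values: tune the threshold to your own piezo readings.
constexpr int kHitThreshold = 100;
// Placeholder melody (a C major scale, frequencies in Hz).
constexpr int kMelody[] = {262, 294, 330, 349, 392, 440, 494, 523};
constexpr std::size_t kMelodyLength = sizeof(kMelody) / sizeof(kMelody[0]);

struct MelodyStepper {
    std::size_t nextNote = 0;  // index of the note the next cut will play
    bool wasAbove = false;     // was the previous reading over the threshold?

    // Feed in one sensor reading. Returns the frequency to play when a new
    // cut is detected, or 0 when nothing new happened.
    int update(int reading) {
        const bool isAbove = reading > kHitThreshold;
        int frequency = 0;
        if (isAbove && !wasAbove) {  // rising edge: one cut, one note
            frequency = kMelody[nextNote];
            nextNote = (nextNote + 1) % kMelodyLength;  // loop the song
        }
        wasAbove = isAbove;
        return frequency;
    }
};
```

On the Arduino you would call `update(analogRead(A0))` inside `loop()` and pass any non-zero result to `tone()`. Only reacting to the rising edge keeps one long press of the knife from racing through the whole song.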

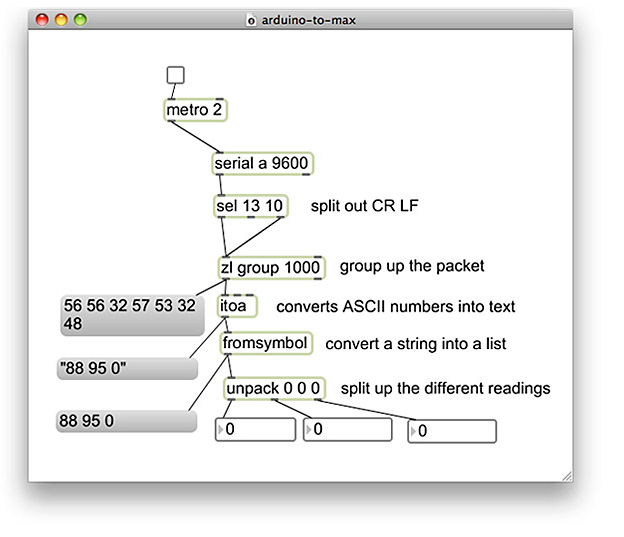

So! In this version I changed how the sensors are embedded (because the last one broke after less than an hour of use) and decided to try to interface with MAX/MSP. I used a Serial patch in MAX to read the analog input data from the piezo sensors in the cutting board. I also decided to try sticking the sensors in the wood, rather than sandwiching them between two layers of wood. I experimented with pouring epoxy into some milled-out channels in the wood with the sensors sitting in the channels, and found that they could still sense cuts through the resin. I was pretty excited about this, because I think the mix of resin and wood can look really nice if you do it well. I’m still working on that part, but I think the latest version is not so bad. I also experimented with where to put the sensors, because I wanted to be able to differentiate between chopping and slicing.

Here is the Arduino code for detecting the different kinds of cuts with the previous board:

    // Previous readings, kept between passes of loop() so that a later
    // version can compare against them (not used yet).
    int sensorValue1old = 0;
    int sensorValue2old = 0;
    int sensorValue3old = 0;

    void setup() {
      Serial.begin(9600);
    }

    void loop() {
      // read sensor data
      int sensorValue1 = analogRead(A0);
      int sensorValue2 = analogRead(A1);
      int sensorValue3 = analogRead(A3);
      int sensetotal = sensorValue1 + sensorValue2 + sensorValue3;

      if (sensetotal > 200) {  // middle sensor, pounding
        Serial.print(sensorValue3, DEC);
        Serial.print(" ");
      }

      if (sensorValue2 > 0) {  // end sensors, slicing
        Serial.print(sensorValue2, DEC);
        Serial.print(" ");
      }

      // remember this pass's readings for the next one
      sensorValue1old = sensorValue1;
      sensorValue2old = sensorValue2;
      sensorValue3old = sensorValue3;
    }
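The decision logic in that sketch can also be pulled out into a plain function, which makes it easy to try different thresholds away from the board. This is only a sketch: `CutType` and `classifyCut` are hypothetical names, and the thresholds (200 and 0) are simply copied from the code above. One difference is an assumption on my part: the Arduino code can report both kinds of cut in the same pass, while this version picks one and prefers pounding.

```cpp
// Hypothetical labels for what the board thinks is happening.
enum class CutType { None, Pounding, Slicing };

// Takes the three raw sensor readings in the same order as the sketch above.
CutType classifyCut(int sensor1, int sensor2, int sensor3) {
    const int total = sensor1 + sensor2 + sensor3;
    if (total > 200) {   // a lot of force overall: pounding
        return CutType::Pounding;
    }
    if (sensor2 > 0) {   // any signal on the end sensor: slicing
        return CutType::Slicing;
    }
    return CutType::None;
}
```

For example, `classifyCut(0, 20, 0)` gives `CutType::Slicing`, while `classifyCut(100, 50, 100)` gives `CutType::Pounding`.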

And for sending data to MAX/MSP:

The MAX patch is adapted from a tutorial by Jason Safir.

I have the slicing input playing a weird violin file and the chopping input playing some bongo drums. The nice part about the MAX/MSP setup is that you can easily switch out the sounds. Maybe a child could mess around with that part, and the mom could play the sounds while she is making dinner? This would require some Bluetooth action, but I think it is doable once I get a little more comfortable with serial communication and MAX/MSP.